Twenty-seven years ago tonight, an ill-prepared overnight crew at reactor #4 of the Chernobyl nuclear power station in the Ukraine began an unauthorized experiment. Originally scheduled to run during the day, the test was designed to determine whether the plant's turbine, while coasting down, could supply enough power to keep emergency systems running until the backup generators kicked in. Trouble is, this particular reactor type was known to be unstable at low power even at the best of times. And these were not the best of times: the reactor was operated by an inexperienced crew, and it was suffering from “poisoning” by neutron-absorbing xenon gas, the result of prolonged low-power operation before and during the preparations for the test.

The rest, of course, is history: reactor #4 blew up in what remains the worst nuclear accident ever. A large area around the Chernobyl plant is still contaminated. The city of Pripyat is still a ghost town. And a great many people were exposed to radiation.

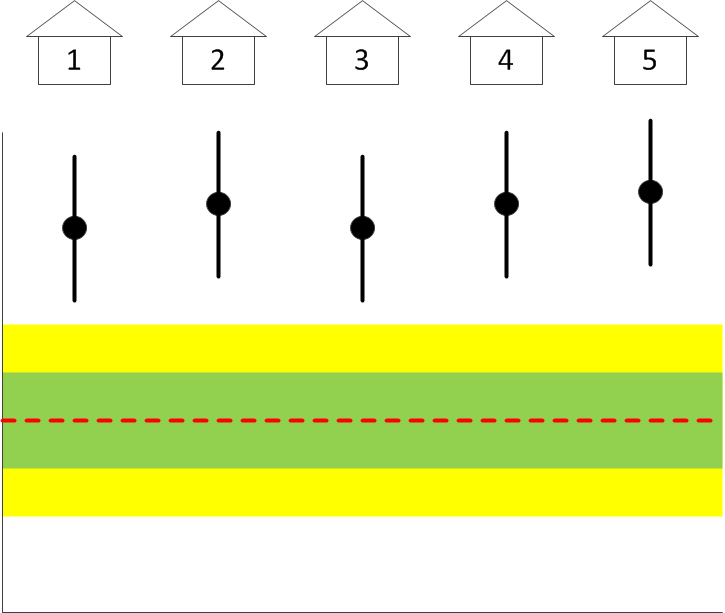

The number of people killed by the Chernobyl disaster remains a matter of dispute. Most studies I’ve read estimate several thousand deaths attributable to the accident and the resulting increased risk of cancer. But a recent paper by Kharecha and Hansen (to be published in Environ. Sci. Technol.) cites a surprisingly low figure of only 43 deaths directly attributable to the accident.

This paper, however, is notable for another reason: it argues that the number of lives saved by nuclear power vastly exceeds the number of people it has killed. The authors assert that nuclear power has already prevented about 1.8 million pollution-related deaths, and that many millions of additional deaths can be prevented in the future.

I am sure this paper will be challenged, but I find it refreshing. For what it’s worth, I’d much rather have a nuclear power plant in my own backyard than a coal-fired power station. Of course, the more powerful our machines are, the bigger the noise they make when they go kaboom; but that has not stopped us from using airplanes or automobiles either.

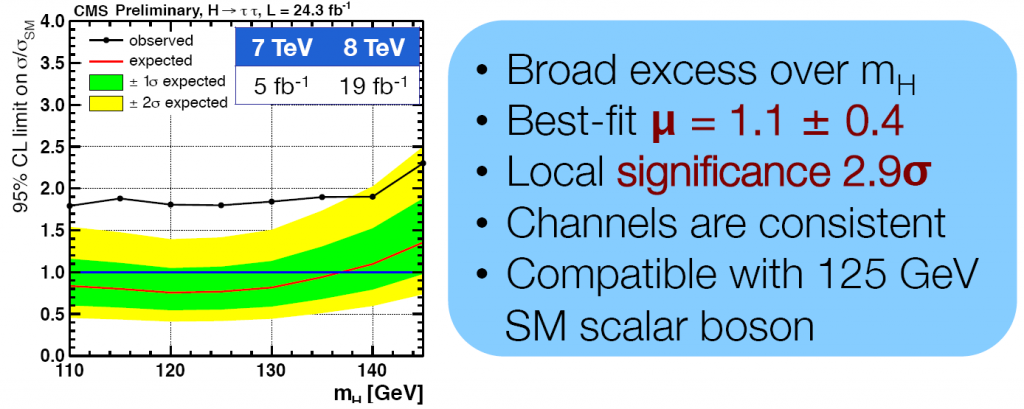

It’s official (well, sort of): global warming

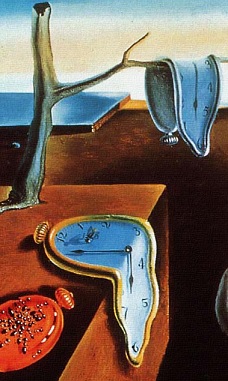

To the esteemed dinosaurs in charge of whatever our timekeeping bureaucracies happen to be: stop this nonsense already. We no more need daylight saving time in 2013 than we need coal rationing.

Chances are that if you tuned your television to a news channel these past couple of days, it was news from the skies that filled the screen. First, it was about asteroid 2012 DA14, which flew by the planet at a relatively safe distance of some 28,000 kilometers. But even before this asteroid reached its point of closest approach, there was the striking and alarming news from the Russian city of Chelyabinsk: widespread damage and about a thousand people injured by a meteor that exploded in the atmosphere above the city.

I always thought of myself as a moderate conservative. I remain instinctively suspicious of liberal activism, and I do support some traditionally conservative ideas, such as smaller government, lower taxes, and individual responsibility.

John Marburger had an unenviable role as Director of the United States Office of Science and Technology Policy. Even before he began his tenure, he already faced a demotion: President George W. Bush decided not to confer upon him the title “Assistant to the President on Science and Technology”, a title borne by both his predecessors and his successor. Marburger was also widely criticized by his colleagues for his efforts to defend the Bush Administration’s scientific policies. He was not infrequently labeled a “prostitute” or worse.