No, AI did not kill StackOverflow or, more generally, StackExchange. The site’s decline began long before that.

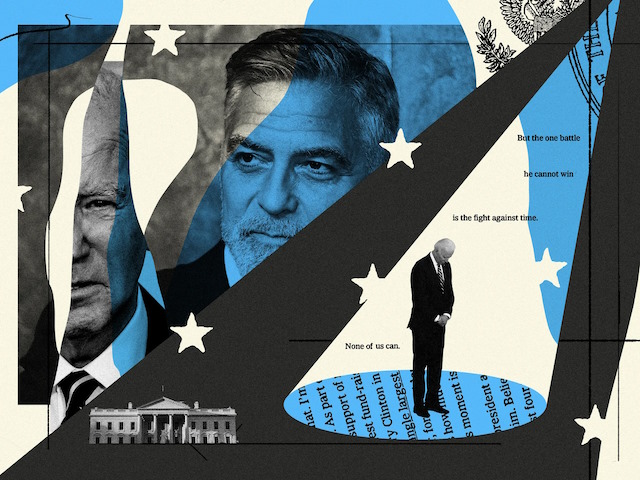

I fear I have to agree with this sobering assessment by InfoWorld, based on my own personal experience.

I’ve been active (sort of) on StackExchange for more than 10 years. I had some moderately popular answers, this one at the top, mentioning software-defined radio. I think it’s a decent answer and, well, I was rewarded with a decent number of upvotes.

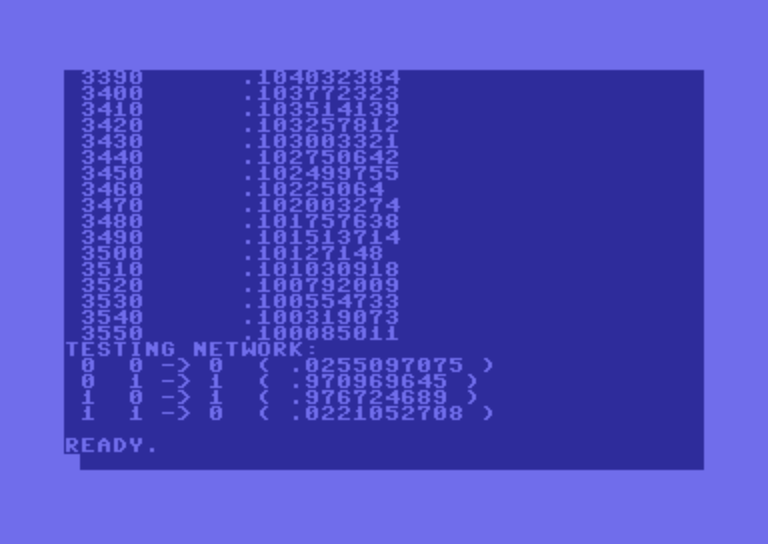

I also offered some highly technical answers, such as this one, marked as the “best answer” to a question about a specific quantum field theory derivation.

Yet… I never feel comfortable posting on StackExchange and indeed, I have not posted an answer there in ages. The reason? The site’s moderation.

Moderation rights are granted as a reputational reward. This seems to make a lot of sense until it doesn’t. As InfoWorld put it, the site “became an arena where you had to prove yourself over and over again.” Apart from the fact that it was rewarding moderators for their ability to cull what they deemed irrelevant, there is also a strong sense of the Peter principle at play. People who are good at, say, answering deeply technical questions about quantum field theory may suck as moderators. Yet they are promoted to be just that if they earn enough reputation with their answers.

In contrast, Quora, for all its numerous faults, maintained a far healthier balance. Some moderation is outsourced: e.g., “spaces” are moderated by their owners, and comments are moderated by those who posted the answer. But overall, moderation remains primarily Quora’s responsibility and, most importantly, it is not gamified. I’ve been active on Quora for almost as long as I’ve been active on StackExchange, but I remain comfortable using (and answering on) Quora in ways I never felt when using StackExchange.

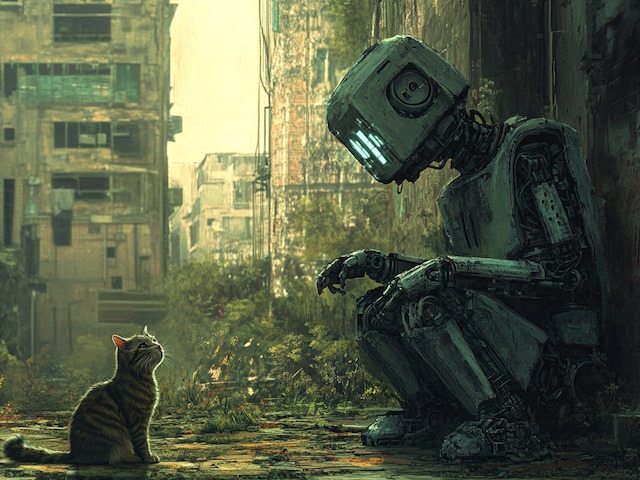

A pity, really. StackExchange has real value. But there are certain aspects of a social media site that, I guess, should never be gamified.