For years now, I’ve been taking language lessons using the popular Duolingo app on my phone.

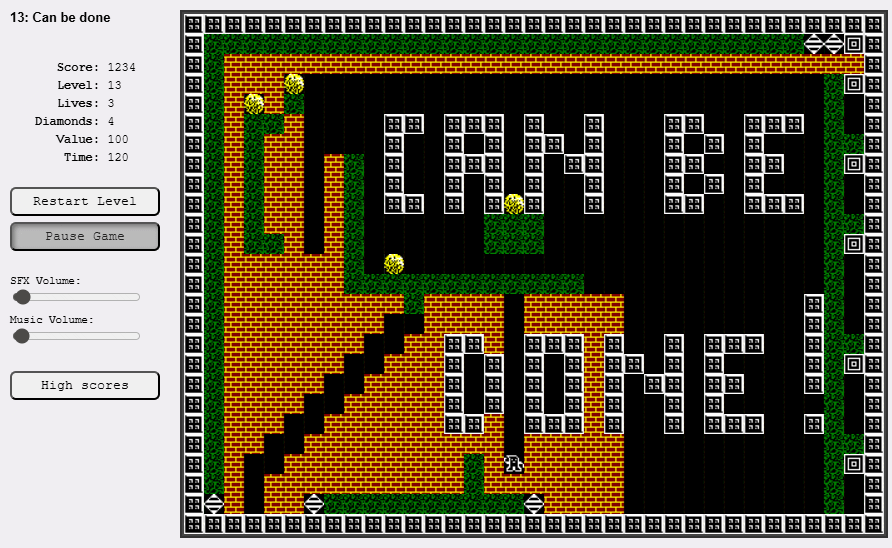

Duolingo not only offers lessons, it also rewards you. You gain gems. You gain experience points. You are promoted to ever higher “leagues”, culminating in the “Diamond League”, and even beyond that, there are special championships.

For a while, I did not care. But slowly I got promoted, one league at a time, as I conscientiously took a lesson each evening, in part, I admit, in order not to lose my “streak”. One day, I found myself in the “Diamond League”.

Needless to say, this was not a status I wanted to lose! So when my position became threatened, I did what many other players, I mean, Duolingo users, likely do: I looked for cheap experience points. Take math lessons, for instance! Trivial arithmetic that I could breeze through in seconds, just to gain a few more points.

Long story short, eventually I realized that I was no longer driven by my slowly but noticeably improving comprehension of French; I was chasing points. The priority shifted from learning to winning. The gamification of learning hijacked my motivation.

Well, no more. As of last week, I only use Duolingo as I originally intended: to take casual French lessons, to help improve, however slowly, my French comprehension. Or maybe, occasionally, check out a German or even Russian lesson, to help keep my (mediocre) knowledge of these two languages alive.

Still, Duolingo’s gamification trap is an intriguing lesson. I don’t blame them; it’s clever marketing, after all. But it is also a cautionary tale, a reminder of how easily our brains can lock onto the wrong objective, like a badly trained, overfitted neural network that maximizes its score on the training data instead of learning what we actually wanted it to learn. Perhaps we and our AI creations are not that different: we even share some failure modes.