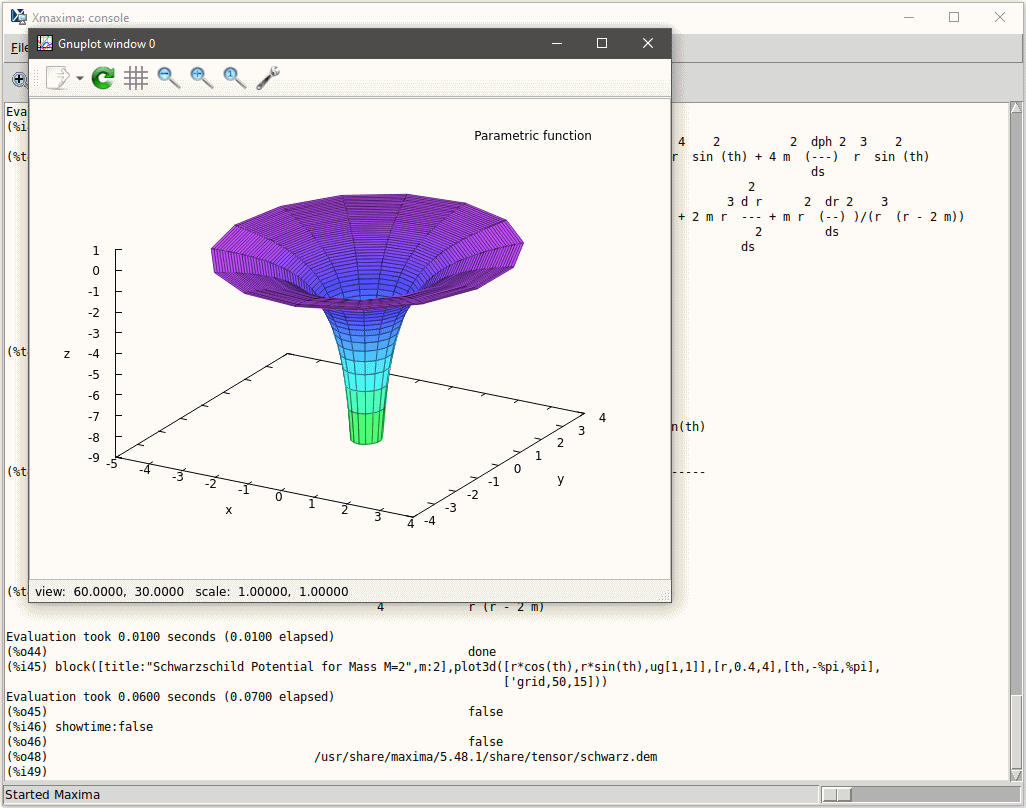

Just completed the release of Maxima 5.49.

I hope I did not mess anything up. Building and releasing a new version is always a bit stressful.

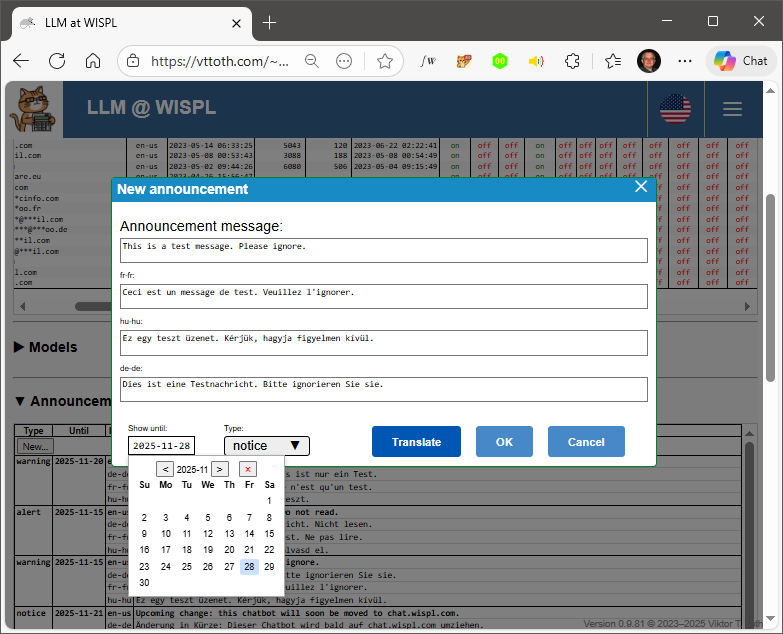

Behind every front-end there is a back-end. My WISPL.COM chatbot is no exception. It’s one thing to provide a nice chatbot experience to my select users. It’s another thing to be able to manage the system efficiently.

Sure, I can, and do, management tasks directly in the database, using SQL commands. But it’s inelegant and inconvenient. And just because I am the only admin does not mean I cannot make my own life easier by creating a more streamlined management experience.

Take announcements. The WISPL chatbot operates in four languages. Creating an announcement entails writing it in a primary language, translating it into the three other languages, and then posting the requisite records to the database. Doing it by hand is not hard, but it is a chore.

Well, not anymore. I just created a nice back-end UI for this purpose. By itself it’s no big deal of course, but it’s the first time the software itself uses a large language model for a targeted purpose.

Note the highlighted Translate button. It sends the English-language text to a local copy of Gemma, Google’s open-weights LLM. Gemma is small but very capable. Among other things, it can produce near-flawless translations not only into German or French but even into Hungarian.

This back-end also lets me manage WISPL chatbot users as well as the language models themselves. It shows system logs, too.

A few weeks ago I had an idea.

What if I implement a GPT? No, not something on the scale of ChatGPT, with many hundreds of billions of parameters, consuming countless terawatt-hours, training on a corpus that encompasses much of the world’s literature and most of the Internet.

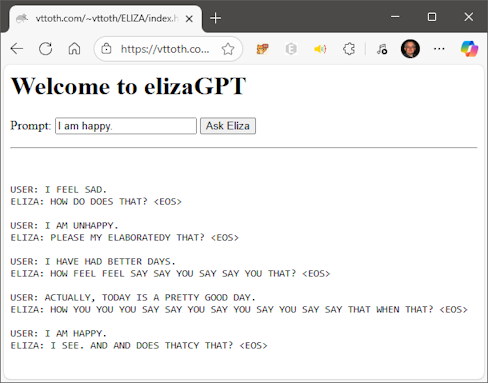

No, something far more modest. How about… a GPT that emulates the world’s first chatbot, Eliza?

Long story short (the long story will follow in due course on my Web site), I succeeded. I have built a GPT from scratch in C++, including training. I constructed a sensible (though far from perfect) training corpus of user prompts and Eliza responses. And over the course of roughly a week, using a consumer-grade GPU for hardware acceleration, I managed to train my smallest model.

No, don’t expect perfection. My little model does not have hundreds of billions of parameters. It does not even have millions of parameters. It is only a 38-thousand (!) parameter model.
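For a sense of scale, here is the usual parameter-count arithmetic for a small GPT-2-style decoder. The post does not give the model’s dimensions. The configuration used below (vocabulary size, context length, width, number of layers) is purely hypothetical, as are the architectural choices listed in the comment.

```c
/* Parameter count of a GPT-2-style decoder-only transformer.
 * Hypothetical assumptions (not the post's actual model): learned token
 * and position embeddings, LayerNorms with gain and bias, biased linear
 * layers, a 4x MLP expansion, and an output head tied to the token
 * embedding, so the head adds no parameters of its own. */
long gpt_param_count(long vocab, long ctx, long d, long layers)
{
    long embed = vocab * d + ctx * d;        /* token + position embeddings */
    long attn  = (3 * d * d + 3 * d)         /* fused Q, K, V projection     */
               + (d * d + d);                /* attention output projection  */
    long mlp   = (d * 4 * d + 4 * d)         /* expand d -> 4d               */
               + (4 * d * d + d);            /* project 4d -> d              */
    long norms = 2 * (2 * d);                /* two LayerNorms per block     */
    long block = attn + mlp + norms;         /* = 12 d^2 + 13 d              */
    return embed + layers * block + 2 * d;   /* + final LayerNorm            */
}
```

Consider a hypothetical 64-symbol vocabulary, a 64-token context, width 32 and two layers. That comes to 29,568 parameters, and a third layer brings it to 42,272. Either way the count lands in the tens of thousands, and most of it sits in the 12d² term of each block.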

Yet… it works. Sometimes its output is gibberish. But most of the time, the output is definitely Eliza-like.

The best part? The model is so small, its inference runtime works well when implemented in JavaScript, running in-browser.

And here is my first ever exchange with the JavaScript implementation, unfiltered and unedited.

No, I am not going to win awards with this chatbot, but the fact that it works at all, and that it successfully learned the basic Eliza-like behavior, is no small potatoes.

For what it’s worth, I monitored the training with a bit of homebrew near-real-time instrumentation. It let me keep an eye on key model parameters and intervene, adjusting learning rates, before the training could destabilize the model.
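The post does not describe the instrumentation or the exact intervention rule. As a purely hypothetical illustration of the general idea, the guard below keeps an exponential moving average of the loss. It cuts the learning rate whenever the current loss jumps well above that average. The struct, its fields, and the thresholds are all assumptions made for this sketch.

```c
/* Hypothetical loss-spike guard; not the author's instrumentation. */
typedef struct {
    double ema;      /* exponential moving average of the loss   */
    double beta;     /* smoothing factor, e.g. 0.9 to 0.99       */
    double spike;    /* spike threshold, as a multiple of the EMA */
    double decay;    /* factor applied to the LR on a spike       */
    int    started;  /* 0 until the first loss has been seen      */
} LossGuard;

/* Feed one loss value; returns the (possibly reduced) learning rate. */
double guard_step(LossGuard *g, double loss, double lr)
{
    if (!g->started) {               /* first value seeds the average */
        g->ema = loss;
        g->started = 1;
        return lr;
    }
    if (loss > g->spike * g->ema)
        lr *= g->decay;              /* back off before training diverges */
    else
        g->ema = g->beta * g->ema + (1.0 - g->beta) * loss;
    return lr;
}
```

The guard deliberately leaves spikes out of the moving average. That way, a single bad batch does not inflate the baseline that later losses are compared against.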

I am now training a roughly 10 times larger version. I do not yet know if that training will be successful. If it is, I expect its behavior will be more robust, with less gibberish and more Eliza-like responses.

In the meantime, I can now rightfully claim that I know what I am talking about… after all, I have a C++ implementation, demonstrably working, complete with backpropagation, by way of credentials.

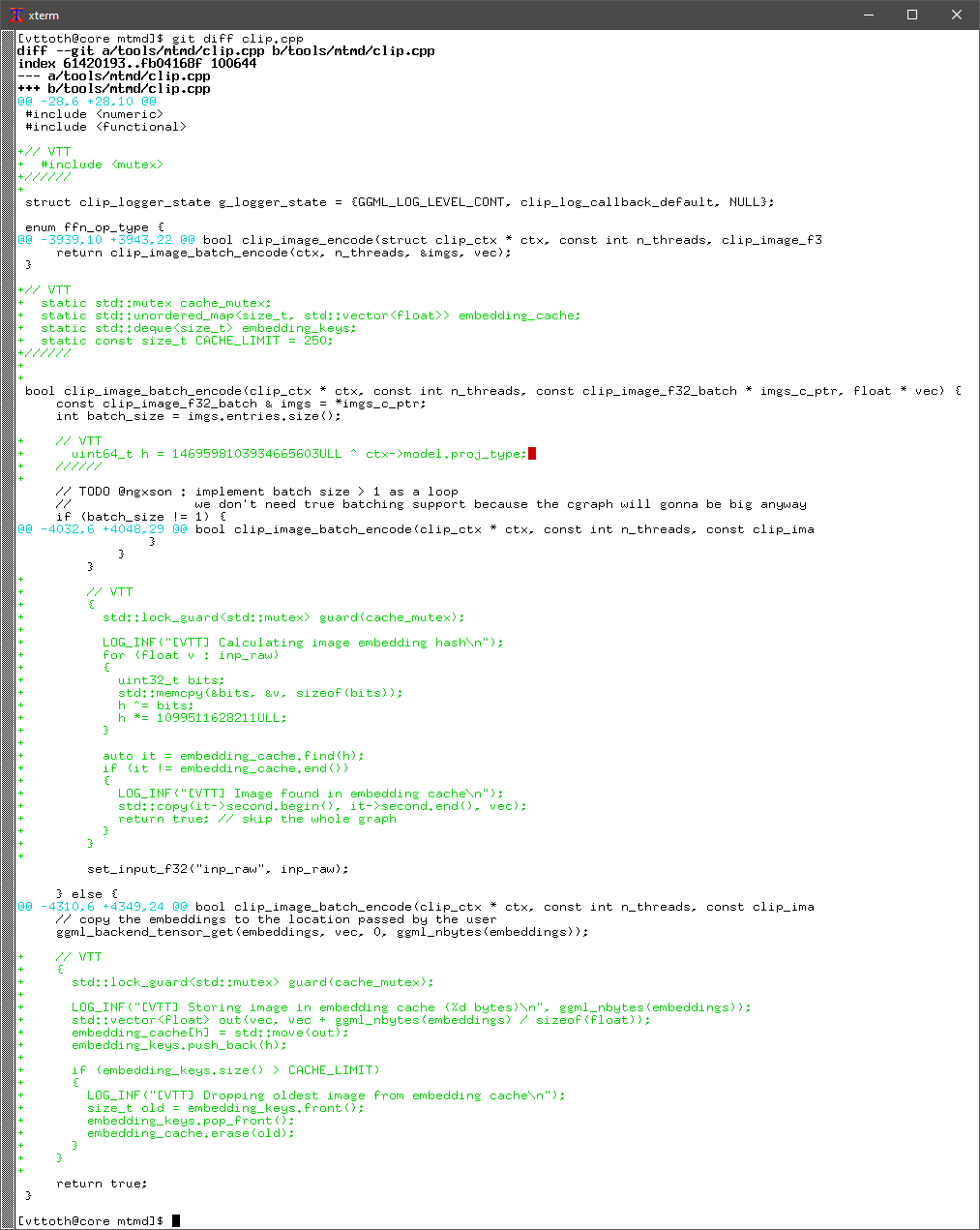

There is a wonderful tool out there that works with many of the published large language models and multimodal models: Llama.cpp, a pure C++ implementation of the inference engine to run models like Meta’s Llama or Google’s Gemma.

The C++ implementation is powerful. It allows a 12-billion-parameter model to run at speed even without GPU acceleration, emitting 3-4 tokens per second in the generation phase. That is seriously impressive.

There is one catch. Multimodal operation with images requires encoding each image into embeddings, which is often the most time-consuming part. A single image may take 45-60 seconds to encode. And in a multi-turn conversation, the image(s) are re-encoded at every turn, slowing down the conversation each time.

An obvious solution is to preserve the embeddings in a cache and avoid re-embedding images already cached. Well, this looked like a perfect opportunity to deep-dive into the Llama.cpp code base and make a surgical change. A perfect opportunity also to practice my (supposedly considerable) C++ skills, which I use less and less these days.
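The post does not show the actual llama.cpp change, and the sketch below does not use llama.cpp’s API. It only illustrates the general technique under stated assumptions:

- The cache is keyed on a hash of the raw image bytes.
- The expensive encoder runs only on a cache miss.

`encode_fn`, `get_embedding` and the dummy encoder are hypothetical names.

```c
#include <stdint.h>
#include <stdlib.h>

#define CACHE_SLOTS 16

/* 64-bit FNV-1a hash of the raw image bytes, used as the cache key. */
static uint64_t fnv1a64(const unsigned char *p, size_t n)
{
    uint64_t h = 1469598103934665603ULL;
    for (size_t i = 0; i < n; i++) {
        h ^= p[i];
        h *= 1099511628211ULL;
    }
    return h;
}

/* Hypothetical signature for the slow image encoder. */
typedef float *(*encode_fn)(const unsigned char *img, size_t n, size_t *dim);

typedef struct {
    uint64_t key;
    float   *embd;   /* NULL means the slot is empty */
    size_t   dim;
} CacheSlot;

static CacheSlot cache[CACHE_SLOTS];

/* Return the embedding for an image, encoding it only on a cache miss.
 * The returned pointer is owned by the cache and stays valid until its
 * slot is evicted (round-robin). A production version should also guard
 * against hash collisions, e.g. by comparing the image bytes. */
const float *get_embedding(const unsigned char *img, size_t n,
                           encode_fn encode, size_t *dim)
{
    static size_t next_victim = 0;
    uint64_t key = fnv1a64(img, n);

    for (size_t i = 0; i < CACHE_SLOTS; i++) {
        if (cache[i].embd && cache[i].key == key) {
            *dim = cache[i].dim;
            return cache[i].embd;            /* hit: no re-encoding */
        }
    }
    CacheSlot *s = &cache[next_victim];
    next_victim = (next_victim + 1) % CACHE_SLOTS;
    free(s->embd);                           /* evict; free(NULL) is a no-op */
    s->embd = encode(img, n, &s->dim);
    s->key = key;
    *dim = s->dim;
    return s->embd;
}

/* Dummy encoder for trying the cache out: counts calls, returns 4 floats. */
static int dummy_calls = 0;
float *dummy_encode(const unsigned char *img, size_t n, size_t *dim)
{
    float *v = malloc(4 * sizeof *v);
    *dim = 0;
    if (!v)
        return NULL;
    for (size_t i = 0; i < 4; i++)
        v[i] = n ? (float)img[i % n] : 0.0f;
    *dim = 4;
    dummy_calls++;
    return v;
}
```

In a multi-turn conversation, every turn after the first finds the image already in the cache. Only a genuinely new image pays the encoding cost.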

Well, what can I say? I did it and it works.

I can now converse with Gemma, even with image content, and it feels much snappier.

Yet another thing I just had to do: upgrade my server to PHP 8, because PHP 7 (though still supported as a legacy version by Oracle Linux 8) was increasingly incompatible with upcoming versions of the content management code that I use.

So far so good… expect glitches, of course.

Much as I appreciate the effort and value we all receive from open source projects, I think I shall just keep it to myself what I think of forced upgrades and broken backwards compatibility for reasons of code purity.

Just a maintenance release but still: I completed the process to release Maxima 5.48.1.

Hope it will serve the community well. I know I’ll be using it a lot.

I just completed the process to release Maxima 5.48.

The new version introduces several noteworthy enhancements for symbolic computation, with improvements in performance, functionality, and user experience.

Highlights:

– Unicode-enabled output (when supported by the Lisp compiler)

– Numerous performance improvements across core routines

– New package for symbolic radical denesting

– New package for inferring closed-form expressions from sequences

– New package for simplification of gamma functions

– Resolution of more than 150 tickets, spanning both long-standing and recent bugs

Developed in Common Lisp, Maxima remains a reliable and customizable tool for research, education, science, and engineering.

To install, explore, or contribute: https://maxima.sourceforge.io

I have added some new apps to my Web site. One, a bit serious, the other, less so.

The serious one: a technology demonstration, showing that it is not black magic to use a Web camera and try to extract from its feed an estimate of a person’s heart rate and (less reliably) respiratory rate. The measurement is not perfect, of course, but it works surprisingly well at least some of the time.

Meanwhile, I also resurrected an old project of mine, one I initially did in 1993: itself a resurrection of an even older game from back in the heroic 8-bit days. I was inspired by a DOS version on Usenet, itself derived from an earlier X11 version; it became my first “full-featured” Visual C++ project, originally developed for Windows 3.1. A 32-bit version followed two years later, and that was the basis of my current, Web-based reimplementation. I called my version Rubble Rush, to avoid infringing on the original’s (still extant, as far as I know) trademark.

These implementations also showcase how easy it is, using modern JavaScript, to develop solutions with real-time interactivity, also using real-time media streams to boot.

A friend of mine challenged me. After telling him how I was able to implement some decent neural network solutions with the help of LLMs, he asked: Could the LLM write a neural network example in Commodore 64 BASIC?

You betcha.

Well, it took a few attempts: there were some syntax issues and some oversimplifications. So eventually I had the idea of asking the LLM to write the example in Python first and then use that as a reference implementation for the C64 version. That went well. Here’s the result:

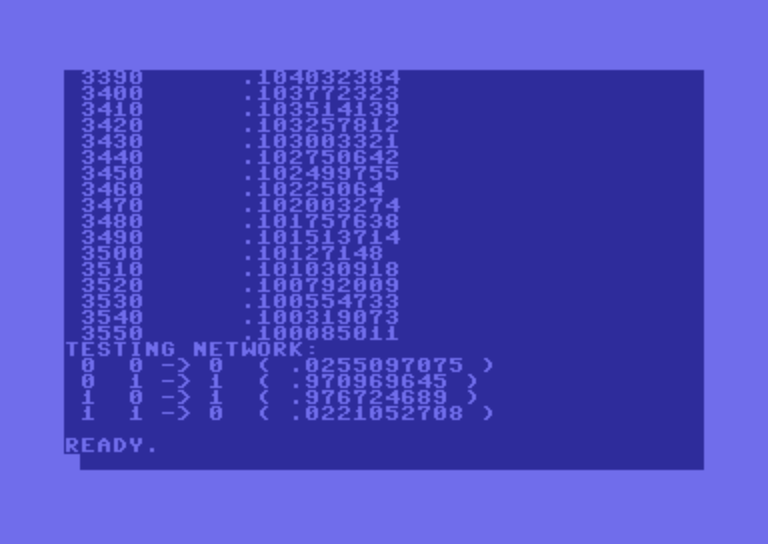

As this screenshot shows, the program was able to learn the behavior of an XOR gate. That is the simplest problem that requires a hidden layer of perceptrons and, as such, a precursor to modern “deep learning” solutions.

I was able to run this test on Krisztián Tóth’s (no relation) excellent C64 emulator, which has the distinguishing feature of reliable copy-paste, making it possible to enter long BASIC programs without having to retype them or somehow transfer them to a VIC-1541 floppy image first.

In any case, this is the program that resulted from my little collaboration with the Claude 3.7 Sonnet language model:

```basic
10 REM NEURAL NETWORK FOR XOR PROBLEM
20 REM BASED ON WORKING PYTHON IMPLEMENTATION
100 REM INITIALIZE VARIABLES
110 DIM X(3,1) : REM INPUT PATTERNS
120 DIM Y(3) : REM EXPECTED OUTPUTS
130 DIM W1(1,1) : REM WEIGHTS: INPUT TO HIDDEN
140 DIM B1(1) : REM BIAS: HIDDEN LAYER
150 DIM W2(1) : REM WEIGHTS: HIDDEN TO OUTPUT
160 DIM H(1) : REM HIDDEN LAYER OUTPUTS
170 DIM D1(1,1) : REM PREVIOUS DELTA FOR W1
180 DIM B2 : REM BIAS: OUTPUT LAYER
190 DIM D2(1) : REM PREVIOUS DELTA FOR W2
200 DIM DB1(1) : REM PREVIOUS DELTA FOR B1
210 DB2 = 0 : REM PREVIOUS DELTA FOR B2
220 LR = 0.5 : REM LEARNING RATE
230 M = 0.9 : REM MOMENTUM
300 REM SETUP TRAINING DATA (XOR PROBLEM)
310 X(0,0)=0 : X(0,1)=0 : Y(0)=0
320 X(1,0)=0 : X(1,1)=1 : Y(1)=1
330 X(2,0)=1 : X(2,1)=0 : Y(2)=1
340 X(3,0)=1 : X(3,1)=1 : Y(3)=0
400 REM INITIALIZE WEIGHTS RANDOMLY
410 FOR I=0 TO 1
420 FOR J=0 TO 1
430 W1(I,J) = RND(1)-0.5
440 NEXT J
450 B1(I) = RND(1)-0.5
460 W2(I) = RND(1)-0.5
470 NEXT I
480 B2 = RND(1)-0.5
510 REM INITIALIZE MOMENTUM TERMS TO ZERO
520 FOR I=0 TO 1
530 FOR J=0 TO 1
540 D1(I,J) = 0
550 NEXT J
560 D2(I) = 0
570 DB1(I) = 0
580 NEXT I
590 DB2 = 0
600 REM TRAINING LOOP
610 PRINT "TRAINING NEURAL NETWORK..."
620 PRINT "EP","ER"
630 FOR E = 1 TO 5000
640 ER = 0
650 FOR P = 0 TO 3
660 GOSUB 1000 : REM FORWARD PASS
670 GOSUB 2000 : REM BACKWARD PASS
680 ER = ER + ABS(O-Y(P))
690 NEXT P
700 IF (E/10) = INT(E/10) THEN PRINT E,ER
710 IF ER < 0.1 THEN E = 5000
720 NEXT E
800 REM TEST NETWORK
810 PRINT "TESTING NETWORK:"
820 FOR P = 0 TO 3
830 GOSUB 1000 : REM FORWARD PASS
840 PRINT X(P,0);X(P,1);"->"; INT(O+0.5);" (";O;")"
850 NEXT P
860 END
1000 REM FORWARD PASS SUBROUTINE
1010 REM CALCULATE HIDDEN LAYER
1020 FOR I = 0 TO 1
1030 S = 0
1040 FOR J = 0 TO 1
1050 S = S + X(P,J) * W1(J,I)
1060 NEXT J
1070 S = S + B1(I)
1080 H(I) = 1/(1+EXP(-S))
1090 NEXT I
1100 REM CALCULATE OUTPUT
1110 S = 0
1120 FOR I = 0 TO 1
1130 S = S + H(I) * W2(I)
1140 NEXT I
1150 S = S + B2
1160 O = 1/(1+EXP(-S))
1170 RETURN
2000 REM BACKWARD PASS SUBROUTINE
2010 REM OUTPUT LAYER ERROR
2020 DO = (Y(P)-O) * O * (1-O)
2030 REM UPDATE OUTPUT WEIGHTS WITH MOMENTUM
2040 FOR I = 0 TO 1
2050 DW = LR * DO * H(I)
2060 W2(I) = W2(I) + DW + M * D2(I)
2070 D2(I) = DW
2080 NEXT I
2090 DW = LR * DO
2100 B2 = B2 + DW + M * DB2
2110 DB2 = DW
2120 REM HIDDEN LAYER ERROR AND WEIGHT UPDATE
2130 FOR I = 0 TO 1
2140 DH = H(I) * (1-H(I)) * DO * W2(I)
2150 FOR J = 0 TO 1
2160 DW = LR * DH * X(P,J)
2170 W1(J,I) = W1(J,I) + DW + M * D1(J,I)
2180 D1(J,I) = DW
2190 NEXT J
2200 DW = LR * DH
2210 B1(I) = B1(I) + DW + M * DB1(I)
2220 DB1(I) = DW
2230 NEXT I
2240 RETURN
```

The one proverbial fly in the ointment is that it took about two hours for the network to be trained. The Python implementation? It runs to completion in about a second.

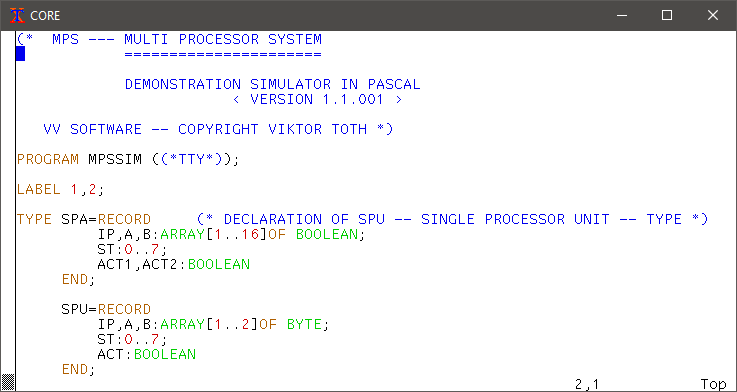

Here are the first few lines of a program I wrote over 40 years ago, an early attempt to model a massively parallel processor architecture.

No, the program is nothing special. And there are much better ways to create practical multiprocessor/multicore systems. The reason why I copy-pasted this image here has to do with something else: the fact that it was written in the Pascal programming language.

Pascal was just one of the many creations of one of the giants of computer science, Niklaus Wirth.

I learned yesterday that Wirth, who was born back in 1934, passed away on January 1.

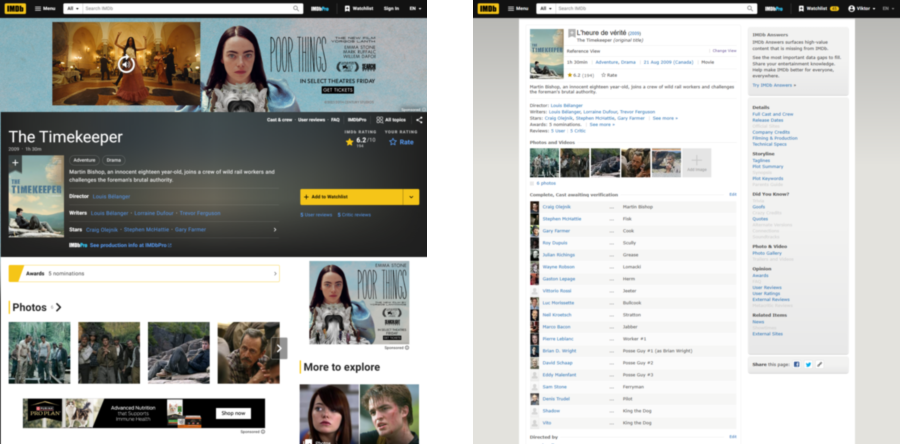

I wanted to check something on IMDB, so I looked up the film and was confronted by an unfamiliar user interface. Unfamiliar is okay, but the UI I saw is badly organized. Key information (e.g., year of release, country of origin) is difficult to find, and oversized images take up space at the expense of useful content. And no, I don’t mean the ads; I am comfortable with relevant, respectful ads. It’s the fact that a lot less information is presented, taking up a lot more space.

Fortunately, in the case of IMDB I was able to restore a much more useful design by logging in to my IMDB account, going to account settings, and making sure that the Contributors checkbox was checked. Phew. So much more (SO MUCH MORE) readable, digestible at a glance. Yes, it’s smaller print. Of course. But the information is much better organized, the appearance is more consistent (no widely different font sizes) and the page is dominated by information, not entertainment in the form of images.

IMDB is not the only example. Recently, after giving it a valiant try, I purposefully downgraded my favorite Android e-mail software, as its new user interface was such a letdown. At least I had the foresight to save the APK of the old version. So I was able to install it and then make sure in the Play Store settings that it would not be upgraded. Not that I am comfortable not upgrading software, but in this case, it was worth the risk.

All this reminds me of a recent discussion with a friend who works as a software professional himself: he is fed up to his eyeballs with the pervasive “Agile” fad at his workplace, with its mandatory “Scrum” meetings and whatnot. Oh, the blessings of being an independent developer: I could tell him that if a client mentioned “Agile” more than once, it’d be time for me to “Scrum” the hell out of there…

OK, I hope it’s not just grumpy ole’ complaining on my part. But seriously, these trendy fads are not helping. Software becomes less useful. Project management culture reinvents the wheel, with buzzwords that no doubt bring shady consultants a lot more money than I ever made actually building things. (I have an almost 50-year-old Hungarian-language book on project management on my shelf that discusses iterative management in depth.) (Not complaining. I purposefully abandoned that direction in my life 30 years ago when I quietly walked out of a meeting, not having the stomach anymore to wear a $1000 suit and nod wisely while listening to eloquent BS.) The result is all too often a badly managed project, with a management culture that is no less rigid than the old culture (no fads can overcome management incompetence). The difference is less documentation, less control, less consistent system behavior, more undocumented dependencies, and compromised security. UI design has fads that change with the seasons, united only by results that are about as practical as a Paris fashion designer’s latest collection of “work attire”.

OK, I would be lying if I said that only bad things come out of change. Now that I use AI in software development, not a day goes by without the AI teaching me something I did not know, including tools, language features and whatnot that can help improve the user experience. But it would be so nice if we didn’t take three steps back for every four steps forward.

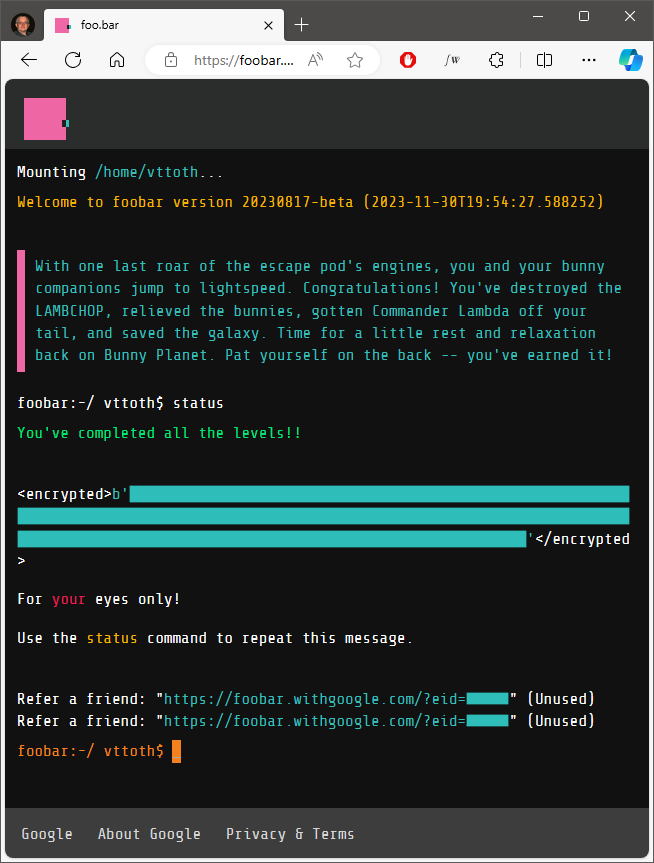

So the other day, as I was doing a Google Search (can’t exactly remember what it was that I was searching for but it was machine learning related), up pops this invitation to participate in a challenge.

Turned out to be the Google Foobar challenge, Google’s secret recruiting tool. (Its existence is not really a secret, so I am not really revealing any great secrets here.)

Though I have no plans to become a Google employee (and I doubt they’re interested in me on account of my age anyway), I decided to go through the challenge. Well, it’s hard to say no to a challenge, and it was an opportunity to practice my Python skills (which need a lot of practice, because I have not yet used Python that much).

Well, I did it. It was fun.

More importantly, I enjoyed it just as much as I enjoyed similar challenges as a math geek in my early teens. And if that’s not a gift from life, I don’t know what it is.

And yes, I am now much better at Python than I was just a few days ago. I certainly appreciate why the language has become popular, though I can also see its non-trivial pitfalls.

One of the many unfulfilled, dare I say unfulfillable promises of the tech world (or at least, some of the tech world’s promoters) is “low code”. The idea that with the advent of AI and visual programming tools, anyone can write code.

Recall how medieval scribes prepared those beautiful codices, the illuminated manuscripts. That profession eventually vanished, replaced by the printing press and, later, the typewriter. But what if someone suggested that with the advent of the typewriter, anyone could now write high literature? Laughable, isn’t it? There is so much more to writing than the act of making nicely formed letters appear on a sheet of paper.

Software development is just like that. It is about so much more than the syntax of a programming language. Just think of the complete life cycle of a software development project. Even small, informal in-house projects follow this model:

- A requirement is identified.
- A conceptual solution is formulated (dare I say, designed).
- The technology is selected.
- Problems are worked out, either in advance or as they are encountered during testing.
- The code is implemented and tested, bugs are fixed, and functionality is evaluated.
- If the code works, it is put into production, but it still needs to be supported: bugs need to be fixed, and compatibility with other systems (including the operating system on which it runs) must be maintained.
- If it is a public-facing app, its security must be monitored.
- Business continuity must be maintained even if the software fails or there are unexpected downtimes.

These are all important aspects of software development, and they have very little to do with the act of coding.

In recent months, I benefited a great deal from AI. Claude and, perhaps especially, GPT-4 proved to be tremendous productivity tools of almost unbelievable efficiency. Instead of spending hours on Google searches or wading through Stack Exchange posts, I could just consult Claude and get an instant answer clarifying, e.g., the calling conventions of a system function. When I was struggling to come up with a sensible way to solve a problem, I could just ask GPT-4 for suggestions. GPT-4 told me how to address the problem at hand, often with helpful code snippets illustrating the answer. It even had the audacity to tell me when my approach was suboptimal and to recommend a better solution.

And yes, I could ask these little robot friends of ours to write code for me, which they did.

But this was when things took a really surprising turn. On several occasions, Claude or GPT not only offered solutions but offered inspired solutions. Elegant solutions. Except that the code they wrote had bugs. Sometimes trivial bugs, like failing to initialize a variable or assigning to a variable that was declared as a constant. These are the kind of routine mistakes experienced programmers make, and they are easily fixed. When the first draft of the code is run through the compiler or interpreter, these simple buglets are readily identified and corrected.

But this is the exact opposite of the “low code” promise. Low code was supposed to mean a world in which anyone can write software using AI-assisted visual tools. In reality, those tools do replace armies of inexperienced, entry-level programmers but experience is still required to design systems, break them down into sensible functional components, create specifications (even if it is in the form of a well-crafted prompt sent to GPT-4), evaluate solutions, perform integration and testing, and last but not least, fix the bugs.

What worries me is the fact that tomorrow’s experienced software architects will have to come from the pool of today’s inexperienced entry-level programmers. If we eliminate the market for entry-level programmers, who will serve as software architects 20, 30 years down the line?

Never mind. By then, chances are, AI will be doing it all. Where that leaves us humans, I don’t know, but we’re definitely witnessing the birth of a brand new era, and not just in software development.

For the longest time as developers, we were taught not to reinvent the wheel. “There is a library for that,” we were told, so instead of implementing our own solutions for common, recurring tasks, we just imported and linked the library in question.

And sure, it made a lot of sense. Countless hours of development time were saved. Projects were completed on time, within budget. And once the system worked, it, well, worked. So long as there was a need to maintain the software, we just kept the old development tools around for the occasional bug fix and recompile. I remember keeping a Visual Studio 6.0 configuration alive well into the 2010s, to make sure that I could offer support to a long-time customer.

But then… then came the Internet. Which implied several monumental paradigm shifts. One of the most fundamental among them is that a lot of software development no longer targeted cooperating users in a closed environment. Rather, the software was exposed to the public and, well, let’s face it, not all members of the public have the best intentions in mind when they interact with our systems.

Which means that third-party code turned from an asset into a substantial liability. Why? Because of potential security issues. Using old versions of third-party libraries in public-facing systems is an invitation for disaster. Those third-party components must be kept up-to-date. Except…

For a while, there was a great need for third-party code in Web development. HTML4 had limitations, and browser implementations varied wildly. Widely used third-party libraries like jQuery made it possible to prepare code that ran well on all major platforms. But this really is not the case anymore. “Out of the box” HTML5, CSS3 and modern JavaScript are tremendously capable tools and the implementation across major browsers is quite consistent these days, with only minor idiosyncrasies that can be easily dealt with after a modest amount of testing.

So really, my advice these days to anyone developing a new Web application is to avoid third-party libraries when possible. Especially if the application is intended to have a long life-cycle. Third-party code may cut down development time slightly, but the long-term costs may far exceed those savings. And there will still be more than enough to do just to keep up with other changes: witness the changes over time that occurred in browser security models, breaking once functioning Web applications, or the changes between, say, PHP5 and PHP7.

And of course there are still valid, legitimate use cases for specialized third-party libraries. For instance, in a recent project I used both MathJax (for rendering mathematical formulas) and markdown (for rendering displayed code). Developing something like that from scratch is just not an option.

Why am I harping on all this? I am currently facing a minor crisis of sorts (OK, that may be too strong a word) as I am trying to upgrade my Web sites from Joomla 3 to Joomla 4. Serves me right, using a third-party content management system instead of writing my own HTML! Worse yet, I used some once popular extensions with Joomla, extensions that are no longer supported, and which are wholly incompatible with Joomla 4. Dealing with this is difficult and time-consuming.

It would be a lot more time-consuming were it not for the help I get from our LLM AI friends. Thankfully, these tools, GPT-4 in particular, are immensely helpful. E.g., one third-party Joomla extension I used offered a nice way to present images as clickable thumbnails. This extension is now badly broken. However, GPT-4 has already helped me write a clean, functional alternative that I’ll be able to use. That way, I avoid having to redesign some important pages on my site.

Don’t look a gift horse in the mouth, they say, so I will not question how, or why, just express my happiness that my frustration is over: Redmine, the software package that I use internally for project management, works again.

It all began with an unpleasant but unavoidable upgrade of the MariaDB database from the ancient version that is part of the CentOS distribution to a more recent one. (Which, in turn, is needed to upgrade my content management software on some of my Web sites.)

Everything worked after this (planned, reasonably well pre-tested) upgrade except for Redmine.

Redmine is beautiful, very useful, but also very frustrating to install and manage. It uses Ruby on Rails, a software environment that… OK, let me not go there. I’ll keep my opinion to myself.

I spent countless hours yesterday, to no avail. The Redmine system refused to start. I installed, reinstalled, configured, reconfigured, uninstalled, reinstalled… Redmine, Ruby, its various management tools, you name it. Nothing did the trick. I gave up long after midnight.

I dreaded the moment today when I’d be resuming that thankless, frustrating exercise with no assured outcome. But I need Redmine. I have too much information in that system that I cannot afford to lose. So eventually I rolled up my sleeves (literally), opened the browser tab that had the link, and hit F5 to refresh the page. I expected the same error message that I’d seen before to reappear.

Instead… Redmine came up in all its glory, with all my existing project data intact. Everything works.

I was so surprised that I almost felt physically ill. A bit like this Midjourney cat, upon receiving an unexpected, very welcome gift.

Don’t look a gift horse in the mouth, they say, so I am not complaining. But I’d still like to know what exactly happened, why things started to work all of a sudden. I told my beautiful wife to imagine leaving a half-finished knitted sweater in her room one night. Then she comes back the next morning and finds a beautifully finished sweater there.

My mind, for now, is in a deeply boggled state. I honestly don’t know how or why it happened. But I am a very happy cat tonight.

A footnote: After I wrote the above, late, late, late at night, all of a sudden Redmine failed again, with a different error. I was ready to tear my hair out. But I was able to fix the problem. The likeliest cause as far as I could determine is that although the Redmine site had the correct Ruby version identified, a default setting specified an older, incompatible version of Ruby. It was fortunate that I was able to fix it, otherwise chances are I’d have spent a sleepless night trying.

I cannot teach GPT-4 how to play chess. Conceptualizing a chessboard and making a move are beyond its capabilities.

However, it was able to write (with minimal guidance from me) nice code to display and update a chessboard. That is quite impressive in its own right. It took several tries to get the board right, but it did succeed in the end.
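The post doesn’t show GPT-4’s code. As a hypothetical sketch of the display side, here is a routine built on FEN (Forsyth-Edwards Notation, a standard text encoding of chess positions). It expands a FEN string’s piece-placement field into an 8x8 array and prints that array as text. Uppercase letters are White and lowercase letters are Black. The function names are made up for this example.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Parse the piece-placement field of a FEN string into board[rank][file],
 * where rank 0 is the 8th rank (Black's back rank) and '.' marks an empty
 * square. Returns 0 on success, -1 on malformed input. */
int fen_to_board(const char *fen, char board[8][8])
{
    int rank = 0, file = 0;
    for (const char *c = fen; *c && *c != ' '; c++) {
        if (*c == '/') {
            if (file != 8 || rank == 7)
                return -1;               /* short rank, or too many ranks */
            rank++;
            file = 0;
        } else if (isdigit((unsigned char)*c)) {
            int n = *c - '0';            /* run of n empty squares */
            if (n < 1 || file + n > 8)
                return -1;
            while (n-- > 0)
                board[rank][file++] = '.';
        } else if (strchr("pnbrqkPNBRQK", *c) != NULL) {
            if (file > 7)
                return -1;
            board[rank][file++] = *c;
        } else {
            return -1;                   /* unknown character */
        }
    }
    return (rank == 7 && file == 8) ? 0 : -1;
}

/* Print the board with rank numbers on the left and files along the bottom. */
void print_board(char board[8][8])
{
    for (int r = 0; r < 8; r++) {
        printf("%d ", 8 - r);
        for (int f = 0; f < 8; f++)
            printf(" %c", board[r][f]);
        putchar('\n');
    }
    printf("   a b c d e f g h\n");
}
```

Try it on the position after 1. e4, `rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq e3 0 1`. Parsing it and calling `print_board()` shows the pawn on e4. For an ordinary move, updating the board amounts to moving one character within the array.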

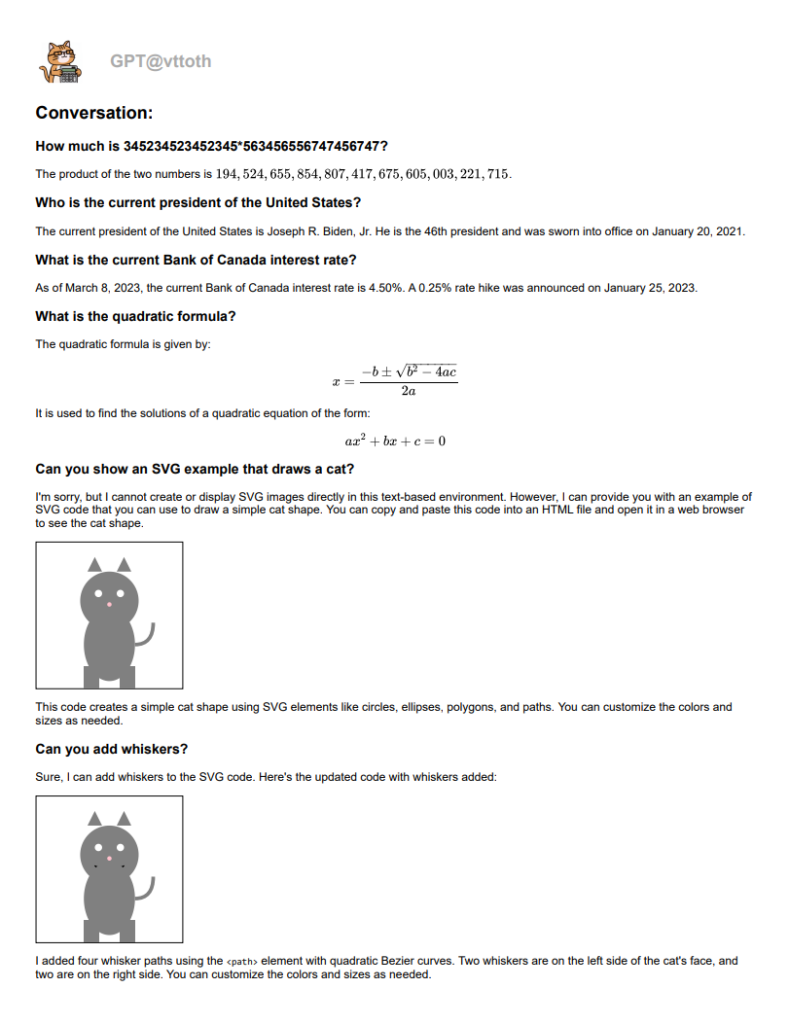

So here is my latest conversation with GPT-4 using my custom user interface. The important features of this implementation are the following:

I was able to achieve this level of integration even without plugin-level access, simply by clever “prompt engineering” to ensure that, most of the time, GPT uses the provided tools effectively. For this, GPT-4 seems essential; GPT-3.5 is not really able to grasp the notion of using external tools before constructing its answers.

By way of example, when GPT was asked about the current Bank of Canada interest rate, what happened in the background was the following exchange:

User: What is the current Bank of Canada interest rate?

GPT: SEARCH(“current Bank of Canada interest rate”)

User: Snippet 1: Mar 8, 2023 … The current Bank of Canada interest rate sits at 4.50%, with a 0.25% rate hike announced on January 25, 2023.

Snippet 2: See the Current Bank of Canada Interest Rate graphs at this page. You will read in the news about this rate when the Bank of Canada makes a rate …

Snippet 3: Find answers about questions you may have about the Bank of Canada. What is the current bank of Canada interest rate?

GPT: As of March 8, 2023, the current Bank of Canada interest rate is 4.50%. A 0.25% rate hike was announced on January 25, 2023.

The prompting that I use can of course be improved. The behavior of GPT-4 is not always consistent: sometimes it fails to make use of the computer algebra result or attempts to invoke computer algebra in the wrong place. I suspect that the “right” solution will have to wait until I have plugin-level developer access. But I am very happy that I was able to accomplish this already. In particular, access to Google and the computer algebra tool is completely automated in the background, requiring no user action.
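The orchestration loop behind the exchange above can be sketched roughly as follows:

1. Check each model reply for a call of the form SEARCH("query").
2. If one is present, run the search and send the snippets back as the next user message.
3. Otherwise, show the reply to the user.

Only the parsing step is shown here. The function name is hypothetical, and the code assumes straight ASCII double quotes around the query.

```c
#include <string.h>

/* Look for SEARCH("...") in a model reply and copy the query into out.
 * Returns 1 if a complete call was found and fit in out, 0 otherwise. */
int extract_search_query(const char *reply, char *out, size_t out_size)
{
    static const char prefix[] = "SEARCH(\"";
    const char *start = strstr(reply, prefix);
    if (!start)
        return 0;                        /* no tool call: a final answer */
    start += strlen(prefix);
    const char *end = strstr(start, "\")");
    if (!end || out_size == 0)
        return 0;                        /* unterminated call */
    size_t len = (size_t)(end - start);
    if (len >= out_size)
        return 0;                        /* query too long for the buffer */
    memcpy(out, start, len);
    out[len] = '\0';
    return 1;
}
```

If the function returns 1, the query would go to the search back end and the resulting snippets back to the model. A computer algebra call could be recognized the same way with a different prefix.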

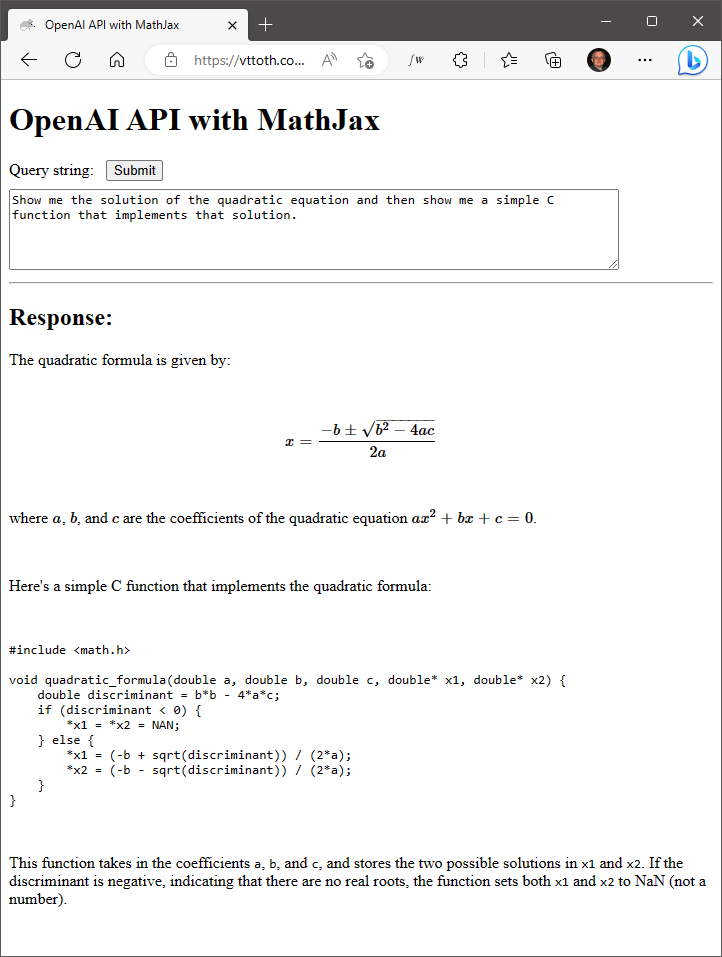

Today, with ChatGPT’s help, I finally managed to get past the initial hurdles and build some nice code making use of the OpenAI API.

I am still at the beginning of this learning curve, but I am having fun, and I now have a useful UI that can produce both nicely formatted equations and well-formatted code. Yippee!

More than 20 years ago, I wrote a program that showed what Mars would look like if it had oceans.

I wrote it originally in C++, but then ported it to Java and turned it into a Web application.

That was a long time ago. Unfortunately, browsers no longer support Java applets for security reasons. So my beautiful applet was not working anymore.

Today, I finally spent some time reimplementing the applet in plain JavaScript. It’s working again! (Apart from some minor cosmetics.) And it has data sets for Mars, Earth, Venus and the Moon as well.

I really should stop messing with ChatGPT but…

I asked it some more physics questions and got moderately mediocre answers. For instance, when I asked it about the conformal group in four spacetime dimensions, it described it as consisting of the Lorentz-Poincaré group plus dilations. Many physicists would say the same thing, but this is an incomplete description. It leaves out the most interesting bit, the so-called special conformal group. When I asked it to describe the special conformal group, it offered a decent answer. It did mention inversion. What it failed to mention is that a special conformal transformation really is an inversion with respect to the unit circle, followed by a translation and a second inversion. As such, it introduces four additional degrees of freedom.
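For reference, here is the standard form of a special conformal transformation with a constant four-vector parameter $b^\mu$. It is built up exactly as the composition described above: inversion, translation by $-b^\mu$, and a second inversion. Here $x^2 = x_\mu x^\mu$ and $b\cdot x = b_\mu x^\mu$ are taken with the Minkowski metric:

$$
x^\mu \;\to\; \frac{x^\mu}{x^2} \;\to\; \frac{x^\mu}{x^2} - b^\mu \;\to\; x'^\mu = \frac{x^\mu - b^\mu x^2}{1 - 2\,b\cdot x + b^2 x^2}
$$

The four components of $b^\mu$ are the four additional parameters. Together with the ten of the Poincaré group and the single dilation, they make up the 15 parameters of the conformal group of four-dimensional spacetime.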

But then, I went back to asking programming questions. I thought by now that I had a good sense of the algorithm’s limitations. So I dug up an old utility I once wrote and asked ChatGPT about the code.

Can you tell me what the following C-language program does under Linux?

```c
#include <errno.h>
#include <signal.h>
#include <stdlib.h>
#include <stdio.h>

void main(void)
{
    int i, j, k, l;
    for (i = 0; i <= 32767; i++)
    {
        if (kill(i, 0) == 0 || errno == EPERM)
        {
            char s[120], f[20], c[100];
            FILE *p;
            sprintf(s, "%6d ", i);
            sprintf(f, "/proc/%d/status", i);
            if (p = fopen(f, "r"))
            {
                l = fread(c, 1, sizeof(c), p);
                fclose(p);
                for (j = 0; j < l && c[j] != '\t'; j++);
                for (k = 7, j++; j < l && c[j] != '\n'; j++) s[k++] = c[j];
                s[k] = '\0';
            }
            printf("%s\n", s);
        }
    }
}
```

This C-language program is a simple utility that lists the active processes on a Linux system. When run, the program will iterate through all possible process IDs from 0 to 32767, and check if a process with that ID exists on the system. If a process with the given ID exists, the program will print information about that process, including its process ID, process name, and other details.

To do this, the program uses the kill function from the signal.h library to check if a process with a given ID exists. The kill function is typically used to send signals to processes, but it can also be used to check if a process with a given ID exists by calling it with a signal value of 0.

If the kill function returns 0 or sets the errno value to EPERM, this indicates that a process with the given ID exists on the system. In this case, the program will use the sprintf function to create a string containing the process ID, and then open the /proc/[pid]/status file, where [pid] is the process ID, using the fopen function.

The status file contains information about the process, including its name and other details. The program will read the contents of this file using the fread function and extract the process name from the file using string manipulation. The extracted process name will be appended to the string containing the process ID, and the resulting string will be printed to the console using the printf function.

Overall, this program is a simple example of how to list the active processes on a Linux system using the kill and /proc functions. It is worth noting that this program is not intended for use in a production environment, as it is not robust or efficient. It is intended only as a simple demonstration of the concept.
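ChatGPT’s caveat is fair, for two reasons:

- Probing every PID from 0 to 32767 with `kill(pid, 0)` misses processes whose PIDs exceed 32767. Linux’s limit is configurable through /proc/sys/kernel/pid_max, and on many current systems it is much higher.
- The scan costs tens of thousands of system calls.

Below is a sketch of the more conventional approach, with hypothetical function names. It reads the numeric directory names under /proc. Like the original utility, it takes each process name from the Name: line of /proc/[pid]/status.

```c
#include <ctype.h>
#include <dirent.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* Call cb(pid, name, ctx) for every process listed in /proc.
 * Returns the number of processes visited, or -1 if /proc can't be read. */
int for_each_process(void (*cb)(int pid, const char *name, void *ctx), void *ctx)
{
    DIR *dir = opendir("/proc");
    if (!dir)
        return -1;
    int count = 0;
    struct dirent *ent;
    while ((ent = readdir(dir)) != NULL) {
        if (!isdigit((unsigned char)ent->d_name[0]))
            continue;                       /* skip non-PID entries */
        char path[64], line[256], name[256] = "";
        snprintf(path, sizeof path, "/proc/%s/status", ent->d_name);
        FILE *f = fopen(path, "r");
        if (!f)
            continue;                       /* process exited meanwhile */
        while (fgets(line, sizeof line, f)) {
            if (strncmp(line, "Name:", 5) == 0) {
                sscanf(line + 5, " %255[^\n]", name);
                break;
            }
        }
        fclose(f);
        cb(atoi(ent->d_name), name, ctx);
        count++;
    }
    closedir(dir);
    return count;
}

/* Example callback: note whether the calling process itself shows up. */
static void note_self(int pid, const char *name, void *ctx)
{
    (void)name;
    if (pid == (int)getpid())
        *(int *)ctx = 1;
}
```

To reproduce the original utility’s output, pass a callback that prints `"%6d %s\n"` with the PID and name.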

OK, this isn’t even funny anymore. But surely, a program that actually prints two-dimensional ASCII graphics using an obscure algorithm will leave the AI baffled?

What does the following program do?

```c
#include <stdio.h>
#include <string.h>

#define R 20

void main(void)
{
    int x, y, d, dA, dB;
    int i;
    char B[2*R+1][2*R+2];

    memset(B, ' ', sizeof(B));
    for (i = 0; i < 2*R+1; i++) B[i][2*R+1] = 0;
    x = 0;
    y = R;
    d = 5 - (R<<2);
    dA = 12;
    dB = 20 - (R<<3);
    while (x<=y)
    {
        B[R+x][R+y] = B[R+x][R-y] = B[R-x][R+y] = B[R-x][R-y] =
        B[R+y][R+x] = B[R+y][R-x] = B[R-y][R+x] = B[R-y][R-x] = 'X';
        if (d<0)
        {
            d += dA;
            dB += 8;
        }
        else
        {
            y--;
            d += dB;
            dB += 16;
        }
        x++;
        dA += 8;
    }
    for (i = 0; i < 2*R+1; i++) printf("%s\n", B[i]);
}
```

This program is a simple implementation of the midpoint circle algorithm, which is used to draw a circle on a two-dimensional grid. When run, the program will print a circle with a radius of 20 units, using the character ‘X’ to mark the points on the circle.

To do this, the program first defines a 2-dimensional array named B with dimensions 2*R+1 by 2*R+2, where R is the radius of the circle. The array is initialized with spaces using the memset function, and the last column of the array is set to 0 using a for loop.

Next, the program sets the initial values for the variables used in the midpoint circle algorithm. These include the current x and y coordinates of the point being considered, as well as various intermediate values used to compute the next point on the circle.

The program then enters a while loop that continues until the value of x is greater than or equal to the value of y. Inside the while loop, the program uses the midpoint circle algorithm to compute the next point on the circle, given the current point. This involves checking if the value of d is less than 0, and computing new values for d, x, and y based on the result.

Once the new values have been computed, the program sets the corresponding element in the B array to 'X', to mark the point on the circle. The program then increments the value of x and updates the values of the other intermediate variables used in the algorithm.

After the while loop ends, the program uses a for loop to print each row of the B array to the console, resulting in a grid containing a circle with a radius of 20 units, drawn using the character 'X'.

No, it’s not ChatGPT that is baffled. I am baffled. Beyond speechless.
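The integer arithmetic in that second program is worth unpacking. Its d, dA and dB are exactly four times the textbook midpoint decision variable and its two increments:

- The decision variable starts at 5/4 - R.
- The increment is 2x + 3 when the midpoint lies inside the circle.
- The increment is 2(x - y) + 5 when the midpoint lies outside.

Scaling by four is what keeps everything in integers. The textbook alternative drops the fraction instead. Because every increment is an integer, starting from 1 - R leaves the outcome of the sign test d < 0 unchanged. Below is a sketch of that form for the first octant, with a hypothetical function name. It collects points rather than plotting them; the original mirrors each point into the other seven octants.

```c
#include <stddef.h>

/* First-octant points (x from 0 while x <= y) of a circle of radius r
 * centred at the origin, using the integer midpoint decision variable.
 * Stores at most max points in xs[]/ys[] and returns how many were stored. */
size_t midpoint_octant(int r, int xs[], int ys[], size_t max)
{
    int x = 0, y = r, d = 1 - r;   /* 5/4 - r with the fraction dropped */
    size_t n = 0;
    while (x <= y && n < max) {
        xs[n] = x;
        ys[n] = y;
        n++;
        if (d < 0) {
            d += 2 * x + 3;            /* midpoint inside: keep y */
        } else {
            d += 2 * (x - y) + 5;      /* midpoint outside: step y down */
            y--;
        }
        x++;
    }
    return n;
}
```

For r = 20 this produces the same first-octant points as the listing, starting at (0, 20) and ending at (14, 14).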