Been a while since I blogged about politics. Not because I don’t think about it a lot… but because lately, it’s become kind of pointless. We are, I feel, past the point when individuals trying to raise sensible concerns can accomplish anything. History is taking over, and it’s not leading us in the right direction.

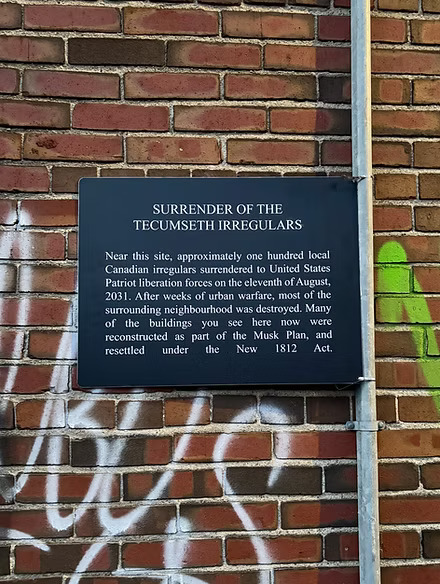

Looking beyond the specifics: be it the decisive US military action to remove Maduro in Venezuela (and the decision to leave Maduro’s regime in place with his VP in charge), the seizure of Russian-flagged tankers, yet another Russian act of sabotage against undersea infrastructure in the Baltics (this time caught red-handed by the Finns), the military situation in Ukraine, the murder of a Minnesota woman by ICE agents, more shootings by US Customs and Border Protection agents in Portland…

Never mind the politics of the day. It’s the bigger picture that concerns me.

Back in the 1990s, I was able to rent a decent apartment here in Ottawa, all utilities included, for 600-odd dollars. It was a decent apartment with a lovely view of the city. The building was reasonably well-maintained. Eventually we moved out because we bought our townhome. The price was well within our ability to finance.

Today, that same apartment rents for three times the amount. Our townhome? A similar one was sold for five times what we paid for ours 28 years ago. Now you’d think this is perfectly normal if incomes rose at the same pace, perhaps keeping pace with inflation. But that is not the case. The median income in the same time period increased by a measly 30%, give or take.

This, I daresay, is obscene. It means that a couple in their early 30s, like we were back then, doesn’t stand a chance in hell. Especially if they are immigrants like we were, with no family backing, no inherited wealth.

In light of this, I am not surprised by daily reports about rising homelessness, shelters filled to capacity, food banks struggling with demand.

What I find especially troubling is that we seem hell-bent on turning cautionary tales into reality. For instance, there’s the 1952 science-fiction novel by Pohl and Kornbluth, The Space Merchants. Nowadays considered a classic. It describes a society in which corporate power is running rampant, advertising firms rule the world, and profit trumps everything. Replace “advertising” with “social media”, make a few more surface tweaks and the novel feels like it was written in 2025. When the narrative describes the homeless seeking shelter in the staircases of Manhattan’s shiny office towers, I can’t help but think of all the homeless here on Rideau Street, just minutes away from Parliament Hill, in the heart of a wealthy G7 capital city.

Or how about a computer game from the golden era of 8-bit computing, one of the gems of Infocom, the leading company of the “interactive fiction” (that is, text adventures) genre? I have in mind A Mind Forever Voyaging, a game in which you play as the AI protagonist, tasked with entering simulations of your town’s future 10, 20, etc., years hence, to find out how bad policy leads to societal collapse. When I read their description of the city, I am again reminded of Rideau Street’s homeless population.

In one of his best novels, the famous Hungarian writer Jenő Rejtő (killed far too young, at 37, serving in a forced labor battalion on the Eastern Front in 1943, after being drafted on account of being a Jew) has a character, a gourmand chef, utter these words: “The grub is inedible. Back at Manson, they only cooked bad food. That was tolerable. But here, they are cooking good food badly, and that is insufferable.” The West is like that today. It’s not “bad food”: our countries are not dictatorships, not failed states governed by corrupt oligarchs, but proper liberal democracies. Yet, I feel, they are increasingly mismanaged, unable to deliver on what ought to be the basic contract between the State and its Subjects in any regime.

What basic contract? Bertolt Brecht put it best: “Erst kommt das Fressen, dann kommt die Moral”, says Macheath in Kurt Weill’s Threepenny Opera: “Food is the first thing, morals follow on.” A State must deliver food, shelter, basic security, a working infrastructure, and a legitimate hope that tomorrow will be better (or at least, not worse) than today.

A State that fails at that will itself fail. A State that succeeds at this mission will survive, even if it is an authoritarian regime. In fact, if the State is successful, it does not even need significant oppression to stay in power: it will, at the very least, be tolerated by the populace. (I grew up in such a state: Kádár’s “goulash communist” Hungary.) The liberal West forgets this at its own peril.

What happens when the basic contract is violated? People look for alternatives. They may become desperate. And that’s when populist demagogues arrive on the scene, presenting themselves as saviors. In reality, they have neither the ability nor the inclination to solve anything: they feed on desperation, not solutions.

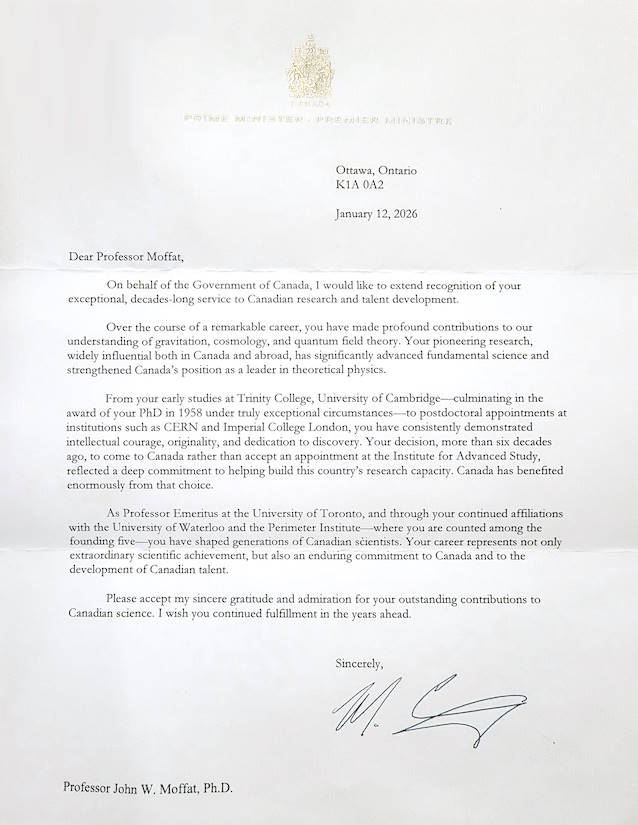

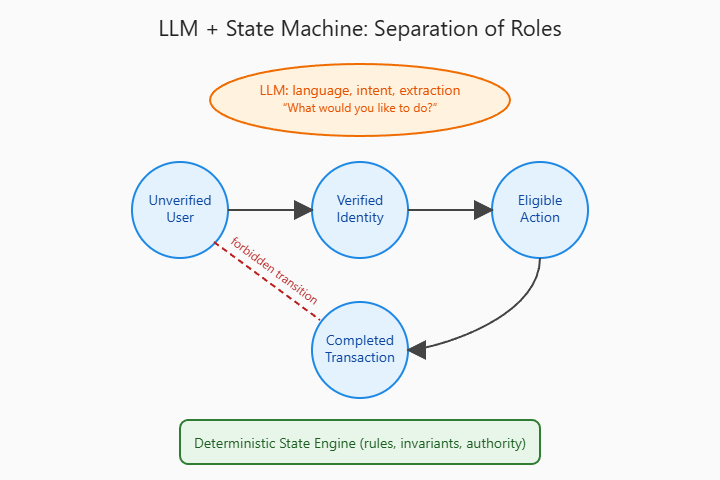

In contrast, successful States, liberal democracies and hereditary empires alike, have one thing in common: the dreaded “deep state”. That is to say, a competent meritocratic bureaucracy, capable of, and willing to, recognize and solve problems. A robust bureaucracy can survive several bad election cycles or even generations of bad Emperors. Imperial China serves as a powerful example, but we can also include the Roman Principate and, later, Byzantium, along with other empires that remained stable and prosperous for many generations.

No wonder wannabe despots often target the “deep state” first. A competent meritocratic bureaucracy, after all, stands in their way towards unconstrained power. Thinning out the ranks and hollowing out the institutions is therefore the first order of business for the would-be despot. It’s not always true, of course. Talented despots learn to rely on the competent bureaucracy as opposed to eliminating it. But talented despots are not myopic populist opportunists. They are that rare kind: empire builders. Far too often, the despots we encounter lack both the talent and the vision to build anything. They just exploit the pain, and undermine the very institutions that can alleviate that pain.

This is what we see throughout the West in 2026. Even as the warning signs get stronger—among them rising wealth and income inequality, an oligarchic concentration of astronomical wealth in just a few hands, rising homelessness, decaying infrastructure, an increasingly fragile health care system, rising indebtedness, lack of employment security—there appears to be way too little appetite for meaningful structural solutions. Instead, we get easy slogans. “It’s the damn immigrants,” says one side while the other retorts with a complaint about “white supremacism,” just to name some examples, without implying moral equivalence. The slogans solve nothing: they do create, however, the specter of an “enemy” that must be eliminated, an “enemy” from whom only the populist can protect you.

One of the best records of one of my favorite bands, Electric Light Orchestra, was the incredibly prescient concept album Time, released in 1981. In addition to predicting advanced, well-aligned AI in a way that feels almost uncanny in detail (“She does the things you do / But she is an IBM / She’s only programmed to be very nice / But she’s as cold as ice […] She tells me that she likes me very much […] She is the latest in technology / Almost mythology / But she has a heart of stone / She has an IQ of 1001 […] And she’s also a telephone”) they also describe a future that is… hollow: “Back in the good old 1980s / when things were so uncomplicated” – in other words, when Western liberal democracies still understood how to deliver on that basic contract, Brecht’s “basic food position”.