It’s time for me to write about physics again. I have a splendid reason: one of the recipients of this year’s physics Nobel is from Kingston, Ontario, which is practically in Ottawa’s backyard. He is recognized for his contribution to the discovery of neutrino oscillations. So I thought I’d write about neutrino oscillations a little.

Without getting into too much detail, the standard way of describing a theory of quantum fields is by writing down the so-called Lagrangian density of the theory. This Lagrangian density represents the kinetic and potential energies of the system, including so-called “mass terms” for fields that are massive. (Which, in quantum field theory, is the same as saying that the particles we associate with the unit oscillations of these fields have a specific mass.)

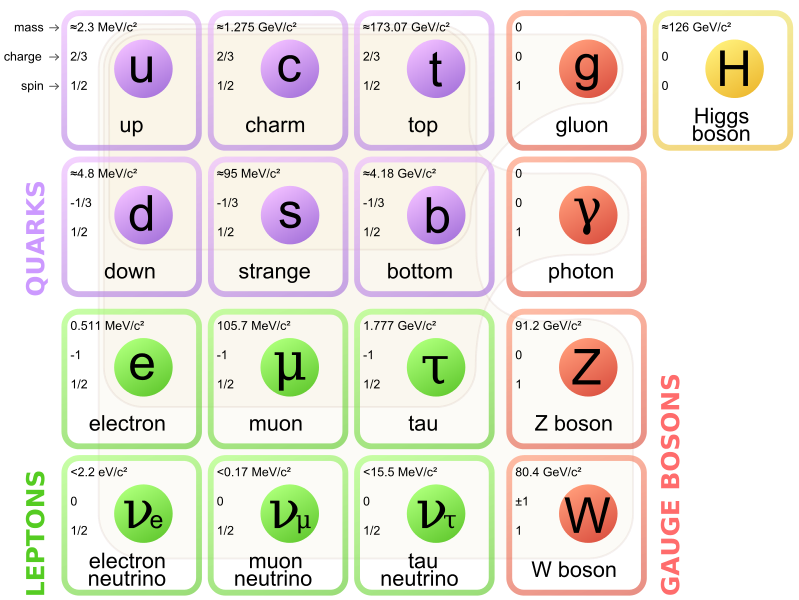

Now most massive particles in the Standard Model acquire their masses by interacting with the celebrated Higgs field in various ways. Not neutrinos though; indeed, until the mid-1990s or so, neutrinos were believed to be massless.

But then, neutrino oscillations were discovered and the physics community began to accept that neutrinos may be massive after all.

So what is this about oscillations? Neutrinos are somewhat complicated things, but I can demonstrate the concept using two hypothetical “scalar” particles (it doesn’t matter what they are; the point is that their math is simpler than that of neutrinos). So let’s have a scalar particle named \(\phi\). Let’s suppose it has a mass, \(\mu\). The mass term in the Lagrangian would then be of the form \(\frac{1}{2}\mu\phi^2\). (Strictly speaking, the coefficient in a scalar mass term is the square of the mass; I am letting a single symbol stand for it to keep the notation light.)

Now let’s have another scalar particle, \(\psi\), with mass \(\rho\). This means another mass term in the Lagrangian: \(\frac{1}{2}\rho\psi^2\).

But now I want to be clever and combine these two particles into a two-element abstract vector, a “doublet”. Then, using the laws of matrix multiplication, I could write the mass term as

$$\frac{1}{2}\begin{pmatrix}\phi&\psi\end{pmatrix}\cdot\begin{pmatrix}\mu&0\\0&\rho\end{pmatrix}\cdot\begin{pmatrix}\phi\\\psi\end{pmatrix}=\frac{1}{2}\mu\phi^2+\frac{1}{2}\rho\psi^2.$$

Clever, huh?

But now… let us suppose that there is also an interaction between the two fields. In the Lagrangian, this interaction would be represented by a term such as \(\epsilon\phi\psi\). Putting \(\epsilon\) into the “0” slots of the matrix, we get

$$\frac{1}{2}\begin{pmatrix}\phi&\psi\end{pmatrix}\cdot\begin{pmatrix}\mu&\epsilon\\\epsilon&\rho\end{pmatrix}\cdot\begin{pmatrix}\phi\\\psi\end{pmatrix}=\frac{1}{2}\mu\phi^2+\frac{1}{2}\rho\psi^2+\epsilon\phi\psi.$$
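To spell out the matrix arithmetic (just a worked expansion, treating \(\phi\) and \(\psi\) as ordinary commuting quantities), multiplying the row vector into the matrix first gives

$$\begin{pmatrix}\phi&\psi\end{pmatrix}\cdot\begin{pmatrix}\mu&\epsilon\\\epsilon&\rho\end{pmatrix}=\begin{pmatrix}\mu\phi+\epsilon\psi&\epsilon\phi+\rho\psi\end{pmatrix},$$

and multiplying the result by the column vector yields

$$\frac{1}{2}\left[(\mu\phi+\epsilon\psi)\phi+(\epsilon\phi+\rho\psi)\psi\right]=\frac{1}{2}\mu\phi^2+\frac{1}{2}\rho\psi^2+\epsilon\phi\psi.$$

Each of the two off-diagonal slots contributes \(\frac{1}{2}\epsilon\phi\psi\), which is why \(\epsilon\) appears in both and the cross term ends up without a factor of \(\frac{1}{2}\).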

And here is where things get really interesting. That is because we can re-express this new matrix using a combination of a diagonal matrix and a rotation matrix (and its transpose):

$$\begin{pmatrix}\mu&\epsilon\\\epsilon&\rho\end{pmatrix}=\begin{pmatrix}\cos\theta/2&\sin\theta/2\\-\sin\theta/2&\cos\theta/2\end{pmatrix}\cdot\begin{pmatrix}\hat\mu&0\\0&\hat\rho\end{pmatrix}\cdot\begin{pmatrix}\cos\theta/2&-\sin\theta/2\\\sin\theta/2&\cos\theta/2\end{pmatrix},$$

which is equivalent to

$$\begin{pmatrix}\hat\mu&0\\0&\hat\rho\end{pmatrix}=\begin{pmatrix}\cos\theta/2&-\sin\theta/2\\\sin\theta/2&\cos\theta/2\end{pmatrix}\cdot\begin{pmatrix}\mu&\epsilon\\\epsilon&\rho\end{pmatrix}\cdot\begin{pmatrix}\cos\theta/2&\sin\theta/2\\-\sin\theta/2&\cos\theta/2\end{pmatrix},$$

or

$$\begin{pmatrix}\hat\mu&0\\0&\hat\rho\end{pmatrix}=\frac{1}{2}\begin{pmatrix}\mu+\rho+(\mu-\rho)\cos\theta-2\epsilon\sin\theta&(\mu-\rho)\sin\theta+2\epsilon\cos\theta\\(\mu-\rho)\sin\theta+2\epsilon\cos\theta&\mu+\rho+(\rho-\mu)\cos\theta+2\epsilon\sin\theta\end{pmatrix},$$

Since the left-hand side is diagonal, the off-diagonal elements on the right must vanish. This tells us that \(\tan\theta=2\epsilon/(\rho-\mu)\), which works so long as \(\rho\ne\mu\).
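For the skeptical, here is a quick numerical sanity check of this rotation. It is only a sketch in plain Python: the function name `diagonalize_mass_matrix` and the sample values of \(\mu\), \(\rho\) and \(\epsilon\) are made up for illustration, and `math.atan2` is used to pick one particular branch of \(\theta\).

```python
import math

def diagonalize_mass_matrix(mu, rho, eps):
    """Rotate [[mu, eps], [eps, rho]] by theta/2 and return (theta, D)."""
    # atan2 picks one branch of tan(theta) = 2*eps/(rho - mu); it also
    # copes with rho == mu, where theta = +/- pi/2.
    theta = math.atan2(2.0 * eps, rho - mu)
    c, s = math.cos(theta / 2.0), math.sin(theta / 2.0)
    R = [[c, -s], [s, c]]          # rotation by theta/2
    M = [[mu, eps], [eps, rho]]    # mass matrix with the interaction term
    # RM = R . M
    RM = [[sum(R[i][k] * M[k][j] for k in range(2)) for j in range(2)]
          for i in range(2)]
    # D = RM . R^T  (the transpose is applied by swapping the indices of R)
    D = [[sum(RM[i][k] * R[j][k] for k in range(2)) for j in range(2)]
         for i in range(2)]
    return theta, D

# Illustrative numbers only.
theta, D = diagonalize_mass_matrix(mu=1.0, rho=2.0, eps=0.5)
print("theta =", theta)
print("rotated matrix =", D)
```

The off-diagonal entries of the printed matrix should be zero up to floating-point rounding, and the diagonal entries are the two eigenvalues of the original matrix.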

Now why is this interesting? Because we can now write

\begin{align}\frac{1}{2}&\begin{pmatrix}\phi&\psi\end{pmatrix}\cdot\begin{pmatrix}\mu&\epsilon\\\epsilon&\rho\end{pmatrix}\cdot\begin{pmatrix}\phi\\\psi\end{pmatrix}\\
&{}=\frac{1}{2}\begin{pmatrix}\phi&\psi\end{pmatrix}\cdot\begin{pmatrix}\cos\theta/2&\sin\theta/2\\-\sin\theta/2&\cos\theta/2\end{pmatrix}\cdot\begin{pmatrix}\hat\mu&0\\0&\hat\rho\end{pmatrix}\cdot\begin{pmatrix}\cos\theta/2&-\sin\theta/2\\\sin\theta/2&\cos\theta/2\end{pmatrix}\cdot\begin{pmatrix}\phi\\\psi\end{pmatrix}\\
&{}=\frac{1}{2}\begin{pmatrix}\hat\phi&\hat\psi\end{pmatrix}\cdot\begin{pmatrix}\hat\mu&0\\0&\hat\rho\end{pmatrix}\cdot\begin{pmatrix}\hat\phi\\\hat\psi\end{pmatrix}.\end{align}

What just happened, you ask? Well, we just rotated the abstract vector \((\phi,\psi)\) by the angle \(\theta/2\), and as a result, diagonalized the expression. Which is to say that whereas previously we had two interacting fields \(\phi\) and \(\psi\) with masses \(\mu\) and \(\rho\), we have now re-expressed the same physics using the two non-interacting fields \(\hat\phi\) and \(\hat\psi\) with masses \(\hat\mu\) and \(\hat\rho\).
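Explicitly, the rotated fields are read off from the rightmost rotation matrix acting on the doublet:

$$\begin{pmatrix}\hat\phi\\\hat\psi\end{pmatrix}=\begin{pmatrix}\cos\theta/2&-\sin\theta/2\\\sin\theta/2&\cos\theta/2\end{pmatrix}\cdot\begin{pmatrix}\phi\\\psi\end{pmatrix}=\begin{pmatrix}\phi\cos\theta/2-\psi\sin\theta/2\\\phi\sin\theta/2+\psi\cos\theta/2\end{pmatrix}.$$

As for the new masses, they are just the eigenvalues of the original matrix. Assuming one particular branch of the angle, namely \(\sin\theta=2\epsilon/\Delta\) and \(\cos\theta=(\rho-\mu)/\Delta\) with \(\Delta=\sqrt{(\rho-\mu)^2+4\epsilon^2}\) (a choice made here only for concreteness; the opposite branch swaps the two), they work out to

$$\hat\mu=\frac{1}{2}\left(\mu+\rho-\Delta\right),\qquad\hat\rho=\frac{1}{2}\left(\mu+\rho+\Delta\right).$$

Setting \(\epsilon=0\) with \(\rho>\mu\) gives back \(\hat\mu=\mu\) and \(\hat\rho=\rho\), as it should.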

So what is actually taking place here? Suppose that the doublet \((\phi,\psi)\) interacts with some other field, allowing us to measure the flavor of an excitation (particle) as being either a \(\phi\) or a \(\psi\). So far, so good.

However, when we attempt to measure the mass of the doublet, we will not measure \(\mu\) or \(\rho\), because the two states interact. Instead, we will measure \(\hat\mu\) or \(\hat\rho\), corresponding to the states \(\hat\phi\) or \(\hat\psi\), respectively: that is, one of the mass eigenstates.

Which means that if we first perform a flavor measurement, forcing the particle to be in either the \(\phi\) or the \(\psi\) state, followed by a mass measurement, there will be a nonzero probability of finding it in either the \(\hat\phi\) or the \(\hat\psi\) state, with corresponding masses \(\hat\mu\) or \(\hat\rho\). Conversely, if we first perform a mass measurement, the particle will be either in the \(\hat\phi\) or the \(\hat\psi\) state; a subsequent flavor measurement, therefore, may give either \(\phi\) or \(\psi\) with some probability.

In short, the flavor and mass eigenstates do not coincide.
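To put rough numbers on this, here is a small sketch in plain Python. It rests on a simplifying assumption that goes beyond the field-theory picture above: the flavor and mass states are treated as the basis vectors of a two-state quantum system, so that the probabilities are just squared overlaps between the two bases. The function name `mixing_probabilities` and the sample values are hypothetical.

```python
import math

def mixing_probabilities(mu, rho, eps):
    """Return (p_same, p_other) for a rotation by theta/2 between the bases.

    p_same  : probability that a phi state is found as phi_hat
              (equally, that a psi state is found as psi_hat)
    p_other : probability that a phi state is found as psi_hat
              (equally, that a psi state is found as phi_hat)
    """
    # One branch of tan(theta) = 2*eps/(rho - mu), chosen via atan2.
    theta = math.atan2(2.0 * eps, rho - mu)
    p_same = math.cos(theta / 2.0) ** 2
    p_other = math.sin(theta / 2.0) ** 2
    return p_same, p_other

# Illustrative numbers only.
p_same, p_other = mixing_probabilities(mu=1.0, rho=2.0, eps=0.5)
print("P(phi -> phi_hat) =", p_same)
print("P(phi -> psi_hat) =", p_other)
```

With no interaction (\(\epsilon=0\), \(\rho>\mu\)) the second probability vanishes and the two sets of states coincide; with \(\mu=\rho\) and any nonzero \(\epsilon\) the mixing is maximal and both probabilities are one half.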

This is more or less how neutrino oscillations work (again, omitting a lot of important details), except things get a bit more complicated, as neutrinos are fermions, not scalars, and the number of flavors is three, not two. But the basic principle remains the same.

Among the leptons, this is a feature specific to neutrinos, by the way. Charged leptons do not have mass eigenstates that are distinct from their flavor eigenstates. The mechanism that gives them masses is also different: instead of a self-interaction in the form of a mass matrix, charged leptons (as well as quarks) obtain their masses by interacting with the Higgs field. But that is a story for another day.

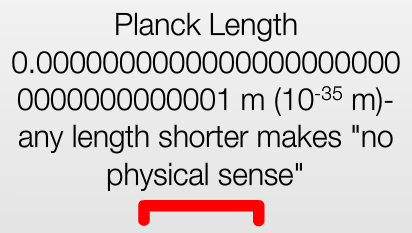

A physics meme is circulating on the Interwebs, suggesting that any length shorter than the so-called Planck length makes “no physical sense”.
