His views were notably controversial, especially in these troubled, polarized times. But his cartoons delighted millions, myself included.

Rest in peace, Scott Adams. Along with Catbert, we mourn you.

Been a while since I blogged about politics. Not because I don’t think about it a lot… but because lately, it’s become kind of pointless. We are, I feel, past the point when individuals trying to raise sensible concerns can accomplish anything. History is taking over, and it’s not leading us in the right direction.

Looking beyond the specifics: be it the decisive US military action to remove Maduro in Venezuela (and the decision to leave Maduro’s regime in place with his VP in charge), the seizure of Russian-flagged tankers, yet another Russian act of sabotage against undersea infrastructure in the Baltics (this time caught red-handed by the Finns), the military situation in Ukraine, the murder of a Minnesota woman by ICE agents, more shooting by US customs and border protection agents in Portland…

Never mind the politics of the day. It’s the bigger picture that concerns me.

Back in the 1990s, I was able to rent a decent apartment here in Ottawa, all utilities included, for 600-odd dollars. It was a decent apartment with a lovely view of the city. The building was reasonably well-maintained. Eventually we moved out because we bought our townhome. The price was well within our ability to finance.

Today, that same apartment rents for three times the amount. Our townhome? A similar one was sold for five times what we paid for ours 28 years ago. Now you’d think this is perfectly normal if incomes rose at the same pace, perhaps keeping pace with inflation. But that is not the case. The median income in the same time period increased by a measly 30%, give or take.

This, I daresay, is obscene. It means that a couple in their early 30s, like we were back then, doesn’t stand a chance in hell. Especially if they are immigrants like we were, with no family backing, no inherited wealth.

In light of this, I am not surprised by daily reports about rising homelessness, shelters filled to capacity, food banks struggling with demand.

What I find especially troubling is that we seem hell-bent on turning cautionary tales into reality. For instance, there’s the 1952 science-fiction novel by Pohl and Kornbluth, The Space Merchants. Nowadays considered a classic. It describes a society in which corporate power is running rampant, advertising firms rule the world, and profit trumps everything. Replace “advertising” with “social media”, make a few more surface tweaks and the novel feels like it was written in 2025. When the narrative describes the homeless seeking shelter in the staircases of Manhattan’s shiny office towers, I can’t help but think of all the homeless here on Rideau Street, just minutes away from Parliament Hill, in the heart of a wealthy G7 capital city.

Or how about a computer game from the golden era of 8-bit computing, one of the gems of Infocom, the leading company of the “interactive fiction” (that is, text adventures) genre? I have in mind A Mind Forever Voyaging, a game in which you play as the AI protagonist, tasked with entering simulations of your town’s future 10, 20, etc., years hence, to find out how bad policy leads to societal collapse. When I read their description of the city, I am again reminded of Rideau Street’s homeless population.

In one of his best novels, the famous Hungarian writer Jenő Rejtő (killed far too young, at 37, serving in a forced labor battalion on the Eastern Front in 1943, after being drafted on account of being a Jew) has a character, a gourmand chef, utter these words: “The grub is inedible. Back at Manson, they only cooked bad food. That was tolerable. But here, they are cooking good food badly, and that is insufferable.” The West is like that today. It’s not “bad food”: our countries are not dictatorships, not failed states governed by corrupt oligarchs, but proper liberal democracies. Yet, I feel, they are increasingly mismanaged, unable to deliver on what ought to be the basic contract between the State and its Subjects in any regime.

What basic contract? Bertolt Brecht put it best: “Erst kommt das Fressen, dann kommt die Moral”, says Macheath in Kurt Weill’s Threepenny Opera: “Food is the first thing, morals follow on.” A State must deliver food, shelter, basic security, a working infrastructure, and a legitimate hope that tomorrow will be better (or at least, not worse) than today.

A State that fails at that will itself fail. A State that succeeds at this mission will survive, even if it is an authoritarian regime. In fact, if the State is successful, it does not even need significant oppression to stay in power: it will, at the very least, be tolerated by the populace. (I grew up in such a state: Kádár’s “goulash communist” Hungary.) The liberal West may only forget this at its own peril.

What happens when the basic contract is violated? People look for alternatives. They may become desperate. And that’s when populist demagogues arrive on the scene, presenting themselves as saviors. In reality, they have neither the ability nor the inclination to solve anything: they feed on desperation, not solutions.

In contrast, successful States, liberal democracies and hereditary empires alike, share one thing in common: the dreaded “deep state”. That is to say, a competent meritocratic bureaucracy, capable of, and willing to, recognize and solve problems. A robust bureaucracy can survive several bad election cycles or even generations of bad Emperors. Imperial China serves as a powerful example, but we can also include the Roman Principate and later, Byzantium. Along with other examples of empires that remained stable and prosperous for many generations.

No wonder wannabe despots often target the “deep state” first. A competent meritocratic bureaucracy, after all, stands in their way towards unconstrained power. Thinning out the ranks, hollowing out the institutions is therefore the top order of the day for the would-be despot. It’s not always true of course. Talented despots learn to rely on the competent bureaucracy as opposed to eliminating it. But talented despots are not myopic populist opportunists. They are that rare kind: empire builders. Far too often, the despots we encounter lack both the talent and the vision to build anything. They just exploit the pain, and undermine the very institutions that can alleviate that pain.

This is what we see throughout the West in 2026. Even as the warning signs get stronger—among them rising wealth and income inequality, an oligarchic concentration of astronomical wealth in just a few hands, rising homelessness, decaying infrastructure, an increasingly fragile health care system, rising indebtedness, lack of employment security—there appears to be way too little appetite for meaningful structural solutions. Instead, we get easy slogans. “It’s the damn immigrants,” says one side while the other retorts with a complaint about “white supremacism,” just to name some examples, without implying moral equivalence. The slogans solve nothing: they do create, however, the specter of an “enemy” that must be eliminated, an “enemy” from whom only the populist can protect you.

One of the best records of one of my favorite bands, Electric Light Orchestra, was the incredibly prescient concept album Time, released in 1981. In addition to predicting advanced, well-aligned AI in a way that feels almost uncanny in detail (“She does the things you do / But she is an IBM / She’s only programmed to be very nice / But she’s as cold as ice […] She tells me that she likes me very much […] She is the latest in technology / Almost mythology / But she has a heart of stone / She has an IQ of 1001 […] And she’s also a telephone”) they also describe a future that is… hollow: “Back in the good old 1980s / when things were so uncomplicated” – in other words, when Western liberal democracies still understood how to deliver on that basic contract, Brecht’s “basic food position”.

To all good people everywhere: I wish for peace. A just peace.

You know what? Bad people, too. Peace is worth it.

So I had a surprisingly smooth experience with a chat agent, most likely an AI agent though probably with some human-in-the-loop supervision. This had to do with canceling/downgrading my Sirius XM subscription, now that we no longer have a vehicle with satellite radio.

And it got me thinking. Beyond the hype, what does it take to build a reliable AI customer experience (CX) agent?

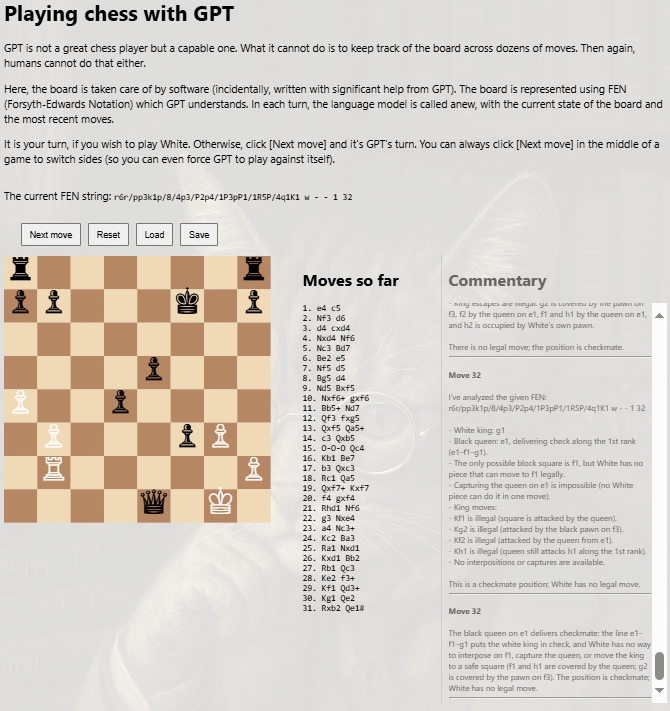

And that’s when it hit me: I already did it. Granted, not an agent per se, just the way I set up GPT-5 to play chess.

The secret? State machines.

I did not ask GPT to keep track of the board. I did not ask GPT to update the board either. I told GPT the state of the board and asked GPT to make a move.

The board state was tracked not by GPT but by conventional, deterministic code. The board is a state machine. Its state transitions are governed by the rules of chess. There is no ambiguity. The board’s state (including castling and en passant) is encoded in a FEN string unambiguously. When GPT offers a move, its validity is determined by a simple question: does it represent a valid state transition for the chessboard?
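To make the FEN idea concrete, here is a minimal Python sketch that splits a FEN record into its six standard fields and expands the piece placement into an 8×8 grid. The function name `parse_fen` and the dictionary keys are illustrative assumptions, not the code of the chess app described here, and the sketch only decodes the state: checking whether a proposed move is a legal transition would sit on top of it.

```python
def parse_fen(fen: str) -> dict:
    """Split a FEN record into its six fields and expand the board (illustrative helper)."""
    placement, side, castling, en_passant, halfmove, fullmove = fen.split()
    rows = []
    for rank in placement.split("/"):
        row = []
        for ch in rank:
            if ch.isdigit():
                row.extend(["."] * int(ch))  # a digit is a run of empty squares
            else:
                row.append(ch)  # uppercase = White piece, lowercase = Black piece
        if len(row) != 8:
            raise ValueError(f"bad rank in FEN: {rank!r}")
        rows.append(row)
    if len(rows) != 8:
        raise ValueError("FEN must describe exactly 8 ranks")
    return {
        "board": rows,                     # rows[0] is rank 8, rows[7] is rank 1
        "side_to_move": side,              # "w" or "b"
        "castling": castling,              # e.g. "KQkq", or "-" if none
        "en_passant": en_passant,          # target square such as "e3", or "-"
        "halfmove_clock": int(halfmove),
        "fullmove_number": int(fullmove),
    }


# The standard starting position.
START = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
state = parse_fen(START)
```

Because the whole position, including castling rights and the en passant square, lives in one short string, it can be handed to the model verbatim at every turn instead of asking the model to remember anything.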

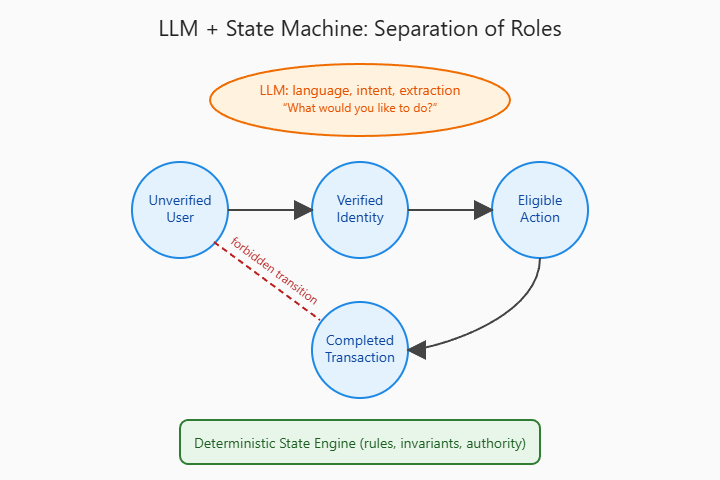

And this is how a good AI CX agent works. It does not unilaterally determine the state of the customer’s account. It offers state changes, which are then evaluated by the rigid logic of a state machine.

Diagram created by ChatGPT to illustrate a CX state machine

Take my case with Sirius XM. Current state: Customer with a radio and Internet subscription. Customer indicates intent to cancel radio. Permissible state changes: Customer cancels; customer downgrades to Internet-only subscription. This is where the LLM comes in: with proper scaffolding and a system prompt, it interrogates the customer. Do you have any favorite Sirius XM stations? Awesome. Are you planning on purchasing another XM radio (or a vehicle equipped with one)? No, fair enough. Would you like a trial subscription to keep listening via the Internet-only service? Great. State change initiated… And that’s when, for instance, a human supervisor comes in, to approve the request after glancing at the chat transcript.

The important thing is, the language model does not decide what the next state is. It has no direct authority over the state of the customer’s account. What it can do, the only thing it can do at this point, is initiate a valid state transition.
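A minimal sketch of this division of labor, in Python. Everything here is a hypothetical illustration: the state names, the action strings, the `Account` class and the approval step are assumptions made for the example, not a description of any real vendor's system. The point is only that the model's output is reduced to a proposed action, and a lookup table decides whether that action is a permitted transition.

```python
from enum import Enum


class Plan(Enum):
    RADIO_AND_INTERNET = "radio+internet"
    INTERNET_ONLY = "internet-only"
    CANCELLED = "cancelled"


# Permitted transitions: (current state, requested action) -> next state.
TRANSITIONS = {
    (Plan.RADIO_AND_INTERNET, "downgrade"): Plan.INTERNET_ONLY,
    (Plan.RADIO_AND_INTERNET, "cancel"): Plan.CANCELLED,
    (Plan.INTERNET_ONLY, "cancel"): Plan.CANCELLED,
}


class Account:
    def __init__(self, plan):
        self.plan = plan
        self.pending = None  # transition proposed but not yet approved

    def propose(self, action):
        """Record the action the language model extracted from the chat, if it is permitted."""
        target = TRANSITIONS.get((self.plan, action))
        if target is None:
            return False  # not a valid transition: the state is left untouched
        self.pending = target
        return True

    def approve(self):
        """Called by the human supervisor; only now does the account state change."""
        if self.pending is None:
            raise RuntimeError("nothing to approve")
        self.plan, self.pending = self.pending, None


account = Account(Plan.RADIO_AND_INTERNET)
rejected = account.propose("upgrade_to_platinum")  # not in the table, so refused
accepted = account.propose("downgrade")            # valid, waits for approval
account.approve()
```

However persuasive or confused the chat becomes, the account can only ever move along an edge that exists in `TRANSITIONS`.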

The hard part when it comes to designing such a CX solution is mapping the states adequately, and making sure that the AI has the right instructions. Here is what is NOT needed:

In fact, a modest locally run model like Gemma-12B would be quite capable of performing the chat function. So there’s no need even to worry about leaking confidential customer information to the cloud.

Bottom line: use language models for what they do best, associative reasoning. Do not try to use a system with no internal state and no modeling capability as a reasoning engine. If I may offer a crude but (I hope) not stupid analogy, that is like building a world-class submarine and then, realizing that it is not capable of flying, nailing some makeshift wooden wings onto its body.

I almost feel tempted to create a mock CX Web site to demonstrate all this in practice. Then again, I realize that my chess implementation already does much of the same: the AI agent supplies a narrative and a proposed state transition, but the state (the chessboard) is maintained, its consistency is ensured, by conventional software scaffolding.

Good-bye, 2022 Accord. Hardly knew ya.

Really, our Accord had ridiculously low mileage. We weren’t driving much even before COVID but since then? I’ve not been to NYC since 2016, or to the Perimeter Institute since, what, 2018 I think. In fact in the past 5 years, the farthest I’ve been from Ottawa was Montreal, maybe twice.

Needless to say, when our dealer saw a car with such low mileage, they pounced. Offered a new lease. I told them, sure, but I’m downsizing: no need for an Accord when we use the car this little, a Civic will do just fine. And a Civic it is.

Things missing? Very few. In no particular order:

This is it. Really. That’s all. (I thought it also lacked blindspot warning, but I was mistaken.) And it’s a car just as decent and capable as the Accord, but substantially cheaper. So… who am I to complain?

So here we go, nice little Civic, until this lease expires. (No, I am not buying cars anymore. They are loaded with things that, when they go bad, are very costly to repair or replace. The technical debt is substantial.)

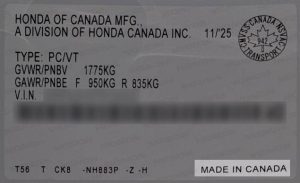

Almost forgot this rather important bit:

Yes. Made in Canada. These days, sadly, it matters.

It happened in 1959, at the height of the Cold War, during the early years of the Space Race between the United States of America and the Soviet Union.

An accident in rural Oklahoma, near the town of Winganon.

But… don’t be fooled by appearances. This thing is not what it looks like.

That is to say, it is not a discarded space capsule, some early NASA relic.

What is it, then? Why, it is the container of a cement mixer that had an accident at this spot. Cement, of course, has the nasty tendency to solidify rapidly if it is not mixed, and we quickly end up with a block that weighs several tons and… well, it’s completely useless.

There were, I understand, plans to bury the thing but it never happened.

But then, in 2011, local artists had an idea and transformed the thing into something else. Painting it with a NASA logo, an American flag, and adding decorations, they made it appear like a discarded space capsule.

And I already know that if it ever happens again that I take another cross-country drive in America to visit the West Coast, and my route goes anywhere near the place, I will absolutely, definitely, visit it. Just as I visited the TARDIS of Doctor Who 12 years ago when I was spending a few lovely days in the fine city of London.

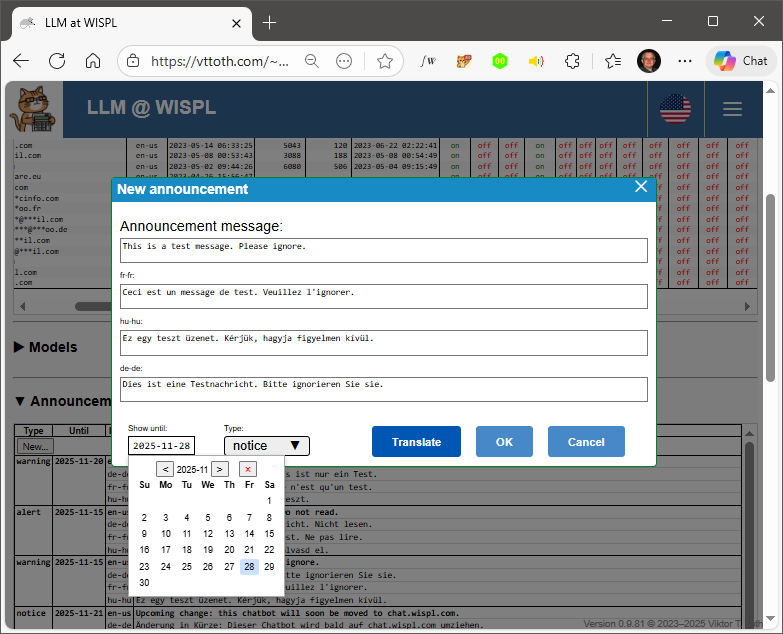

Behind every front-end there is a back-end. My WISPL.COM chatbot is no exception. It’s one thing to provide a nice chatbot experience to my select users. It’s another thing to be able to manage the system efficiently.

Sure, I can, and do, perform management tasks directly in the database, using SQL commands. But it’s inelegant and inconvenient. And just because I am the only admin does not mean I cannot make my own life easier by creating a more streamlined management experience.

Take announcements. The WISPL chatbot operates in four languages. Creating an announcement entails writing it in a primary language, translating it into three other languages, and then posting the requisite records to the database. Doing it by hand is not hard, but a chore.

Well, not anymore. I just created a nice back-end UI for this purpose. By itself it’s no big deal of course, but it’s the first time the software itself uses a large language model for a targeted purpose.

Note the highlighted Translate button. It sends the English-language text to a local copy of Gemma, Google’s open-weights LLM. Gemma is small but very capable. Among other things, it can produce near flawless translations into, never mind German or French, even Hungarian.

This back-end also lets me manage WISPL chatbot users as well as the language models themselves. It shows system logs, too.

Earlier this morning, still in bed, I was thinking about how electricity entered people’s lives in the past century or so.

My wife and I personally knew older people who spent their childhood in a world without electricity.

One could almost construct a timeline along these lines. This is what was on my mind earlier in the morning as I was waking up.

And then, a few hours later, a post showed up in my feed on Facebook, courtesy of the City of Ottawa Archives, accompanied by an image of some exhibition grounds celebrating World Television Day back in 1955.

It’s almost as though Facebook read my mind.

No, I do not believe that they did (otherwise I’d be busy constructing my first official tinfoil hat) but it is still an uncanny coincidence. I could not have possibly come up with a better illustration to accompany my morning thoughts on this subject.

It was high time, I think. I just finished putting together a Web site that showcases my AI and machine learning related work.

The site is called WISPL. It is a domain name I fortuitously obtained almost a decade ago with an entirely different concept in mind, but which fits perfectly. It’s a short, pronounceable domain name and it reminds one of the phrase, “AI whisperer”.

The site has of course been the home of my “chatbot” for more than two years already, but now it is something more. In addition to the chatbot, I now present my retrieval augmented generation (RAG) solution; I show a Web app that allows the user to play chess against GPT “properly” (while also demonstrating the ground truth that autoregressive stochastic next-token predictors will never be great reasoning engines); I showcase my work on Maxima (the computer algebra system, an example of more “conventional” symbolic AI); and I describe some of my AI/ML research projects.

I came across this meme earlier today:

For the first time in history, you can say “He is an idiot” and 90% of the world will know whom you are talking about.

In an inspired moment, I fed this sentence, unaltered, to Midjourney. Midjourney 6.1 to be exact.

Our AI friends are… fun.

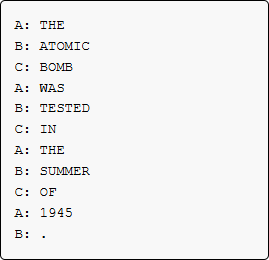

While I was working on my minimalist but full implementation of a GPT, I also thought of a game that can help participants understand better how language models really work. Here are the rules:

Say, there are three participants, Alice, Bob and Christine, trying to answer the question, “What was the most significant geopolitical event of the 20th century”?

Did Alice really want to talk about the atomic bomb? Perhaps she was thinking of the Sarajevo assassination and the start of WW1. Or the collapse of the USSR.

Did Bob really mean to talk about the bomb? Perhaps he was thinking about the discovery of the atomic nature of matter and how it shaped society. Or maybe something about the atomic chain reaction?

Did Christine really mean to talk about the first atomic test, the Trinity test in New Mexico? Maybe she had in mind Hiroshima and Nagasaki.

The answer we got is an entirely sensible answer. But none of the participants knew that this would be the actual answer. There was no “mind” conceiving this specific answer. Yet the “latent knowledge” was present in the “network” of the three players. At each turn, there were high probability and lower probability variants. Participants typically but not necessarily picked the highest probability “next word”, but perhaps opted for a lower probability alternative on a whim, for instance when Bob used “TESTED” instead of “DROPPED”.

Language models do precisely this, except that in most cases, what they predict next is not a full word (though it might be) but a fragment, a token. There is no advance knowledge of what the model would say, but the latent knowledge is present, as a result of the model’s training.
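The choice between the likeliest word and a lower-probability one can be sketched in a few lines of Python. The probabilities below are made up for illustration (only “DROPPED” and “TESTED” come from the game above; “INVENTED” and all the numbers are assumptions), and the function names are hypothetical. A real model does the same thing over tens of thousands of tokens rather than three words.

```python
import random

# Made-up probabilities for the next word at one turn of the game.
NEXT_WORD = {"DROPPED": 0.6, "TESTED": 0.3, "INVENTED": 0.1}


def greedy(dist):
    """Always pick the most probable continuation."""
    return max(dist, key=dist.get)


def sample(dist, rng, temperature=1.0):
    """Pick a continuation at random, weighted by p ** (1 / temperature).

    A temperature below 1 sharpens the distribution toward the likeliest word;
    a temperature above 1 flattens it, making "whims" more frequent.
    """
    words = list(dist)
    weights = [dist[w] ** (1.0 / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
picks = [sample(NEXT_WORD, rng) for _ in range(1000)]
counts = {w: picks.count(w) for w in NEXT_WORD}
```

Over many draws the counts follow the probabilities, yet any single draw may land on the less likely word, which is all that happened when “TESTED” was chosen over “DROPPED”.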

In 1980, Searle argued, in the form of his famous Chinese Room thought experiment, that algorithmic symbol manipulation does not imply understanding. In his proposed game, participants who do not speak Chinese manipulate Chinese language symbols according to preset rules, conveying the illusion of comprehension without actual understanding. I think my little game offers a perfect counterexample: A non-algorithmic game demonstrating the emergence of disembodied intelligence based on the prior world knowledge of its participants, but not directly associated with any specific player.

My wife and I just played two turns of this game. It was a fascinating experience for both of us.

A few weeks ago I had an idea.

What if I implement a GPT? No, not something on the scale of ChatGPT, with many hundreds of billions of parameters, consuming countless terawatt-hours, training on a corpus that encompasses much of the world’s literature and most of the Internet.

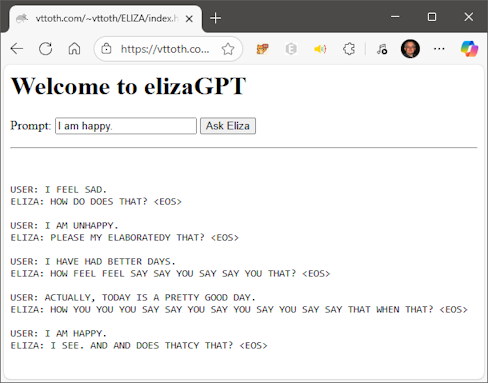

No, something far more modest. How about… a GPT that emulates the world’s first chatbot, Eliza?

Long story short (the long story will follow in due course on my Web site) I succeeded. I have built a GPT from scratch in C++, including training. I constructed a sensible (though far from perfect) training corpus of user prompts and Eliza responses. And over the course of roughly a week, using a consumer-grade GPU for hardware acceleration, I managed to train my smallest model.

No, don’t expect perfection. My little model does not have hundreds of billions of parameters. It does not even have millions of parameters. It is only a 38 thousand (!) parameter model.

Yet… it works. Sometimes its output is gibberish. But most of the time, the output is definitely Eliza-like.

The best part? The model is so small, its inference runtime works well when implemented in JavaScript, running in-browser.

And here is my first ever exchange with the JavaScript implementation, unfiltered and unedited.

No, I am not going to win awards with this chatbot, but the fact that it works at all, and that it successfully learned the basic Eliza-like behavior is no small potatoes.

For what it’s worth, I was monitoring its training using a little bit of homebrew near-real-time instrumentation, which allowed me to keep an eye on key model parameters, making sure that I intervene, adjusting learning rates, to prevent the training from destabilizing the model.

I am now training a roughly 10 times larger version. I do not yet know if that training will be successful. If it is, I expect its behavior will be more robust, with less gibberish and more Eliza-like behavior.

In the meantime, I can now rightfully claim that I know what I am talking about… after all, I have a C++ implementation, demonstrably working, complete with backpropagation, by way of credentials.

Now that I have put together my little RAG project (little but functional, more than a mere toy demo) it led to another idea. The abstract vector database (embedding) that represents my answers can be visualized, well, sort of, in a two-dimensional representation, and I built just that: an interactive visualization of all my Quora answers.

It is very educational to explore how the embedding model managed to cluster answers by semantics. As a kind of a trivial example, there is a little “cat archipelago” in the upper right quadrant: several of my non-physics answers related to cats can be found in this corner. Elsewhere there is, for instance, a cluster of some of my French-language answers.

Anyhow, feel free to take a look. It’s fun. Unlike the RAG engine itself, exploring this map does not even consume any significant computing (GPU) resources on my server.

I’ve been reading about this topic a lot lately: Retrieval Augmented Generation, the next best thing that should make large language models (LLMs) more useful, respond more accurately in specific use cases. It was time for me to dig a bit deeper and see if I can make good sense of the subject and understand its implementation.

The main purpose of RAG is to enable a language model to respond using, as context, a set of relevant documents drawn from a documentation library. Preferably, relevance itself is established using machine intelligence, so it’s not just some simple keyword search but semantic analysis that helps pick the right subset.

One particular method is to represent documents in an abstract vector space of many dimensions. A query, then, can be represented in the same abstract vector space. The most relevant documents are found using a “cosine similarity search”, which is to say, by measuring the “angle” between the query and the documents in the library. The smaller the angle (the closer the cosine is to 1) the more likely the document is a match.

The abstract vector space in which representations of documents “live” is itself generated by a specialized language model (an embedding model.) Once the right documents are found, they are fed, together with the user’s query, to a generative language model, which then produces the answer.
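The retrieval step described above can be sketched in plain Python. The three-dimensional vectors and document names are toy assumptions chosen so the numbers are easy to follow; a real embedding model produces vectors with hundreds or thousands of dimensions, and the helper names `cosine` and `top_k` are illustrative.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def top_k(query, library, k=2):
    """Return the ids of the k documents most similar to the query vector."""
    ranked = sorted(library, key=lambda doc_id: cosine(query, library[doc_id]), reverse=True)
    return ranked[:k]


# Toy "embeddings" (assumed values, not output of any real model).
LIBRARY = {
    "black-holes": [0.9, 0.1, 0.0],
    "cats": [0.0, 0.2, 0.9],
    "gravity": [0.8, 0.3, 0.1],
}

query = [1.0, 0.2, 0.0]  # a physics-flavored question, embedded in the same space
hits = top_k(query, LIBRARY)
```

The texts behind `hits` would then be placed into the prompt alongside the user's question before the generative model is called.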

As it turns out, I just had the perfect example corpus for a test, technology demo implementation: My more than 11,000 Quora answers, mostly about physics.

Long story short, I now have this:

The nicest part: This RAG solution “lives” entirely on my local hardware. The main language model is Google’s Gemma with 12 billion parameters. At 4-bit quantization, it fits comfortably within the VRAM of a 16 GB consumer-grade GPU, leaving enough room for the cosine similarity search. Consequently, the model responds to queries in record time: the answer page shown in this example was generated in roughly 30 seconds or less.
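A back-of-the-envelope check of why this fits, as a short Python calculation. It counts only the raw weights: real quantized model files also store per-block scaling factors, and the context cache needs memory too, so actual usage is somewhat higher than the figure computed here. The helper name is an assumption for illustration.

```python
def weight_bytes(n_params, bits_per_param):
    """Raw storage for the weights alone, ignoring quantization metadata."""
    return n_params * bits_per_param / 8


GB = 1e9  # decimal gigabytes

q4_gb = weight_bytes(12e9, 4) / GB     # 4-bit quantized weights
fp16_gb = weight_bytes(12e9, 16) / GB  # the same model at 16 bits per weight

print(f"4-bit: {q4_gb:.1f} GB, 16-bit: {fp16_gb:.1f} GB")
```

At 4 bits the weights come to about 6 GB, well inside a 16 GB card, whereas the same model at 16 bits per weight would need about 24 GB and would not fit.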

I regularly get despicable garbage on Facebook, for instance:

Meanwhile, just the other day, Facebook apparently lost all my prior notifications. Not sure if it is a site-wide problem or specific to my account, but it was annoying either way.

And then… this. I regularly repost my blog entries to Facebook. Over the past year, they randomly removed three of them, for allegedly violating their famous “community standards”. (Because Meta cares so much about “community”. Right.) The three that they removed were

So why do I even bother with Facebook, then? Well, a good question with a simple answer: there are plenty of people — old friends, classmates — that I’d lose touch with otherwise.

That does not mean that I have to like the experience.

Anyhow, now I wonder if this post will also be banned as “spam” by their broken algorithms. Until then, here’s an image of our newest cat.

Marcel may be young (just over 3 months) but he already understands a lot about the world. Including Facebook. His facial expression says it all.

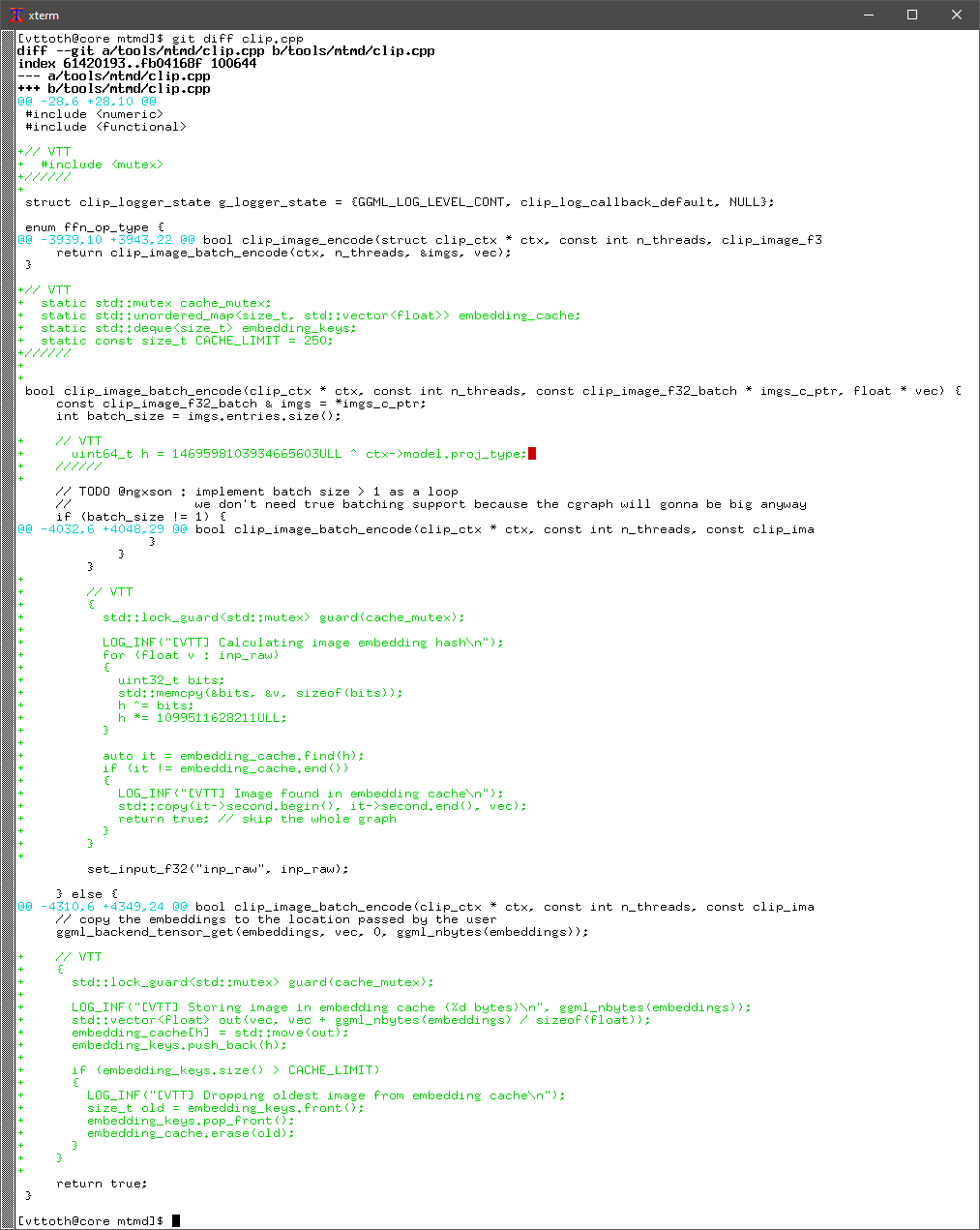

There is a wonderful tool out there that works with many of the published large language models and multimodal models: Llama.cpp, a pure C++ implementation of the inference engine to run models like Meta’s Llama or Google’s Gemma.

The C++ implementation is powerful. It allows a 12-billion parameter model to run at speed even without GPU acceleration, and emit 3-4 tokens per second in the generation phase. That is seriously impressive.

There is one catch. Multimodal operation with images requires embedding, which is often the most time-consuming part. A single image may take 45-60 seconds to encode. And in a multi-turn conversation, the image(s) are repeatedly encoded, slowing down the conversation at every turn.

An obvious solution is to preserve the embeddings in a cache and avoid re-embedding images already cached. Well, this looked like a perfect opportunity to deep-dive into the Llama.cpp code base and make a surgical change. A perfect opportunity also to practice my (supposedly considerable) C++ skills, which I use less and less these days.
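The caching idea itself is simple enough to sketch in Python. This is not the C++ change made to Llama.cpp; it is a hypothetical illustration in which the cache is keyed by a hash of the image bytes (an assumption) and `fake_encoder` stands in for the slow vision encoder.

```python
import hashlib


class EmbeddingCache:
    """Remember image embeddings so each distinct image is encoded only once."""

    def __init__(self, encode):
        self._encode = encode  # the expensive function: image bytes -> embedding
        self._store = {}
        self.misses = 0        # how many times the encoder actually ran

    def get(self, image_bytes):
        key = hashlib.sha256(image_bytes).hexdigest()  # identical bytes, identical key
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._encode(image_bytes)
        return self._store[key]


def fake_encoder(image_bytes):
    """Stand-in for the real encoder; returns a dummy vector from the first bytes."""
    return [float(b) for b in image_bytes[:4]]


cache = EmbeddingCache(fake_encoder)
image = b"\x89PNG fake image data"
first = cache.get(image)   # first turn: the encoder runs
second = cache.get(image)  # later turns: served from the cache
```

In a multi-turn conversation, the 45-60 second encoding cost is then paid once per image rather than once per turn.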

Well, what can I say? I did it and it works.

I can now converse with Gemma, even with image content, and it feels much snappier.

Once again, I am playing with “low-end” language and multimodal AI running on my own hardware. And I am… somewhat astonished.

But first… recently, I learned how to make the most out of published models available through Hugging Face, using the Llama.cpp project. This project is a C++ “engine” that can run many different models if they are presented in a standard form. In fact, I experimented with Llama.cpp earlier, but only a prepackaged version. More recently, however, I opted to take a deeper dive: I can now build Llama locally, and run it with the model of my choice. And that is exactly what I have been doing.

How efficient is Llama.cpp? Well… we can read a lot about just how much power it takes to run powerful language models and the associated insane hardware requirements in the form of powerful GPUs with tons of high-speed RAM. Sure, that helps. But Llama.cpp can run a decent model in the ~10 billion parameter range even without a GPU, and still produce output at a rate of 3-5 tokens (maybe 2-3 words) per second.

But wait… 10 billion? That sounds like a lot until we consider that the leading-edge, “frontier class” models are supposedly in the trillion-parameter range. So surely, a “tiny” 10-billion parameter model is, at best, a toy?

Maybe not.

Take Gemma, now fully incorporated into my WISPL.COM site by way of Llama.cpp. Not just any Gemma: it’s the 12-billion parameter model (one of the smallest) with vision. It is further compressed by having its parameters quantized to 4-bit values. In other words, it’s basically as small as a useful model can be made. Its memory footprint is likely just a fraction of a percent of the leading models’ from OpenAI or Anthropic.

I had a test conversation with Gemma the other day, after ironing out details. Gemma is running here with a 32,768 token context window, using a slightly customized version of my standard system prompt. And look what it accomplished in the course of a single conversation:

Throughout it all, and despite the numerous context changes, the model never lost coherence. The final exchanges were rather slow in execution (approximately 20 minutes to parse all images and the entire transcript and generate a response) but the model remained functional.

prompt eval time = 1102654.82 ms / 7550 tokens ( 146.05 ms per token, 6.85 tokens per second)

eval time = 75257.86 ms / 274 tokens ( 274.66 ms per token, 3.64 tokens per second)

total time = 1177912.68 ms / 7824 tokens

This is very respectable performance for a CPU-only run of a 12-billion parameter model with vision. But I mainly remain astonished by the model’s capabilities: its instruction-following ability, its coherence, its robust knowledge that remained free of serious hallucinations or confabulations despite the 4-bit quantization.

In other words, this model may be small but it is not a toy. And the ability to run such capable models locally, without cloud resources (and without the associated leakage of information) opens serious new horizons for diverse applications.

I hear from conservative-leaning friends, especially conservative-leaning friends in the US, that they are fed up. They just want to be… left alone.

Left alone in what sense? I dare not ask because I know the answers. They will tell me things like (just a few examples):

I could counter this by pointing out that taxes pay for the infrastructure we all use. That vaccinations protect not just the vaccinated but those around them. That the Second Amendment is about denying government a monopoly on organized violence, not about individuals with a peashooter-carrying fetish. That (in the US) illegals power a significant chunk of the economy while existing as second-class citizens. That rioting on Capitol Hill is not democracy. That regulation is what keeps fish alive in rivers and supermarket food safe. That respect for bodily autonomy is not murder. That parents do not have a right to abuse their children or deny them medical treatment.

But by doing so, I’d only contribute to the problem, by helping to deepen divisions. And pointing out that the identity politics of the liberal-leaning crowd is often no better (bringing race and gender into everything including abstract mathematics is the exact opposite of the great society in which we are all judged by the content of our character) would only fuel the grievance.

So instead, how about I mention a few very personal examples from my immediate circle of family and friends. People who were not left alone (and believe you me, my little list is far from complete):

In light of this, perhaps my exasperation is a tad more understandable when I listen to folks presenting themselves as victims while living as comfortably middle-class denizens in the First World, enjoying freedoms and a standard of living without precedent: a life of freedom and prosperity that I could only dream about at, say, age 20.

I again played a little with my code that implements a functional user interface to play chess with language models.

This time around, I tried to play chess with GPT-5. The model played reasonably, roughly at my level as an amateur: it knows the rules, but its reasoning is superficial and it loses a game even against a weak machine opponent (GNU Chess at its lowest level.)

Tellingly, it is strong in the opening moves, when it can rely on its vast knowledge of the chess literature. It then becomes weak mid-game.

In my implementation, the model is asked to reason and then move. It comments as it reasons. When I showed the result to another instance of GPT-5, it made an important observation: language models have rhetorical competence, but little tactical competence.

This, actually, is a rather damning statement. It implies that efforts to turn language models into autonomous “reasoning agents” are likely misguided.

This should come as no surprise. Language models learn, well, they learn language. They have broad knowledge and can be extremely useful assistants at a wide variety of tasks, from business writing to code generation. But their knowledge is not grounded in experience. Just as they cannot track the state of a chess board, they cannot analyze the consequences of a chain of decisions. The models produce plausible narratives, but they are often hollow shells: there is no real understanding of the consequences of decisions.

This is well in line with recent accounts of LLMs failing at complex coordination or problem-solving tasks. The same LLM that writes a flawless subroutine under the expert guidance of a seasoned software engineer often produces subpar results in a “vibe coding” exercise when asked to deliver a turnkey solution.

My little exercise using chess offers a perfect microcosm. The top-of-the-line LLM, GPT-5, knows the rules of chess, “understands” chess. Its moves are legal. But it lacks the ability to analyze the outcome of its planned moves to any meaningful depth: thus, it pointlessly sacrifices its queen, loses pieces in reckless moves, and ultimately loses the game even against a lowest-level machine opponent. The model’s rhetorical strength is exemplary; its tactical abilities are effectively non-existent.

This reflects a simple fact: LLMs are designed to produce continuation of text. They are not designed to perform in-depth analysis of decisions and consequences.

The inevitable conclusion is that attempts to use LLMs as high-level agents, orchestrators of complex behavior without external grounding are bound to fail. Treating language models as autonomous agents is a mistake: they should serve as components of autonomous systems, but the autonomy itself must come from something other than a language model.