A few weeks ago I had an idea.

What if I implement a GPT? No, not something on the scale of ChatGPT, with many hundreds of billions of parameters, consuming countless terawatt-hours, training on a corpus that encompasses much of the world’s literature and most of the Internet.

No, something far more modest. How about… a GPT that emulates the world’s first chatbot, Eliza?

Long story short (the long story will follow in due course on my Web site), I succeeded. I have built a GPT from scratch in C++, including training. I constructed a sensible (though far from perfect) training corpus of user prompts and Eliza responses. And over the course of roughly a week, using a consumer-grade GPU for hardware acceleration, I managed to train my smallest model.

No, don’t expect perfection. My little model does not have hundreds of billions of parameters. It does not even have millions of parameters. It is only a 38 thousand (!) parameter model.
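To give a feel for how a parameter count in this range adds up, here is a rough sketch that tallies the trainable parameters of a generic decoder-only transformer. The layout (learned positional embeddings, a 4x-wide MLP, biases on every linear layer, two layer norms per block) and the names `GptConfig`, `block_params` and `count_params` are assumptions made for illustration; the actual architecture and hyperparameters of the model are not given in this post.

```cpp
#include <cstdint>

// Hypothetical hyperparameters; none of these values come from the post.
struct GptConfig {
    std::int64_t vocab_size;   // number of distinct tokens
    std::int64_t context_len;  // maximum sequence length (learned positions)
    std::int64_t d_model;      // embedding width
    std::int64_t n_layers;     // number of transformer blocks
    bool tied_output;          // true if the output head reuses the token embedding
};

// Trainable parameters in one block, assuming a 4x-wide MLP and biases everywhere.
std::int64_t block_params(std::int64_t d) {
    std::int64_t attention = 3 * (d * d + d)   // query, key, value projections
                           + (d * d + d);      // attention output projection
    std::int64_t mlp = (d * 4 * d + 4 * d)     // expand to 4*d
                     + (4 * d * d + d);        // project back to d
    std::int64_t norms = 2 * (2 * d);          // two layer norms, scale and shift each
    return attention + mlp + norms;
}

// Total trainable parameters for the assumed layout.
std::int64_t count_params(const GptConfig& c) {
    std::int64_t embeddings = (c.vocab_size + c.context_len) * c.d_model;
    std::int64_t final_norm = 2 * c.d_model;
    std::int64_t head = c.tied_output ? 0 : c.vocab_size * c.d_model;
    return embeddings + c.n_layers * block_params(c.d_model) + final_norm + head;
}
```

With the made-up configuration `GptConfig{64, 64, 32, 2, true}`, `count_params` evaluates to 29,568, which is the same order of magnitude as the model described here. The number of attention heads does not appear because splitting the projections into heads does not change the parameter count.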

Yet… it works. Sometimes its output is gibberish. But most of the time, the output is definitely Eliza-like.

The best part? The model is so small, its inference runtime works well when implemented in JavaScript, running in-browser.

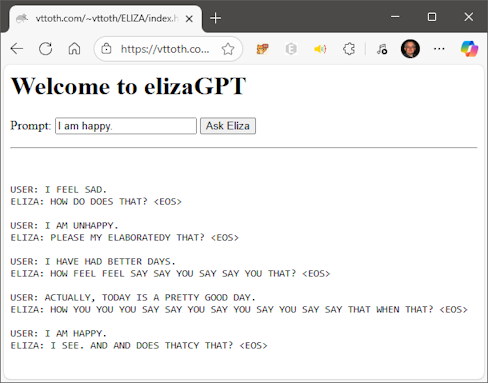

And here is my first ever exchange with the JavaScript implementation, unfiltered and unedited.

No, I am not going to win awards with this chatbot, but the fact that it works at all, and that it successfully learned the basic Eliza-like behavior, is no small potatoes.

For what it’s worth, I was monitoring its training using a little bit of homebrew near-real-time instrumentation. This allowed me to keep an eye on key model parameters and to intervene by adjusting learning rates whenever the training threatened to destabilize the model.
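The intervention described here was done by hand. Purely as an illustration of the idea, the sketch below automates one simple version of it: it keeps a running average of the training loss and cuts the learning rate when the loss spikes or stops being a finite number. The `LrGuard` class, its thresholds and the halving policy are all assumptions, not the instrumentation actually used.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical learning-rate guard; names and thresholds are illustrative only.
class LrGuard {
public:
    double lr;  // current learning rate, read by the training loop

    LrGuard(double initial_lr, double min_lr, double spike_ratio,
            double cut_factor, double beta)
        : lr(initial_lr), min_lr_(min_lr), spike_ratio_(spike_ratio),
          cut_factor_(cut_factor), beta_(beta) {}

    // Feed the loss of the latest training step.
    // Returns true if the learning rate was reduced in response.
    bool observe(double loss) {
        if (!std::isfinite(loss)) {   // NaN or infinity: always treat as instability
            cut();
            return true;
        }
        if (!has_ema_) {              // first observation seeds the baseline
            ema_ = loss;
            has_ema_ = true;
            return false;
        }
        if (loss > spike_ratio_ * ema_) {  // loss jumped well above the baseline
            cut();
            return true;
        }
        // Only non-spike losses are folded into the running average.
        ema_ = beta_ * ema_ + (1.0 - beta_) * loss;
        return false;
    }

private:
    void cut() { lr = std::max(min_lr_, lr * cut_factor_); }

    double min_lr_;       // floor below which the rate is never reduced
    double spike_ratio_;  // loss / baseline ratio that counts as a spike
    double cut_factor_;   // multiplier applied to lr on a spike (e.g. 0.5)
    double beta_;         // smoothing factor of the running average
    double ema_ = 0.0;
    bool has_ema_ = false;
};
```

A training loop would construct something like `LrGuard guard(1e-3, 1e-5, 1.5, 0.5, 0.9);`, call `guard.observe(loss)` after every step, and pass `guard.lr` to the optimizer. A real setup would likely also watch gradient norms and restore a checkpoint after a spike, which this sketch leaves out.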

I am now training a roughly 10 times larger version. I do not yet know if that training will be successful. If it is, I expect its behavior will be more robust, with less gibberish and more Eliza-like behavior.

In the meantime, I can now rightfully claim that I know what I am talking about… after all, I have a C++ implementation, demonstrably working, complete with backpropagation, by way of credentials.

Registered to try it. Our conversation was a brief one:

– Hi, are you familiar with Hungarian poetry?

– Perhaps the answer lies within yourself?

I was immediately convinced that it works in the style of Eliza (and of a number of similar programs from that era and even somewhat later). Though honestly, my understanding of the matter is too naive to judge whether 38k parameters is a lot. To a person not well acquainted with LLM internals it sounds like overkill: the whole Eliza source code is shorter, if I remember correctly :)

Then again, LLMs are definitely not designed to do things economically; the goal is different. So I’d say it’s quite OK.

BTW, if I understood correctly that your site has more sophisticated chat agents, here is what I wanted to ask. Once, in childhood, I stumbled upon two lines from a poem, supposedly by Sándor Petőfi, in translation of course. They go something like “And only in the land where I was born, Sun laughs to me as to his own son”. Regrettably, I was never able to trace the original title or even the author of the translation.

So when I noticed by chance that the website offers Magyar as one of its interface languages, I had the naive idea of solving this old puzzle :) Who knows, perhaps one day you’ll have a model trained specifically on poems.

38k parameters is very tiny for a machine learning model. Of course algorithmic Eliza is much smaller. Here, the idea is to “learn to be like Eliza” from scratch, for a general purpose neural network.

Anyhow, unlike Eliza, I know Petőfi. Hard not to know him if you grew up in Hungary like I did :-)

He wrote many patriotic poems, the most famous among them Nemzeti Dal.

As for the line you quoted: if it was a Russian translation, my best guess is this one.

(You can get Google or ChatGPT to translate it for you.)