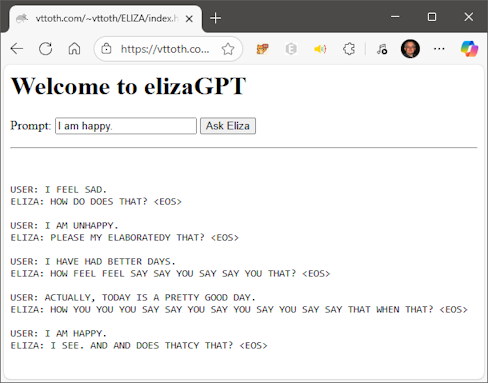

Behind every front-end there is a back-end. My WISPL.COM chatbot is no exception. It’s one thing to provide a nice chatbot experience to my select users. It’s another thing to be able to manage the system efficiently.

Sure, I can, and do, perform management tasks directly in the database, using SQL commands. But it’s inelegant and inconvenient. And just because I am the only admin does not mean I cannot make my own life easier by creating a more streamlined management experience.

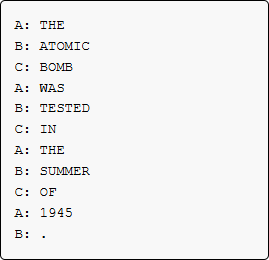

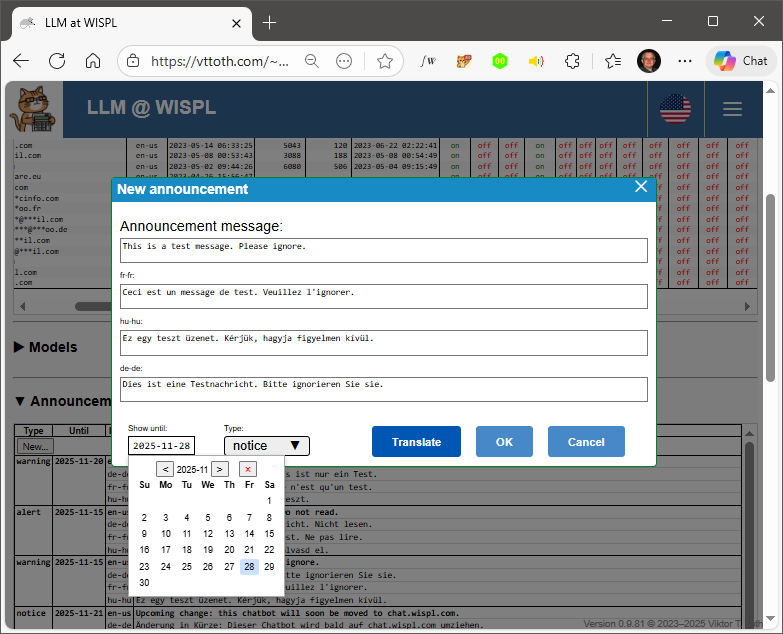

Take announcements. The WISPL chatbot operates in four languages. Creating an announcement entails writing it in a primary language, translating it into three other languages, and then posting the requisite records to the database. Doing it by hand is not hard, but it is a chore.
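To make the "requisite records" step concrete, here is a minimal sketch of what posting one announcement in four languages might look like. Everything specific in it is an assumption for illustration: the table and column names are hypothetical, the language codes are a guess, and SQLite merely stands in for whatever database WISPL actually uses.

```python
import sqlite3

# Assumed language set; the real system's codes may differ.
LANGUAGES = ("en", "de", "fr", "hu")


def create_schema(conn: sqlite3.Connection) -> None:
    """Create a hypothetical two-table layout: one row per announcement,
    plus one row per language holding the text."""
    conn.execute(
        "CREATE TABLE announcements ("
        "id INTEGER PRIMARY KEY, "
        "created_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    conn.execute(
        "CREATE TABLE announcement_texts ("
        "announcement_id INTEGER NOT NULL REFERENCES announcements(id), "
        "lang TEXT NOT NULL, "
        "body TEXT NOT NULL, "
        "PRIMARY KEY (announcement_id, lang))"
    )


def post_announcement(conn: sqlite3.Connection, texts: dict) -> int:
    """Insert one announcement and its per-language texts in one transaction."""
    missing = [lang for lang in LANGUAGES if not texts.get(lang)]
    if missing:
        raise ValueError(f"missing translations: {', '.join(missing)}")
    with conn:  # commits on success, rolls back if an exception is raised
        cur = conn.execute("INSERT INTO announcements DEFAULT VALUES")
        announcement_id = cur.lastrowid
        conn.executemany(
            "INSERT INTO announcement_texts (announcement_id, lang, body) "
            "VALUES (?, ?, ?)",
            [(announcement_id, lang, texts[lang]) for lang in LANGUAGES],
        )
    return announcement_id
```

Doing this by hand means one insert per language for every announcement, which is exactly the kind of repetition a small admin UI can take over.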

Well, not anymore. I just created a nice back-end UI for this purpose. By itself it’s no big deal of course, but it’s the first time the software itself uses a large language model for a targeted purpose.

Note the highlighted Translate button. It sends the English-language text to a local copy of Gemma, Google’s open-weights LLM. Gemma is small but very capable. Among other things, it can produce near-flawless translations not just into German or French, but even into Hungarian.
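What happens behind a Translate button like this can be sketched roughly as follows. This is not the actual WISPL code: the prompt wording, the language list, and the function names are all assumptions, and the call to the model is left as a plain callable (`generate`) because how the local Gemma instance is served is not described here.

```python
from typing import Callable, Dict

# Assumed target languages; hypothetical codes mapped to names for the prompt.
TARGETS = {"de": "German", "fr": "French", "hu": "Hungarian"}


def build_prompt(text: str, language_name: str) -> str:
    """Build a single-purpose translation prompt (wording is illustrative)."""
    return (
        f"Translate the following announcement from English into {language_name}. "
        "Reply with the translation only.\n\n" + text
    )


def translate_all(english_text: str, generate: Callable[[str], str]) -> Dict[str, str]:
    """Return the announcement in every language, keyed by language code.

    `generate` is any function that sends a prompt to the local model and
    returns its reply as a string.
    """
    result = {"en": english_text}
    for code, name in TARGETS.items():
        result[code] = generate(build_prompt(english_text, name)).strip()
    return result


if __name__ == "__main__":
    # Stand-in for the real model call, so the sketch runs without a model.
    def fake_generate(prompt: str) -> str:
        return "[translated] " + prompt.split("\n\n", 1)[1]

    for lang, body in translate_all("Maintenance tonight.", fake_generate).items():
        print(lang, body)
```

Keeping the model call behind a plain function makes it easy to swap in a different local model later, or to test the UI without one running at all.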

This back-end also lets me manage WISPL chatbot users as well as the language models themselves. It shows system logs, too.