A friend of mine challenged me. After telling him how I was able to implement some decent neural network solutions with the help of LLMs, he asked: Could the LLM write a neural network example in Commodore 64 BASIC?

You betcha.

Well, it took a few attempts — there were some syntax issues and some oversimplifications so eventually I had the idea of asking the LLM to just write the example on Python first and then use that as a reference implementation for the C64 version. That went well. Here’s the result:

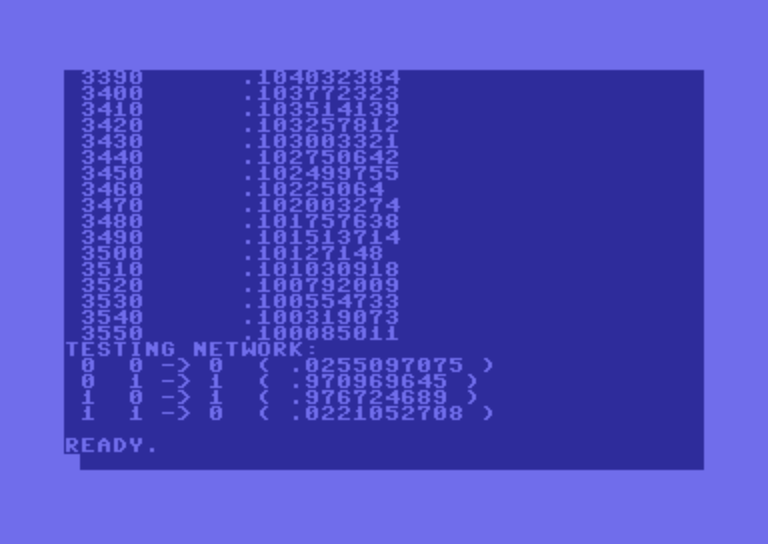

As this screen shot shows, the program was able to learn the behavior of an XOR gate, the simplest problem that requires a hidden layer of perceptrons, and as such, a precursor to modern “deep learning” solutions.

I was able to run this test on Krisztián Tóth’s (no relation) excellent C64 emulator, which has the distinguishing feature of reliable copy-paste, making it possible to enter long BASIC programs without having to retype them or somehow transfer them to a VIC-1541 floppy image first.

In any case, this is the program that resulted from my little collaboration with the Claude 3.7-sonnet language model:

10 REM NEURAL NETWORK FOR XOR PROBLEM

20 REM BASED ON WORKING PYTHON IMPLEMENTATION

100 REM INITIALIZE VARIABLES

110 DIM X(3,1) : REM INPUT PATTERNS

120 DIM Y(3) : REM EXPECTED OUTPUTS

130 DIM W1(1,1) : REM WEIGHTS: INPUT TO HIDDEN

140 DIM B1(1) : REM BIAS: HIDDEN LAYER

150 DIM W2(1) : REM WEIGHTS: HIDDEN TO OUTPUT

160 DIM H(1) : REM HIDDEN LAYER OUTPUTS

170 DIM D1(1,1) : REM PREVIOUS DELTA FOR W1

180 DIM B2 : REM BIAS: OUTPUT LAYER

190 DIM D2(1) : REM PREVIOUS DELTA FOR W2

200 DIM DB1(1) : REM PREVIOUS DELTA FOR B1

210 DB2 = 0 : REM PREVIOUS DELTA FOR B2

220 LR = 0.5 : REM LEARNING RATE

230 M = 0.9 : REM MOMENTUM

300 REM SETUP TRAINING DATA (XOR PROBLEM)

310 X(0,0)=0 : X(0,1)=0 : Y(0)=0

320 X(1,0)=0 : X(1,1)=1 : Y(1)=1

330 X(2,0)=1 : X(2,1)=0 : Y(2)=1

340 X(3,0)=1 : X(3,1)=1 : Y(3)=0

400 REM INITIALIZE WEIGHTS RANDOMLY

410 FOR I=0 TO 1

420 FOR J=0 TO 1

430 W1(I,J) = RND(1)-0.5

440 NEXT J

450 B1(I) = RND(1)-0.5

460 W2(I) = RND(1)-0.5

470 NEXT I

480 B2 = RND(1)-0.5

510 REM INITIALIZE MOMENTUM TERMS TO ZERO

520 FOR I=0 TO 1

530 FOR J=0 TO 1

540 D1(I,J) = 0

550 NEXT J

560 D2(I) = 0

570 DB1(I) = 0

580 NEXT I

590 DB2 = 0

600 REM TRAINING LOOP

610 PRINT "TRAINING NEURAL NETWORK..."

620 PRINT "EP","ER"

630 FOR E = 1 TO 5000

640 ER = 0

650 FOR P = 0 TO 3

660 GOSUB 1000 : REM FORWARD PASS

670 GOSUB 2000 : REM BACKWARD PASS

680 ER = ER + ABS(O-Y(P))

690 NEXT P

700 IF (E/10) = INT(E/10) THEN PRINT E,ER

710 IF ER < 0.1 THEN E = 5000

720 NEXT E

800 REM TEST NETWORK

810 PRINT "TESTING NETWORK:"

820 FOR P = 0 TO 3

830 GOSUB 1000 : REM FORWARD PASS

840 PRINT X(P,0);X(P,1);"->"; INT(O+0.5);" (";O;")"

850 NEXT P

860 END

1000 REM FORWARD PASS SUBROUTINE

1010 REM CALCULATE HIDDEN LAYER

1020 FOR I = 0 TO 1

1030 S = 0

1040 FOR J = 0 TO 1

1050 S = S + X(P,J) * W1(J,I)

1060 NEXT J

1070 S = S + B1(I)

1080 H(I) = 1/(1+EXP(-S))

1090 NEXT I

1100 REM CALCULATE OUTPUT

1110 S = 0

1120 FOR I = 0 TO 1

1130 S = S + H(I) * W2(I)

1140 NEXT I

1150 S = S + B2

1160 O = 1/(1+EXP(-S))

1170 RETURN

2000 REM BACKWARD PASS SUBROUTINE

2010 REM OUTPUT LAYER ERROR

2020 DO = (Y(P)-O) * O * (1-O)

2030 REM UPDATE OUTPUT WEIGHTS WITH MOMENTUM

2040 FOR I = 0 TO 1

2050 DW = LR * DO * H(I)

2060 W2(I) = W2(I) + DW + M * D2(I)

2070 D2(I) = DW

2080 NEXT I

2090 DW = LR * DO

2100 B2 = B2 + DW + M * DB2

2110 DB2 = DW

2120 REM HIDDEN LAYER ERROR AND WEIGHT UPDATE

2130 FOR I = 0 TO 1

2140 DH = H(I) * (1-H(I)) * DO * W2(I)

2150 FOR J = 0 TO 1

2160 DW = LR * DH * X(P,J)

2170 W1(J,I) = W1(J,I) + DW + M * D1(J,I)

2180 D1(J,I) = DW

2190 NEXT J

2200 DW = LR * DH

2210 B1(I) = B1(I) + DW + M * DB1(I)

2220 DB1(I) = DW

2230 NEXT I

2240 RETURN

The one proverbial fly in the ointment is that it took about two hours for the network to be trained. The Python implementation? It runs to completion in about a second.

That’s very interesting as it touches a few things about which I have curiosity too.

I wonder why basic implementation is so slow. Of course I know computers of the era were not fast, and interpreted basic was not either. Still there seem to be 5000 iterations, each of them touching just a handful of neurons… more the second for the iteration! Perhaps I need to try it myself (additionally to try this curious emulator).

Next the task for neural network looks bit too simple – not in its simplicity itself, but in amount of various combinations of inputs and outputs. 5000 iterations look bit too much to train so few combinations.

I remember conceiving similar experiment, but in my case NN was to train predicting the value of sine function at X given three values at X-3, X-2 and X-1. As I’m quite naive in the math of NNs I trained it with simple random search. Here inputs may get any values in range -1 … +1, but as far as I remember training was working fine. Later I made a small problem based on this (link removed as unnecessary for my comment “appears to be spam”).

But returning to simplicity – I guess “neural network mimicking XOR” is some sample problem easily found over internet. Could we pick some other function for which, hopefully, LLM is less likely to find a ready example?

I recently asked “deepseek” to compile assembly code (8051 syntax) to hex file. To my surprise it immediately produced output, even richly commenting about the process. Of course my surprise subsided when I found the output being quite buggy, as if glued from pieces remotely looking like suitable. Lines have incorrect check sum or even lack it, from some point code completely diverts from the source. I asked it to fix checksums – it said “yes, sure, ok” and produced the same output. I asked why it is the same, and got answer like “sure, you was correct that it should be different, but I am correct to produce it the same”. Thus I grew more curious about cornering LLM’s with task variations for which they seemingly are not able to correctly construct the output.

You might be underestimating just how slow 8-bit processors are. The 6510 in the C64 had a clock of 1 MHz and its fastest instructions took two clock cycles, some 3 or 4 as I recall. So at most maybe 300,000 or 400,000 machine instructions a second. And it had no floating point, so floating point operations, even a simple multiplication, took hundreds of clock cycles; division, a lot more. So at most, a few hundred floating point instructions per second. And then there’s the BASIC interpreter: BASIC instructions were tokenized, but otherwise the program was read character-by-character, interpreted and executed. So do not expect in the end more than a few dozen BASIC instructions executed per second… which is consistent with the fact that in this example, each iteration took a couple of seconds, so 3,000+ iterations took a couple of hours. A hand-optimized machine language implementation could have sped that up by at least an order of magnitude; replacing floating point weights and biases with 8-bit integers would have sped things up by maybe even as much as two orders of magnitude. So a hand-optimized, machine-language version could have executed in seconds. But not the BASIC version.

The significance of XOR has to do with a 1969 paper by Minsky and Papert who proved that a single-layer network of perceptrons cannot model the XOR operation. Many took it as an indication that neural networks are inherently limited, and this paper is often mentioned as one of the reasons why machine learning was held back by years if not decades. With a hidden layer, the neural network can learn XOR just fine, but this was missed, not so much by Minsky and Papert but by those who read and interpreted their paper.

Language models are NOT reasoning engines or machine language compilers. They’ll happily oblige when you ask them but they’ll likely produce garbage. Value them for their breadth of knowledge and ability to understand concepts in context, do not expect them to be meticulous algorithmic engines: That, they are not.