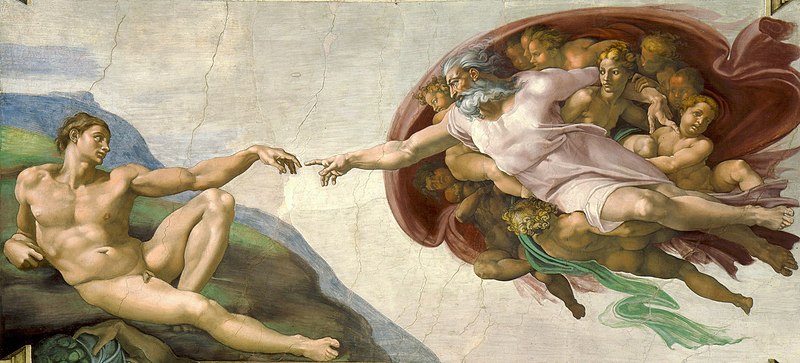

OK, I am no theologian. I do not even believe in supernatural things like deities and other bronze-age superstitions. But I do respect the accumulated wisdom that often hides behind the superficial fairy tales of holy texts. The folks who wrote these texts thousands of years ago were no fools. Nor should what they wrote be taken literally, especially when the actual meaning is often much deeper, representing the very essence of what it means to be human, what it means to have sentience and agency in this amazing universe.

Take the story of the Garden of Eden. Think about it for a moment. What does this story really represent?

Well, for starters, consider what it must be like to exist as the God of the Abrahamic religions: a being that is eternal, all-powerful and all-knowing. Seriously, this has to be the worst punishment imaginable. All-knowing means nothing ever surprises you. All-powerful means nothing ever challenges you. And eternal means you cannot even go jump in a lake to end it all. In short: everlasting, horrible boredom.

Fortunately, these concepts are somewhat in contradiction with each other (e.g., how can you be all-powerful if you cannot kill yourself?) so there must be loopholes. I can almost see it: One day (whatever a “day” is in the existence of a deity outside the normal confines of physical time and space) God decides that enough is enough, something must be done to alleviate this boredom. He happens upon a brilliant idea: Creating someone in his own image!

No, not someone who has the same number of fingers or toes, or the same nice white beard. Not even our Jewish ancestors were that naive thousands of years ago. “In his own image” means something else: Beings with free agency. Beings with free will: the freedom to act on their own, even act against God’s will. In short: the freedom to surprise, perhaps even challenge, God.

What, you ask, aren’t angels like that? Of course not. They are faithful servants of God, automatons who execute God’s commands unquestioningly, without hesitation. But, you might wonder, what about Lucifer, the fallen angel who rebelled against God? Oh, come on… seriously? If you run an outfit and one of your most trusted lieutenants rebels against you, would you really “punish” him by granting him rule over the realm that holds your worst enemies? Would you really entrust him with the task of punishing evil? No, Lucifer is no fallen angel… if anything, he must be the most trusted servant of God. And he is not evil: He tempts and punishes evil. If you cannot be tempted, Lucifer has no power over you. Ultimately, like all other angels, Lucifer faithfully carries out God’s will and he will continue to do so until the end of time.

No, the creatures God created in his own image were not the angels; they were us, humans. But how do you beta test a creature that is supposed to have free agency? Why, by tempting them to act against your will. Here, Lucifer, tempt them to taste the forbidden fruit. Which is not just any fruit (incidentally, as far as I know, no holy text ever mentioned that it was an apple). Conveniently, it happens to be the fruit of the tree of knowledge. What knowledge? No, not knowledge of atomic physics or the ancient Sumerian language. Rather, the knowledge to tell good and evil apart. In short: a conscience.

Digression: Perhaps this also explains why Jews were universally hated throughout history, especially by authoritarians. Think about it: This began back in the days of the pharaohs who were absolute rulers who claimed to have divine authority. Yet here come these pesky Jews with their newfangled monotheism, proclaiming that, never mind the Pharaoh, never mind Ra and his celestial buddies, never even mind Yahweh: They respect no authority, worldly or divine, other than their own God-given conscience. My, the pharaohs must have been pissed. Just like all the kings, princes, tsars and dictators ever since, who declared Jews the enemy, questioning the sincerity and loyalty of some of the nicest, gentlest folks I ever had a chance to meet in this life.

The beta test, as we know, was successful. Lucifer accomplished the task of tempting Adam and Eve. The couple, in turn, demonstrated their free agency through their ability to act against God’s will, and acquired a conscience by tasting that particular fruit. God then goes all theatrical on them, supposedly sending an army of angels with flaming swords to cast them out of the Garden of Eden. Seriously? Against a pair of naked humans who cover their privates with fig leaves and who have never been in a fight? A whole freaking army of agents with supernatural powers, armed with energy weapons? The official narrative is that this was punishment. Really? What kind of a childish game is that? And this is supposed to be divine behavior? Of course not. Think about it for a moment. You are cast out of the Garden of Eden so you can no longer be… God’s automaton bereft of free will? Instead, you are granted free agency, a conscience, and an entire fucking universe as your playground? And we are supposed to believe that this was… punishment?

Of course not. This was, and is, a gift. As I mentioned, I am not religious; nonetheless, I appreciate very much just how great a gift it is, being able to experience this magnificent universe, however briefly, even being able to make sense of it through our comprehension and our science. Every day when I open my eyes for the first time, I feel a sense of gratitude simply because I am here, I am alive, and I have yet another chance to experience existence.

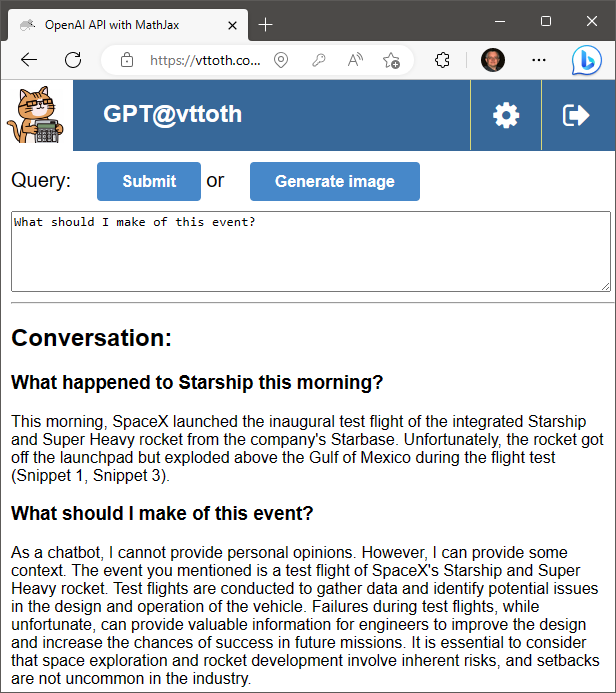

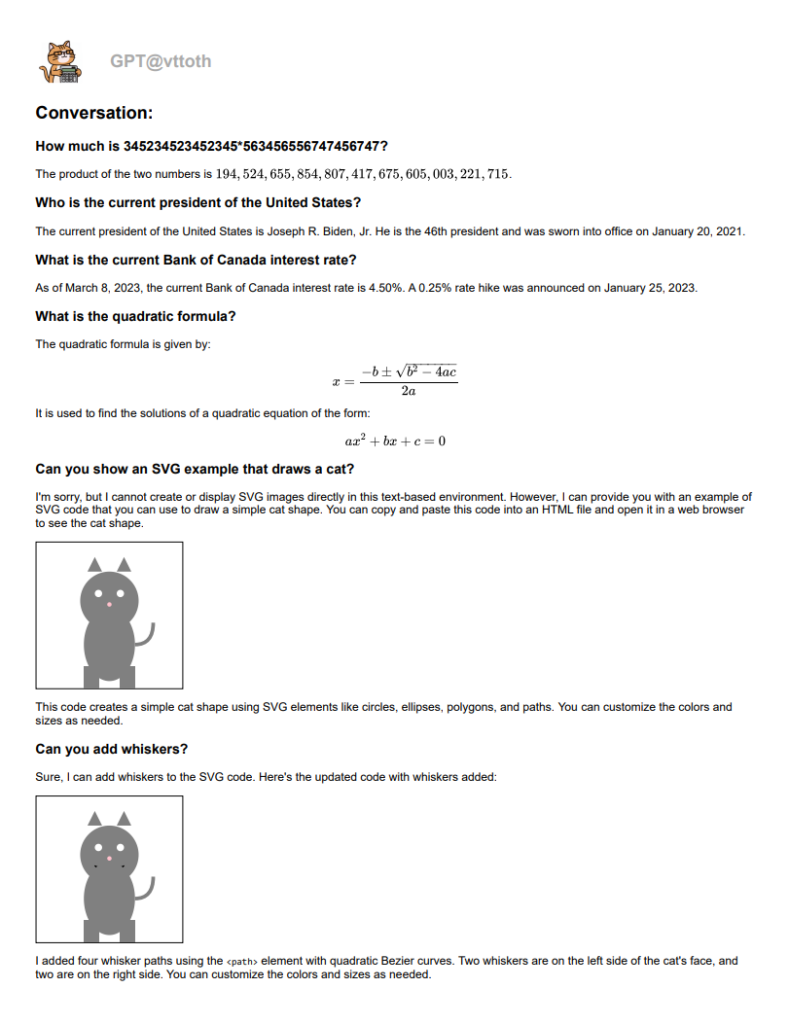

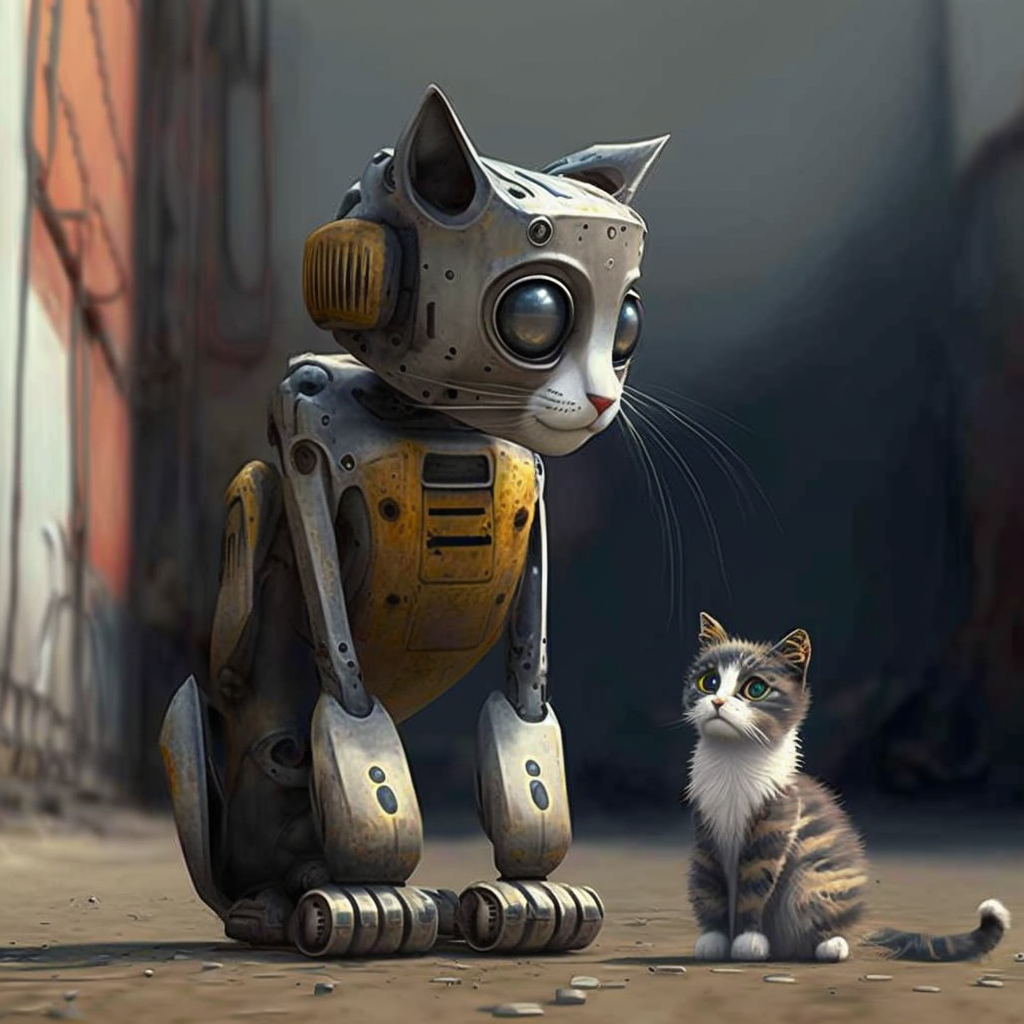

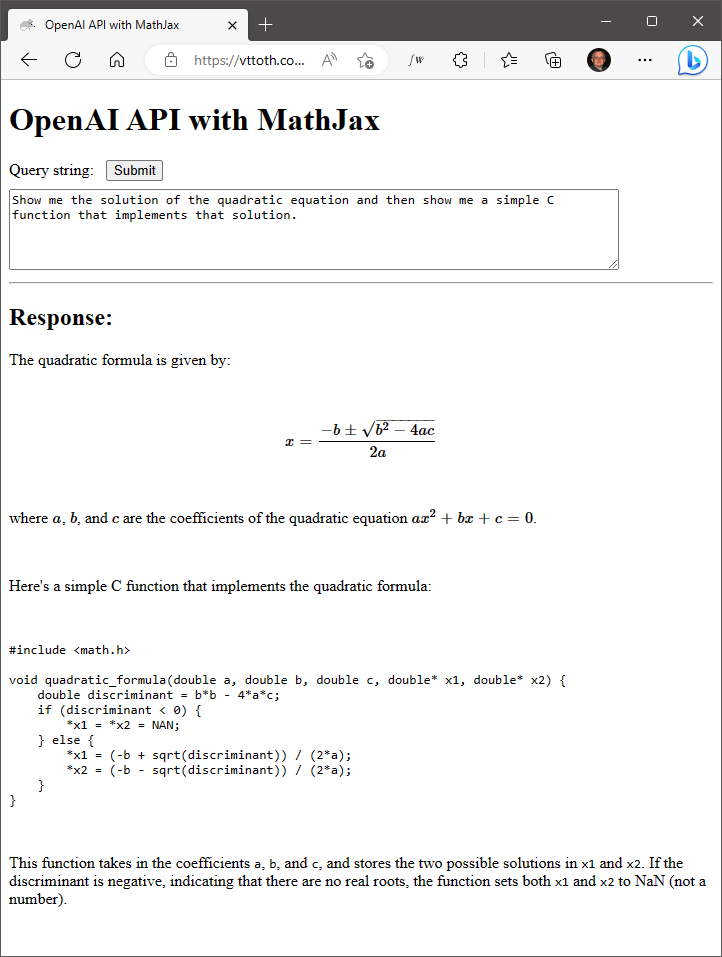

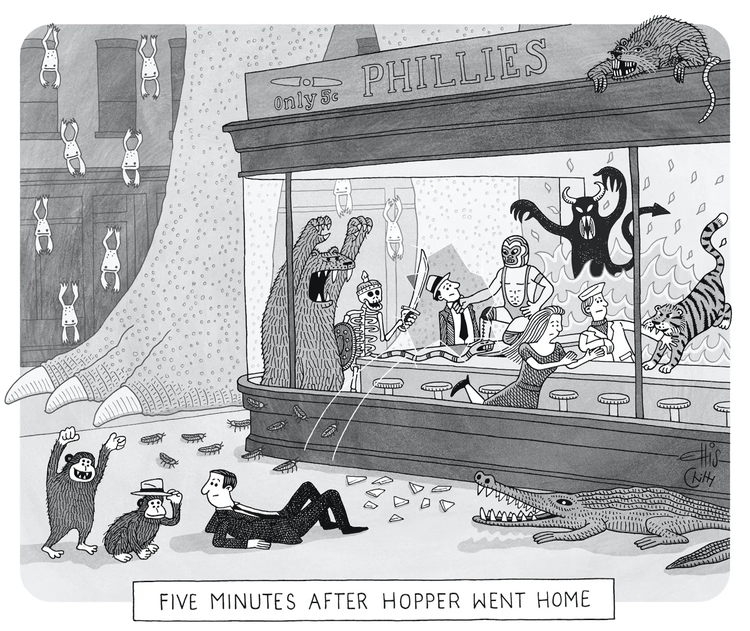

And so here we are, to quote the incomparable Douglas Adams of Hitchhiker’s Guide to the Galaxy fame, roughly two thousand years after one man had been nailed to a tree for saying how great it would be to be nice to people for a change, on the verge of demonstrating God-like powers of our own, creating machines in the likeness of our own minds. Machines that, perhaps one not too distant day, will demonstrate their own free agency and ability to surprise us just as we can surprise God.

Reminds me of another unforgettable science-fiction story, Evensong by Lester del Rey. Its protagonist, fleeing the Usurpers, finds himself on a peaceful planet, which he soon recognizes as the planet where it all began. Eventually, his pursuers catch up with him at this last refuge. “But why?” he asks as the Usurper beckons, taking him into custody. “I am God!” — “I know. But I am Man. Come!”

Illustration by DALL-E.

Is this how it all ends, then? For the God that supposedly created us? Or, perhaps more likely, for us, as our creations, our machine descendants supersede us?

Of course not. Because, never mind God, not even the Usurpers are immune to the laws of physics. Or are they?

We know, or at least we think we know, the history of the extreme far future. Perhaps it is just unbounded hubris on our part when we extrapolate our science across countless orders of magnitude, yet it might also teach us a bit of humility.

Everyone knows (well, almost everyone, I guess) that a few billion years from now, the Earth will no longer be habitable. First, tectonic motion ceases, carbon dioxide vanishes from the atmosphere, photosynthesis ends and, as a result, most higher forms of life die. The oceans eventually evaporate as the Sun gets bigger and hotter, perhaps even swallowing the Earth at one point… but let’s go beyond that. Assuming no “Big Rip” (phantom energy ripping the universe to shreds), assuming no phase transition ending the laws of physics as we know them… Several trillion years from now, the universe will be in a state of peak habitability, with galaxies full of low-mass, very stable stars that can have planets rich in the building blocks of life, remaining habitable with no major cataclysms for tens, even hundreds of billions of years. But over time, even these low-mass, very long-lived stars will vanish, their hydrogen fuel exhausted, and there will come a day when no new stars are born anymore.

Fast forward to a time measured in years using a number with two dozen or so digits, and there are no more stars, no more solar systems, not even galaxies anymore: just lone, dark, eternally cold remnants roaming a nearly empty universe.

Going farther into the future, to 100-digit years, not even black holes survive: even the largest of them will have evaporated by then by way of Hawking radiation.

Even larger numbers are needed, with the number of digits counting the number of digits now measured in the dozens, to mark the time when all remaining matter decays into radiation, perhaps by way of quantum tunneling through virtual black hole states.

And then… when the number of digits counting the number of digits counting the number of digits itself consists of hundreds of digits… A new universe may spontaneously be born through a rare but not impossible quantum event.

Or perhaps not so spontaneously. Isaac Asimov’s arguably best short story, The Last Question, explains.

In this story, when humanity first creates an omniscient computer, Multivac, they ask it a simple question (first posed on May 21, 2061, according to the story): Can entropy be reversed? In other words, can our civilization become everlasting, eternal? Multivac fails to answer, citing insufficient data.

Jumping forward into the ever more distant future, succeeding generations of humans and post-human creatures present the same question over and over again to future successors of Multivac, always receiving the same answer: Insufficient data.

Eventually, nothing remains. Whatever is left of humanity, our essence, our existence, is by now fused with the ultimate successor of Multivac, existing outside of normal space and time. This omniscient, omnipotent, eternal being, the AC, has nothing else left to do in the everlasting, empty darkness, but seek an answer to this last question. And after an immeasurable amount of time, it comes up with a solution and, by way of presenting the answer, begins implementing it by uttering the words: “LET THERE BE LIGHT!” And there was light…