While much of the media is busy debating how the United States already “lost” a cyberwar with North Korea, or how it should respond decisively (I agree), a few have begun to discuss the possible liability of SONY itself in the hack.

The latest news is that the hackers stole a system administrator’s credentials. Armed with these credentials, they were able to roam SONY’s corporate network freely, and over the course of several months they stole over 10 terabytes (!) of data.

Say what? Root password? Months? Terabytes?

OK, I am going to go out on a limb here. I know nothing about SONY’s IT security, the people who work there, their training or responsibilities. And of course it wouldn’t be the first time for the media to get even basic facts wrong.

Still, the magnitude of the hack is evident. It had to take a considerable amount of time to steal all that data and do all that damage.

Which could not possibly have happened if SONY’s IT security folks actually knew what they were doing.
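To put a rough number on "a considerable amount of time", here is a back-of-the-envelope sketch. The sustained transfer rates are assumed purely for illustration; nothing has been reported about how fast the data actually left SONY's network, and `transfer_days` is just a helper name made up for this example.

```python
def transfer_days(total_bytes, link_mbit_per_s):
    """Days needed to move total_bytes at a sustained rate given in Mbit/s."""
    seconds = total_bytes * 8 / (link_mbit_per_s * 1_000_000)
    return seconds / 86_400  # seconds per day


TEN_TB = 10 * 10**12  # the reported 10 terabytes, taken as decimal units

# Assumed sustained rates (Mbit/s); these are illustrative, not reported.
for rate in (10, 100, 1000):
    print(f"{rate:>5} Mbit/s: {transfer_days(TEN_TB, rate):6.1f} days")
```

At a steady 10 Mbit/s this works out to roughly three months; at 100 Mbit/s, about nine days; even at a full gigabit per second, most of a day. Either the transfer was slow and went unnoticed for a very long time, or it was fast and should have stood out in the traffic statistics.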

Not that I am surprised. SONY is not alone in this regard; everywhere I turn, corporations, government departments, you name it, I see the same thing. Security, all too often, is about harassing or hindering legitimate users. No, you cannot have an EXE attachment in your e-mail! No, you cannot install that shrink-wrapped software on your workstation! No, we cannot let you open TCP port 12345 on that experimental server!

Users are pesky creatures and most of them actually find ways to get their work done. Yes, their work. This is not about evil corporate overlords not letting you update your Facebook status or watch funny cat videos on YouTube. This is about being able to accomplish tasks that you are paid to do.

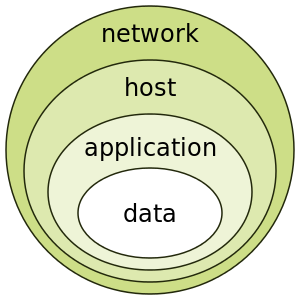

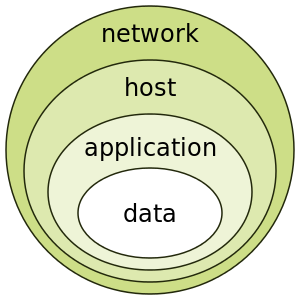

Unfortunately, when it comes to IT security, a flawed mentality is all too prevalent. Even on Wikipedia. Look at this diagram, for instance, illustrating the notion of defense in depth:

This, I would argue, is a very narrow-minded view of IT security in general, and of the concept of defense in depth in particular. To me, defense in depth means a lot more than merely deploying technologies to protect data through its life cycle. Here are a few concepts:

- Partnership with users: Legitimate users are not the enemy! Your job is to help them accomplish their tasks safely, not to become Mordac the Preventer from the Dilbert comic strip. Users can be educated, but they can also be part of your security team, for instance by alerting you when something is not working quite the way it was expected.

- Detection plans and strategies: Recognize that, especially if your organization is prominently exposed, the question is not if but when. You will get security breaches. How do you detect them? What are the redundant technologies and methods (including organization and education) that you use to make sure that an intrusion is detected as early as possible, before too much harm is done?

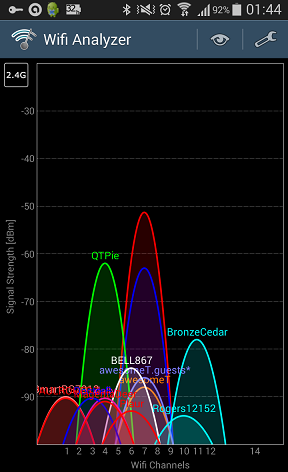

- Mitigation and recovery: Suppose you detect an intrusion. What do you do? Perhaps it’s a good idea to place a “don’t panic” sticker on the cover page of your mitigation and recovery plan. That’s because one of the worst things you can do in these cases is a knee-jerk panic response shutting down entire corporate systems. (Such a knee-jerk reaction is also ripe for exploitation. For instance, a hacker might compromise the open Wi-Fi of the coffee shop across the street from your headquarters before hacking into your corporate network, intentionally in such a way that it would be discovered, counting on the knee-jerk response that would drive employees in droves across the street to get their e-mails and get urgent work done.)

- Compartmentalization. I don’t care if you are the most trusted system administrator on the planet. It does not mean that you need to have access to every hard drive, every database or every account on the corporate network. The tools (encrypted databases, disk-level encryption, granulated access control lists) are all there: use them. Make sure that even if Kim Jong-un’s minions steal your root password, they still wouldn’t be able to read data from the corporate mail server or download confidential files from corporate systems.
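The compartmentalization idea can be made concrete with a tiny deny-by-default access check. This is only a sketch: the role names, the resource names, and the `is_allowed` helper are all hypothetical, and a real deployment would rely on the access controls of the operating system, database, or mail server rather than a Python dictionary.

```python
# Hypothetical access control list: (resource, action) -> roles allowed.
# Anything not listed here is denied.
ACL = {
    ("mail-server", "restart"): {"sysadmin"},
    ("mail-server", "patch"): {"sysadmin"},
    ("mail-store", "read"): {"mail-service"},
    ("hr-files", "read"): {"hr-staff"},
}


def is_allowed(roles, resource, action):
    """Deny by default: allow only if some role is explicitly listed."""
    allowed_roles = ACL.get((resource, action), set())
    return bool(allowed_roles & set(roles))


# A stolen sysadmin credential can restart the mail server...
print(is_allowed({"sysadmin"}, "mail-server", "restart"))
# ...but cannot read the mail itself or the HR files.
print(is_allowed({"sysadmin"}, "mail-store", "read"))
print(is_allowed({"sysadmin"}, "hr-files", "read"))
```

The point of the design is that being an administrator is a role with specific permissions, not a master key. Whoever steals that role inherits only what is explicitly listed for it.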

SONY’s IT department probably failed on all these counts. OK, I am not sure about #1, as I never worked at SONY, but why would they be any different from other corporate environments? As to #2, the failure is obvious: it must have taken weeks if not months for the hackers to extract the reported 10 terabytes. They very obviously failed on #3, and if the media reports about a system administrator’s credentials are true, #4 as well.
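On the detection point, even a very crude check on outbound traffic volume illustrates what "detected as early as possible" could mean in practice. The sketch below is an assumption-laden toy, not a description of any real monitoring product: the function name, the seven-day window, the factor of five, and the sample numbers are all invented for illustration.

```python
from statistics import median


def flag_unusual_egress(daily_gb, window=7, factor=5.0):
    """Return the indices of days whose outbound volume exceeds
    `factor` times the median of the preceding `window` days."""
    flagged = []
    for day in range(window, len(daily_gb)):
        baseline = median(daily_gb[day - window:day])
        if baseline > 0 and daily_gb[day] > factor * baseline:
            flagged.append(day)
    return flagged


# Made-up outbound volume for one host, in gigabytes per day.
sample = [2, 3, 2, 4, 3, 2, 3, 3, 40, 2]
print(flag_unusual_egress(sample))  # → [8]
```

A patient attacker who trickles data out slowly would slip under a threshold like this, which is exactly why detection needs redundant methods rather than a single tripwire.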

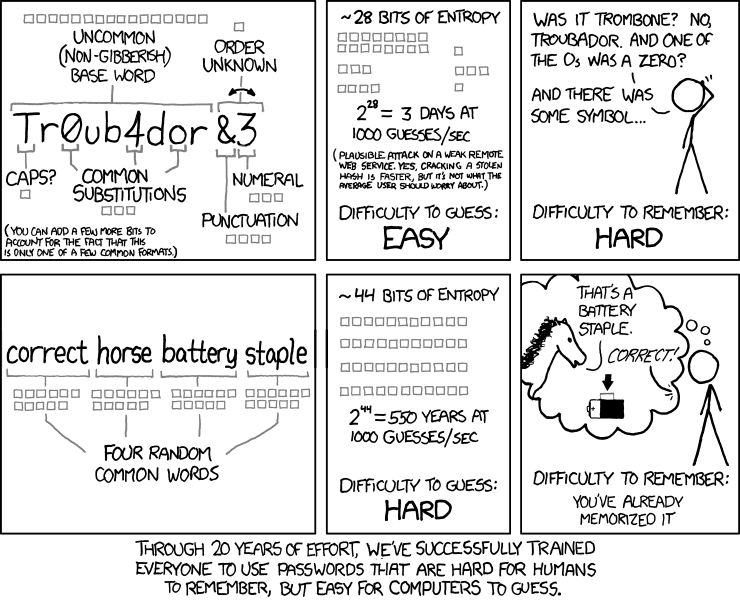

Just to be clear, I am not trying to blame the victim here. When your attackers have the resources of a nation state at their disposal, it is a grave threat. But this is why IT security folks get the big bucks. I can easily see how, equipped with the resources of a nation state, the attackers were able to deploy zero-day exploits and other, perhaps previously unknown techniques that would have defeated technological barriers. (Except that maybe they didn’t… the reports say that they stole user credentials and, I am guessing, there is a good chance that they used social engineering, not advanced technology.) But it’s one thing to be the victim of a successful attack; it’s quite another not to be able to detect it, mitigate it, or recover from it. This is where IT security folks should shine, not in harassing users about EXE attachments or with asinine password expiration policies.
