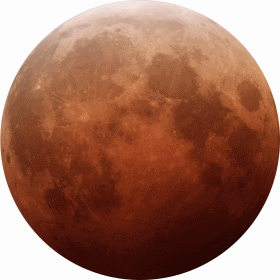

The other day, I ran across a question on Quora: Can you focus moonlight to start a fire?

The question actually had an answer on xkcd, and it’s a rare case of an incorrect xkcd answer. Or rather, it’s an answer that reaches the correct conclusion but follows invalid reasoning. As a matter of fact, they almost get it right, but miss an essential point.

The xkcd answer tells you that “You can’t use lenses and mirrors to make something hotter than the surface of the light source itself”, which is true, but it neglects the fact that in this case, the light source is not the Moon but the Sun. (OK, they do talk about it but then they ignore it anyway.) The Moon merely acts as a reflector. A rather imperfect reflector to be sure (and this will become important in a moment), but a reflector nonetheless.

But first things first. For our purposes, let’s just take the case when the Moon is full and let’s just model the Moon as a disk for simplicity. A disk with a diameter of \(3,474~{\rm km}\), located \(384,400~{\rm km}\) from the Earth, and bathed in sunlight, some of which it absorbs, some of which it reflects.

The Sun has a radius of \(R_\odot=696,000~{\rm km}\) and a surface temperature of \(T_\odot=5,778~{\rm K}\), and it is a near-perfect blackbody. The Stefan-Boltzmann law tells us that its emissive power is \(j^\star_\odot=\sigma T_\odot^4\sim 6.32\times 10^7~{\rm W}/{\rm m}^2\) (\(\sigma=5.670373\times 10^{-8}~{\rm W}/{\rm m}^2/{\rm K}^4\) is the Stefan-Boltzmann constant).

The Sun is located \(1~{\rm AU}\) (astronomical unit, \(1.496\times 10^{11}~{\rm m}\)) from the Earth. Multiplying the emissive power by \(R_\odot^2/(1~{\rm AU})^2\) gives the “solar constant”, a.k.a. the irradiance (the terminology really is confusing): approx. \(I_\odot=1368~{\rm W}/{\rm m}^2\), which is the amount of solar power per unit area received here in the vicinity of the Earth.
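As a quick sanity check on the two numbers above, here is a minimal Python sketch. The constant and function names (`SIGMA`, `emissive_power`, `irradiance_at`) are mine, chosen for illustration; the input values are the ones quoted in the text.

```python
# Values quoted in the text; the names are illustrative assumptions.
SIGMA = 5.670373e-8   # Stefan-Boltzmann constant, W/m^2/K^4
T_SUN = 5778.0        # solar surface temperature, K
R_SUN = 6.96e8        # solar radius, m
AU = 1.496e11         # astronomical unit, m


def emissive_power(temperature_k):
    """Power radiated per unit area by a blackbody (Stefan-Boltzmann law)."""
    return SIGMA * temperature_k ** 4


def irradiance_at(distance_m, radius_m, temperature_k):
    """Flux at a given distance from a spherical blackbody.

    The emissive power is diluted by the inverse-square factor (R/d)^2.
    """
    return emissive_power(temperature_k) * (radius_m / distance_m) ** 2


j_sun = emissive_power(T_SUN)
i_sun = irradiance_at(AU, R_SUN, T_SUN)

print(f"Solar emissive power: {j_sun:.3e} W/m^2")
print(f"Solar constant at 1 AU: {i_sun:.0f} W/m^2")
```

Both results should land within rounding of the figures in the text.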

The Moon's albedo determines the fraction of incident sunshine that it reflects. For the Moon, it is \(\alpha_\circ=0.12\), which means that 88% of incident sunshine is absorbed, and then re-emitted in the form of heat (thermal infrared radiation). Assuming that the Moon is a perfect infrared emitter, we can easily calculate its surface temperature \(T_\circ\), since the radiation it emits (according to the Stefan-Boltzmann law) must be equal to what it receives:

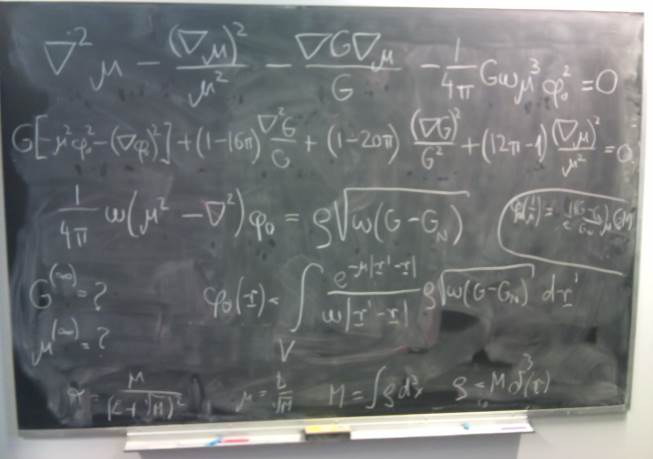

\[\sigma T_\circ^4=(1-\alpha_\circ)I_\odot,\]

from which we calculate \(T_\circ\sim 382~{\rm K}\) or about 109 degrees Centigrade.
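The balance equation above is easy to check numerically. This is only a sketch under the same assumptions as the text (flat disk, perfect infrared emitter); the helper name `equilibrium_temperature` is my own.

```python
SIGMA = 5.670373e-8   # Stefan-Boltzmann constant, W/m^2/K^4
I_SUN = 1368.0        # solar constant, W/m^2
ALBEDO_MOON = 0.12    # fraction of sunlight the Moon reflects


def equilibrium_temperature(absorbed_flux):
    """Temperature at which a perfect emitter radiates exactly absorbed_flux."""
    return (absorbed_flux / SIGMA) ** 0.25


# The Moon absorbs the fraction (1 - albedo) of the incident sunlight.
t_moon = equilibrium_temperature((1.0 - ALBEDO_MOON) * I_SUN)
t_moon_celsius = t_moon - 273.15

print(f"Lunar surface temperature: {t_moon:.0f} K ({t_moon_celsius:.0f} C)")
```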

It is indeed impossible to use any arrangement of infrared optics to focus this thermal radiation on an object and make it hotter than 109 degrees Centigrade. That is because the best we can do with optics is to make sure that the object on which the light is focused “sees” the Moon’s surface in all sky directions. At that point, it would end up in thermal equilibrium with the lunar surface. Any other arrangement would leave some of the deep sky exposed, and our object’s temperature would then be determined by the balance between the lunar thermal radiation it receives and the thermal radiation it loses to deep space.

But the question was not about lunar thermal infrared radiation. It was about moonlight, which is reflected sunlight. Why can we not focus moonlight? It is, after all, reflected sunlight. And even if it is diminished by 88%… shouldn’t the remaining 12% be enough?

Well, if we can focus sunlight on an object through a filter that reduces the intensity by 88%, so that the object sees the filtered Sun in all sky directions, the object’s temperature is given by

\[\sigma T^4=\alpha_\circ\sigma T_\odot^4,\]

which is easily solved to give \(T=3401~{\rm K}\), more than hot enough to start a fire.
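Since \(\sigma\) cancels on both sides, the solution is simply \(T=\alpha_\circ^{1/4}T_\odot\). A short sketch of that arithmetic (the variable names are mine):

```python
T_SUN = 5778.0        # solar surface temperature, K
ALBEDO_MOON = 0.12    # fraction of sunlight that survives the "filter"

# sigma * T^4 = albedo * sigma * T_sun^4  =>  T = albedo^(1/4) * T_sun
t_filtered = ALBEDO_MOON ** 0.25 * T_SUN

print(f"Temperature under 12% sunlight from all directions: {t_filtered:.0f} K")
```

Note how weakly the temperature depends on the filter: cutting the intensity to 12% only lowers the temperature to about 59% of the Sun's, because of the fourth root.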

Suppose the lunar disk were a mirror. Then, we could set up a suitable arrangement of lenses and mirrors to ensure that our object sees the Sun, reflected by the Moon, in all sky directions. So we get the same figure, \(3401~{\rm K}\).

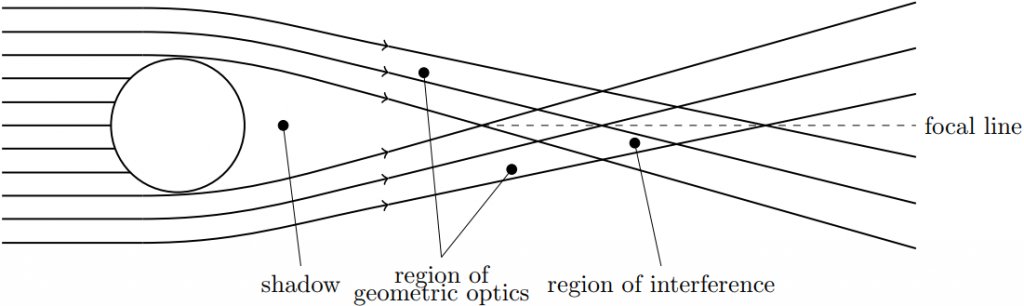

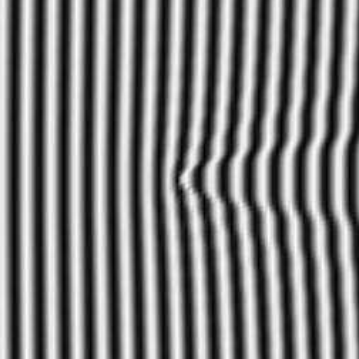

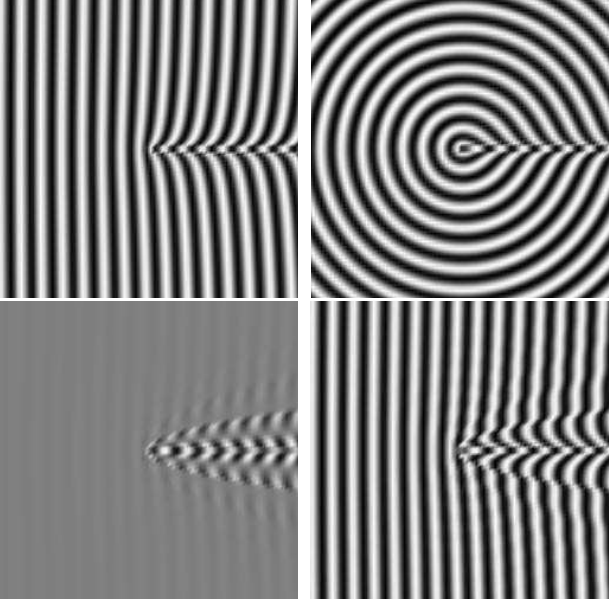

But, and this is where we finally get to the real business of moonlight, the lunar disk is not a mirror. It is not a specular reflector. It is a diffuse reflector. What does this mean?

Well, it means that even if we were to set up our optics such that we see the Moon in all sky directions, most of what we would see (or rather, wouldn’t see) is not reflected sunlight but reflections of deep space. Or, if you wish, our “seeing rays” would go from our eyes to the Moon and then to some random direction in space, with very few of them actually hitting the Sun.

What this means is that even when it comes to reflected sunlight, the Moon acts as a diffuse emitter. Its spectrum will no longer be a pure blackbody spectrum (as it is now a combination of its own blackbody spectrum and that of the Sun) but that’s not really relevant. If we focused moonlight (including diffusely reflected light and absorbed light re-emitted as heat), it’s the same as focusing heat from something that emits heat or light at \(j^\star_\circ=I_\odot\), since the reflected part \(\alpha_\circ I_\odot\) and the re-emitted part \((1-\alpha_\circ)I_\odot\) add up to \(I_\odot\). That something would have an equivalent temperature of \(394~{\rm K}\), and that’s the maximum temperature to which we can heat an object using optics that ensures that it “sees” the Moon in all sky directions.
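To make the bookkeeping explicit, here is a small sketch that adds the diffusely reflected and re-emitted fluxes and converts the total into an equivalent blackbody temperature. The variable names are my own; the model is the same flat-disk approximation used throughout.

```python
SIGMA = 5.670373e-8   # Stefan-Boltzmann constant, W/m^2/K^4
I_SUN = 1368.0        # solar constant, W/m^2
ALBEDO_MOON = 0.12

reflected = ALBEDO_MOON * I_SUN            # diffusely reflected sunlight
re_emitted = (1.0 - ALBEDO_MOON) * I_SUN   # absorbed, then radiated as heat
j_moon = reflected + re_emitted            # total leaving the surface

# Equivalent temperature of a blackbody emitting the same total flux.
t_equivalent = (j_moon / SIGMA) ** 0.25

print(f"Total lunar emission: {j_moon:.0f} W/m^2")
print(f"Equivalent temperature: {t_equivalent:.0f} K")
```

The albedo drops out of the total entirely: whatever is not reflected is re-emitted, so a perfectly diffuse Moon can never deliver more than \(I_\odot\).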

So then let me ask another question… how specular would the Moon have to be for us to be able to light a fire with moonlight? Many surfaces can be characterized as though they were a combination of a diffuse and a specular reflector. What percentage of sunlight would the Moon have to reflect like a mirror, which we could then collect and focus to produce enough heat, say, to combust paper at the famous \(451~{\rm F}=506~{\rm K}\)? Very little, as it turns out.

If the Moon had a specularity coefficient of only \(\sigma_\circ=0.00031\), with a suitable arrangement of optics (which may require some mighty big mirrors in space, but never mind that, we’re talking about a thought experiment here), we could concentrate reflected sunlight and lunar heat to reach an intensity of

\[I=\alpha_\circ\sigma_\circ j^\star_\odot+(1-\alpha_\circ\sigma_\circ)j^\star_\circ=3719~{\rm W}/{\rm m}^2,\]

which corresponds to a blackbody temperature of about \(506~{\rm K}\) and, according to Ray Bradbury, is enough heat to make a piece of paper catch fire.
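The formula can also be inverted to find the specularity needed for a given target temperature. The sketch below does both; `mixed_intensity` and `required_specularity` are hypothetical helper names of mine, and the model is just the weighted sum from the equation above.

```python
SIGMA = 5.670373e-8       # Stefan-Boltzmann constant, W/m^2/K^4
ALBEDO_MOON = 0.12
J_SUN = SIGMA * 5778.0 ** 4   # solar emissive power, W/m^2
J_MOON = 1368.0               # diffuse lunar emission, equal to I_sun


def mixed_intensity(specularity):
    """Intensity from a Moon that mirrors a small fraction of sunlight."""
    weight = ALBEDO_MOON * specularity   # share of the sky showing the Sun
    return weight * J_SUN + (1.0 - weight) * J_MOON


def required_specularity(target_temperature_k):
    """Specularity at which the mixed intensity matches a blackbody target."""
    target = SIGMA * target_temperature_k ** 4
    weight = (target - J_MOON) / (J_SUN - J_MOON)
    return weight / ALBEDO_MOON


intensity = mixed_intensity(0.00031)
needed = required_specularity(506.0)

print(f"Intensity at specularity 0.00031: {intensity:.0f} W/m^2")
print(f"Specularity needed for 506 K: {needed:.5f}")
```

Because the Sun's emissive power is tens of thousands of times larger than the lunar emission, even a tiny specular share dominates the total.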

So if it turns out that the Moon is not a perfectly diffuse emitter but has a little bit of specularity, it just might be possible to use its light to start a fire.