I have been reading some breathless reactions to a preprint posted a few days ago by the MiniBooNE experiment. The experiment is designed to detect neutrinos, in particular neutrino oscillations (the change of one neutrino flavor into another).

The headlines are screaming. Evidence found of a New Fundamental Particle, says one. Strange New Particle Could Prove Existence of Dark Matter, says another. Or how about, A Major Physics Experiment Just Detected A Particle That Shouldn’t Exist?

The particle in question is the so-called sterile neutrino. It is a neat concept, one I happen to quite like. It represents an elegant resolution to the puzzle of neutrino handedness. This refers to the helicity of neutrinos (often loosely called their chirality), essentially the direction in which they spin compared to their direction of motion. We only ever see “left-handed” neutrinos. But neutrinos have rest mass. So they move slower than light. That means that if you run fast enough and outrun a left-handed neutrino, so that relative to you it is moving backwards (but still spins in the same direction as before), when you look back, you’ll see a right-handed neutrino. This implies that right-handed neutrinos should be seen just as often as left-handed neutrinos. But they aren’t. How come?

Sterile neutrinos offer a simple answer: We don’t see right-handed neutrinos because they don’t interact (they are sterile). That is to say, when a neutrino interacts (emits or absorbs a Z-boson, or emits or absorbs a W-boson while changing into a charged lepton), it has to be a left-handed neutrino in the interaction’s center-of-mass frame.

If this view is true and such sterile neutrinos exist, even though they cannot be detected directly, their existence would skew the number of neutrino oscillation events. As to what neutrino oscillations are: neutrinos are massive. But unlike other elementary particles, neutrinos do not have a well-defined mass associated with their flavor (electron, muon, or tau neutrino). When a neutrino has a well-defined flavor (is in a flavor eigenstate) it has no well-defined mass, and vice versa. This means that a neutrino produced with a definite flavor travels as a mixture of mass eigenstates, and by the time it reaches a detector its flavor can appear to have changed (oscillated) from one state to another; e.g., a muon neutrino may appear at the detector as an electron neutrino. These flavor oscillations are rare, but they can be detected, and that’s what the MiniBooNE experiment is looking for.

And that is indeed what MiniBooNE found: an excess of events that is consistent with neutrino oscillations.
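For a rough feel of how such a flavor change depends on distance and energy, here is a minimal sketch of the textbook two-flavor vacuum oscillation formula. The function name and the parameter values (mixing strength, mass-squared difference, baseline) are illustrative assumptions of mine, not numbers taken from the MiniBooNE paper.

```python
import math

def oscillation_probability(sin2_2theta, delta_m2_ev2, baseline_km, energy_gev):
    """Two-flavor appearance probability, e.g. P(nu_mu -> nu_e).

    Textbook vacuum formula:
        P = sin^2(2*theta) * sin^2(1.267 * dm^2[eV^2] * L[km] / E[GeV])
    """
    phase = 1.267 * delta_m2_ev2 * baseline_km / energy_gev
    return sin2_2theta * math.sin(phase) ** 2

# Illustrative placeholder values, NOT the paper's fit results.
SIN2_2THETA = 0.01   # mixing strength sin^2(2*theta)
DELTA_M2 = 1.0       # mass-squared difference in eV^2
BASELINE = 0.5       # source-to-detector distance in km

for energy in (0.2, 0.4, 0.8, 1.2):   # neutrino energy in GeV
    p = oscillation_probability(SIN2_2THETA, DELTA_M2, BASELINE, energy)
    print(f"E = {energy:.1f} GeV  ->  P = {p:.5f}")
```

The point of the sketch is only that the probability is bounded by the mixing strength and varies with the ratio of baseline to energy, which is why an oscillation signal has a characteristic shape as a function of neutrino energy.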

MiniBooNE detects electron neutrinos. These can come from all kinds of (background) sources. But one particular source is an intense beam of muon neutrinos produced at Fermilab. Because of neutrino oscillations, some of the neutrinos in this beam will be detected as electron neutrinos, yielding an excess of electron neutrino events above background.

And that’s exactly what MiniBooNE sees, with very high confidence: 4.8σ. That’s almost the generally accepted 5σ detection threshold for a new particle. But this value of 4.8σ is not about a new particle. It is the significance associated with excess electron neutrino detection events overall: an excess that is expected from neutrino oscillations.
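To put such σ values in perspective, here is a small sketch that converts a significance into the probability of a fluctuation at least that large. It assumes a Gaussian distribution and a one-sided convention; the function name is mine, and the paper may well use a different convention.

```python
import math

def one_sided_p_value(n_sigma):
    """Probability that a Gaussian variable fluctuates upward by at least n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))

# Compare a modest 3 sigma effect, the quoted 4.8 sigma, and the 5 sigma threshold.
for n_sigma in (3.0, 4.8, 5.0):
    print(f"{n_sigma:.1f} sigma -> p = {one_sided_p_value(n_sigma):.2e}")
```

Under these assumptions, the gap between 4.8σ and 5σ is small, so the excess itself is hardly in doubt. What it is an excess of is a separate question.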

So what’s the big deal, then? Why the screaming headlines? As far as I can tell, it all boils down to this sentence in the paper: “Although the data are fit with a standard oscillation model, other models may provide better fits to the data.”

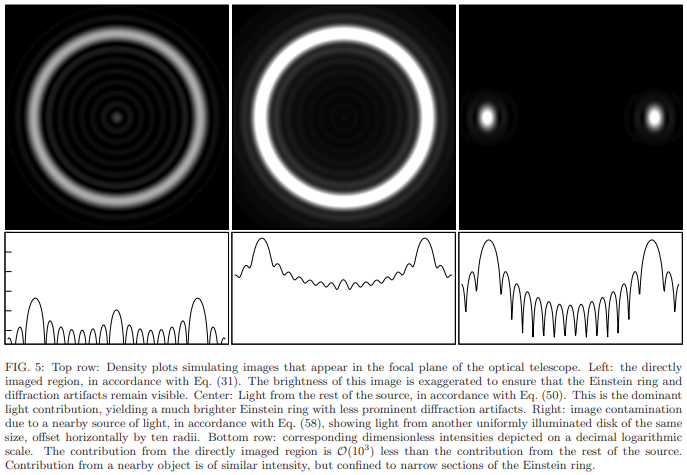

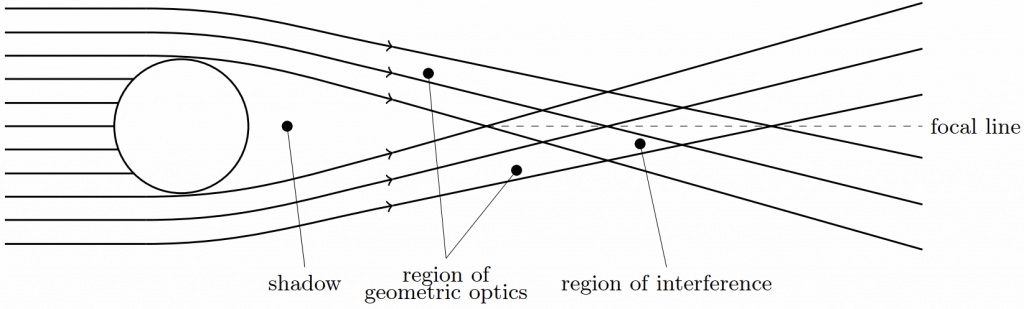

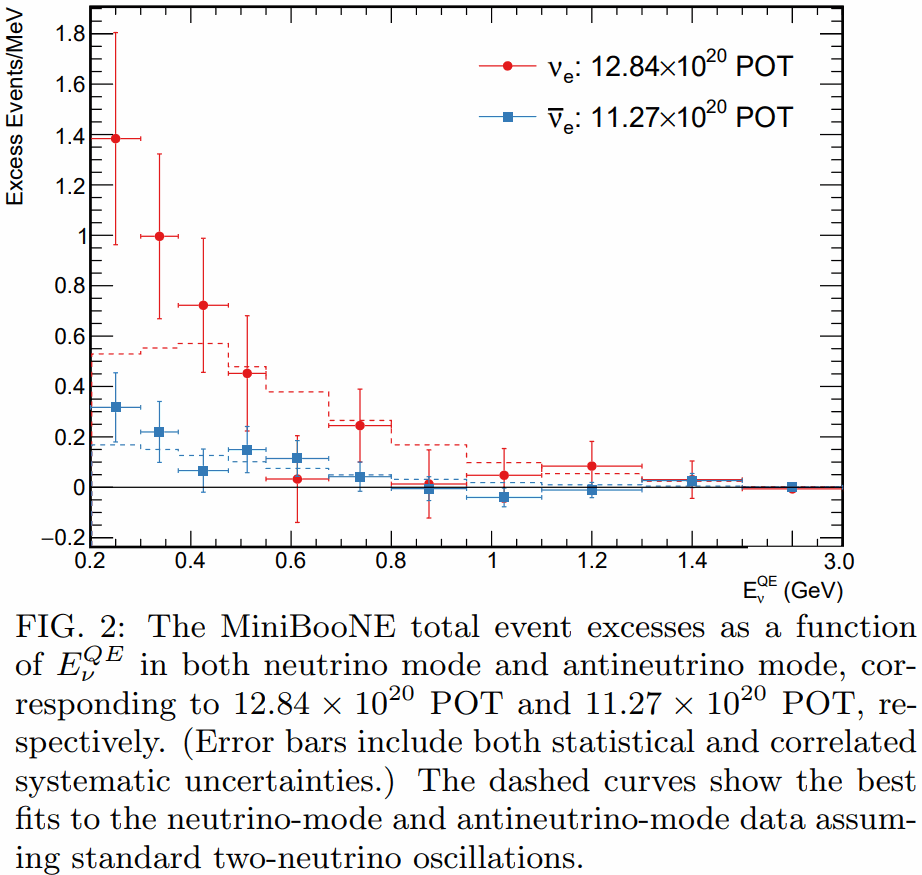

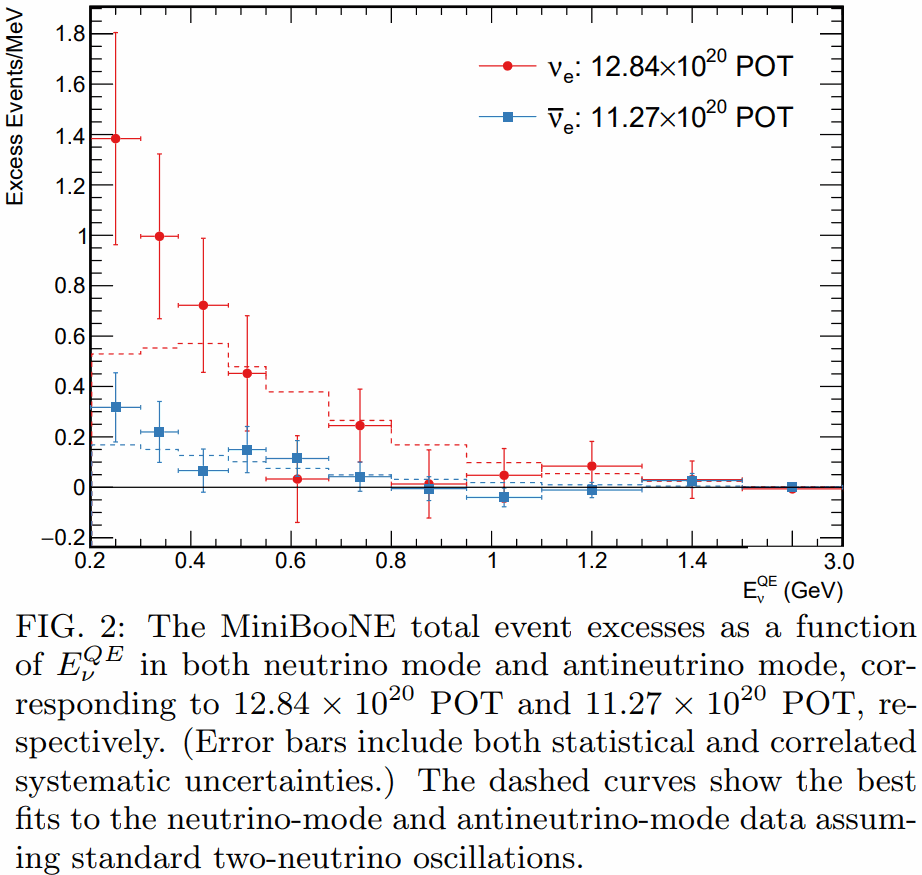

What this somewhat cryptic sentence means is best illustrated by a figure from the paper:

This figure shows the excess events (above background) detected by MiniBooNE, but also the expected number of excess events from neutrino oscillations. Notice how only the first two red data points fall significantly above the expected number. (In case you are wondering, POT means Protons On Target, that is to say, the number of protons hitting a beryllium target at Fermilab, producing the desired beam of muon neutrinos.)

Yes, these two data points are intriguing. Yes, they may indicate the existence of new physics beyond two-neutrino oscillations. In particular, they may indicate the existence of another oscillation mode, muon neutrinos oscillating into sterile neutrinos that, in turn, oscillate into electron neutrinos, yielding this excess.

Mind you, if this is a sign of sterile neutrinos, these sterile neutrinos are unlikely dark matter candidates; their mass would be too low.

Or these two data points are mere statistical flukes. After all, as the paper says, “the best oscillation fit to the excess has a probability of 20.1%”. That is far from improbable. Sure, the fact that it is only 20.1% can be interpreted as a sign of some tension between the Standard Model and this experiment. But it is certainly not a discovery of new physics, and absolutely not a confirmation of a specific model of new physics, such as sterile neutrinos.
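To illustrate what a fit probability of this kind means, here is a sketch that turns a chi-square value and a number of degrees of freedom into the probability of getting a fit at least that poor by chance. I am assuming the quoted 20.1% is a chi-square-style goodness-of-fit probability; the function name and the (chi2, ndf) pairs below are hypothetical and not taken from the paper. To stay within the standard library, the sketch uses the closed form that holds only for an even number of degrees of freedom.

```python
import math

def chi2_fit_probability(chi2, ndf):
    """P(chi-square >= chi2) for a positive, even number of degrees of freedom.

    For ndf = 2m the survival function has the closed form
        exp(-x/2) * sum_{i=0}^{m-1} (x/2)^i / i!
    """
    if ndf <= 0 or ndf % 2 != 0:
        raise ValueError("this sketch only handles positive even ndf")
    half = chi2 / 2.0
    term, total = 1.0, 1.0            # the i = 0 term of the sum
    for i in range(1, ndf // 2):
        term *= half / i              # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total

# Hypothetical (chi2, ndf) pairs, NOT the paper's values.
for chi2, ndf in ((10.0, 10), (13.4, 10), (25.0, 10)):
    prob = chi2_fit_probability(chi2, ndf)
    print(f"chi2/ndf = {chi2}/{ndf} -> probability = {prob:.3f}")
```

A probability in the tens of percent means that, if the model were exactly right, a fit this poor or poorer would still turn up fairly often from statistical scatter alone.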

And indeed, the paper makes no such claim. The word “sterile” appears only four times in the paper, in a single sentence in the introduction: “[…] more exotic models are typically used to explain these anomalies, including, for example, 3+N neutrino oscillation models involving three active neutrinos and N additional sterile neutrinos [6-14], resonant neutrino oscillations [15], Lorentz violation [16], sterile neutrino decay [17], sterile neutrino non-standard interactions [18], and sterile neutrino extra dimensions [19].”

So yes, there is an intriguing sign of an anomaly. Yes, it may point the way towards new physics. It might even be new physics involving sterile neutrinos.

But no, this is not a discovery. At best, it’s an intriguing hint; quite possibly, just a statistical fluke.

So why the screaming headlines, then? I wish I knew.