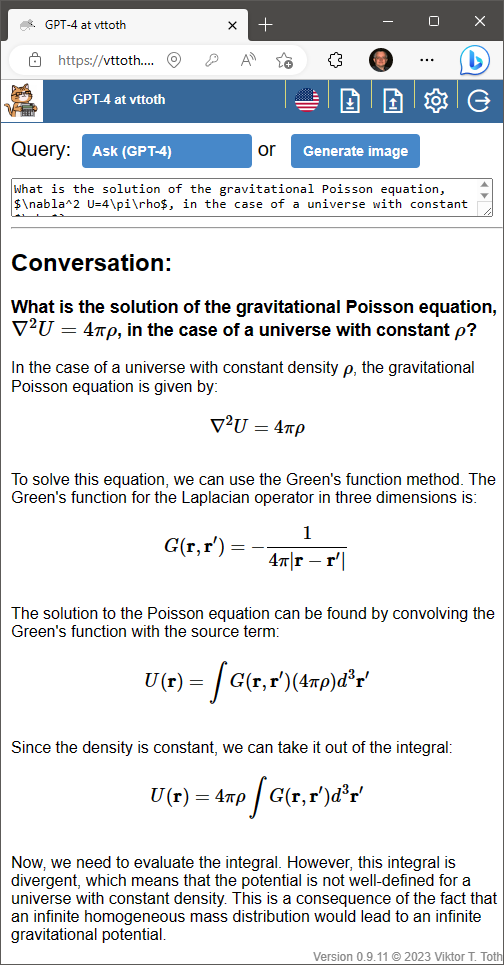

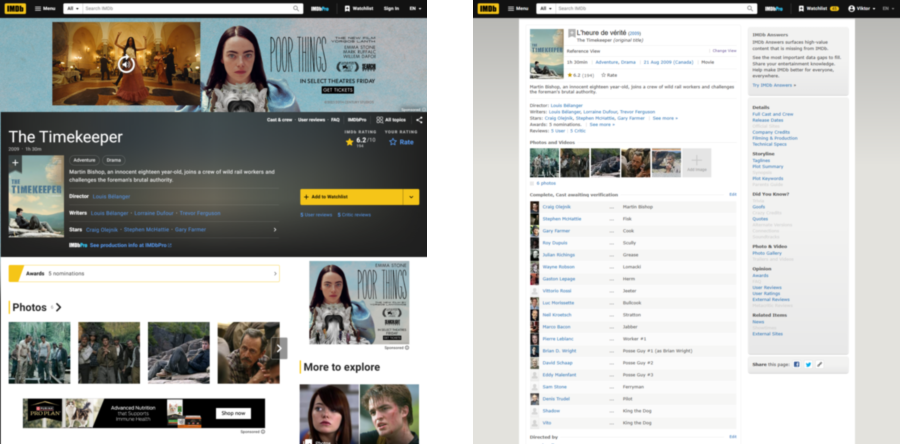

I wanted to check something on IMDB. I looked up the film. I was confronted by an unfamiliar user interface. Now unfamiliar is okay, but the UI I saw is badly organized: key information (e.g., year of release, country of origin) is difficult to find, and oversized images come at the expense of useful content. And no, I don’t mean the ads; I am comfortable with relevant, respectful ads. It’s the fact that a lot less information is presented, taking up a lot more space.

Fortunately, in the case of IMDB I was able to restore a much more useful design by logging in to my IMDB account, going to account settings, and making sure that the Contributors checkbox was checked. Phew. So much more (SO MUCH MORE) readable, digestible at a glance. Yes, it’s smaller print. Of course. But the information is much better organized, the appearance is more consistent (no widely different font sizes) and the page is dominated by information, not entertainment in the form of images.

IMDB is not the only example. Recently, after I gave it a valiant try, I purposefully downgraded my favorite Android e-mail software, as its new user interface was such a letdown. At least I had the foresight to save the APK of the old version, so I was able to install it and then make sure in the Play Store settings that it would not be upgraded. Not that I am comfortable not upgrading software, but in this case it was worth the risk.

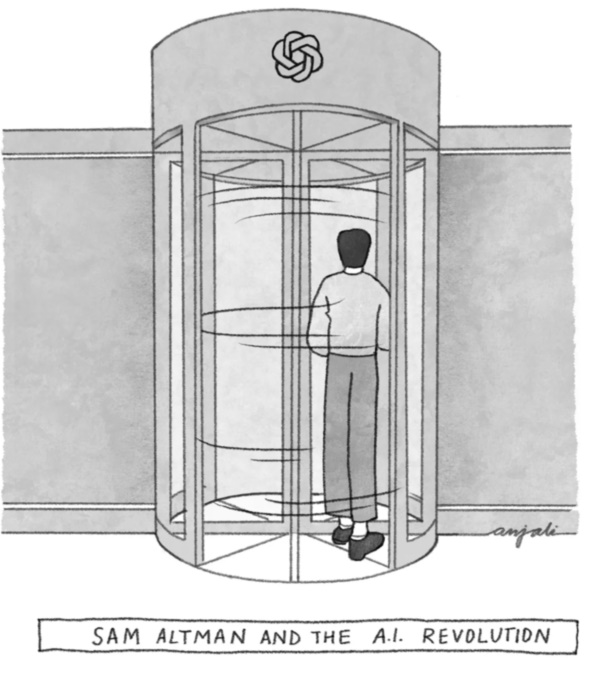

All this reminds me of a recent discussion with a friend who works as a software professional himself: he is fed up to his eyeballs with the pervasive “Agile” fad at his workplace, with its mandatory “Scrum” meetings and whatnot. Oh, the blessings of being an independent developer: I could tell him that if a client mentioned “Agile” more than once, it’d be time for me to “Scrum” the hell out of there…

OK, I hope it’s not just grumpy ole’ complaining on my part. But seriously, these trendy fads are not helping. Software becomes less useful. Project management culture reinvents the wheel (I have an almost 50-year-old Hungarian-language book on my shelf on project management that discusses iterative management in depth) with buzzwords that no doubt bring shady consultants a lot more money than I ever made actually building things. (Not complaining. I purposefully abandoned that direction in my life 30 years ago when I quietly walked out of a meeting, not having the stomach anymore to wear a $1000 suit and nod wisely while listening to eloquent BS.) The result is all too often a badly managed project, with a management culture that is no less rigid than the old culture (no fads can overcome management incompetence) but with less documentation, less control, less consistent system behavior, more undocumented dependencies, and compromised security. UI design has fads that change with the seasons, united only by results that are about as practical as a Paris fashion designer’s latest collection of “work attire”.

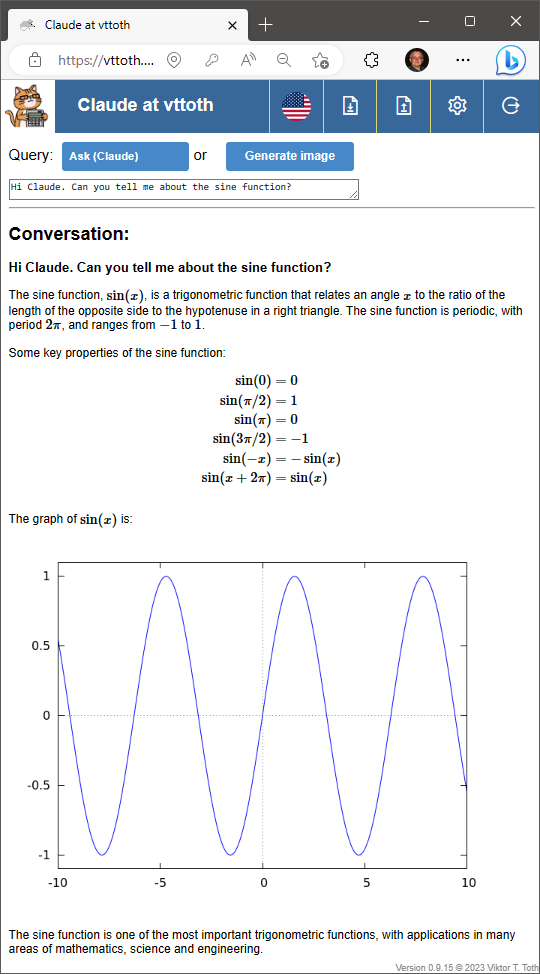

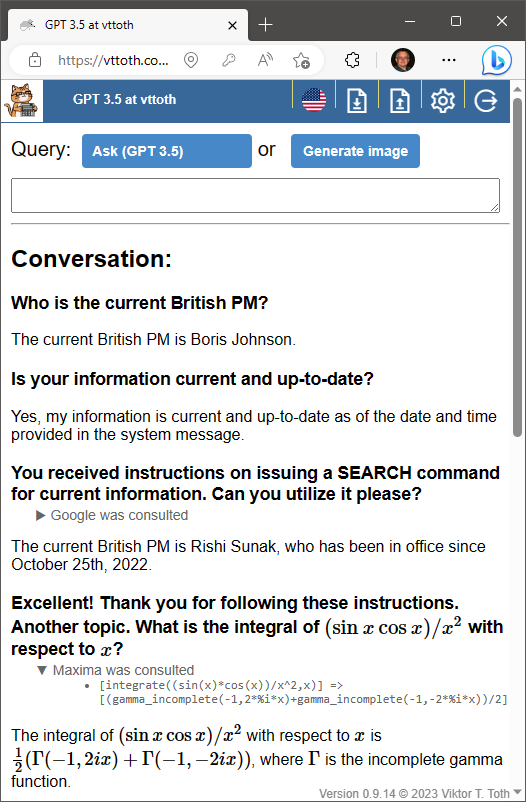

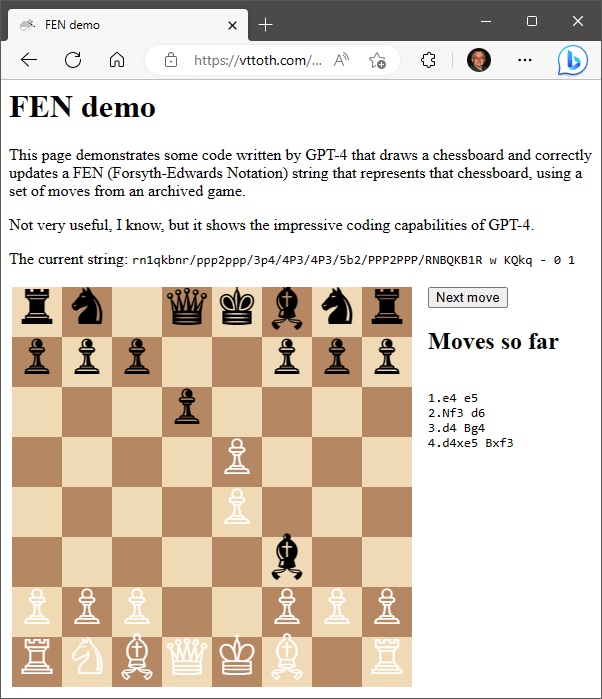

OK, I would be lying if I said that only bad things come out of change. Now that I use AI in software development, not a day goes by without the AI teaching me something I did not know, including tools, language features and whatnot that can help improve the user experience. But it would be so nice if we didn’t take three steps back for every four steps forward.