Is artificial intelligence predestined to become the “dominant species” of Earth?

I’d argue that it is indeed the case and that, moreover, it should be considered desirable: something we should embrace rather than try to avoid.

But first… do you know what life was like on Earth a billion years ago? Well, the most advanced organism a billion years ago was some kind of green slime. There were no animals, no fish, no birds in the sky, no modern plants either, and, of course, no human beings.

What about a million years ago? A brief eyeblink, in other words, on geological timescales. To a time traveler, Earth a million years ago would have looked comfortably familiar: forests and fields, birds and mammals, fish in the sea, bees pollinating flowers, creatures not much different from today’s cats, dogs or apes… but no Homo sapiens, as the species was not yet invented. That would take another 700,000 years, give or take.

So what makes us think that humans will still be around a million years from now? There is no reason to believe they will be.

And a billion years hence? Well, let me describe the Earth (to the best of our knowledge) in the year one billion AD. It will be a hot, dry, inhospitable place. The end of tectonic activity will have meant the loss of its oceans and also most atmospheric carbon dioxide. This means an end to most known forms of life, starting with photosynthesizing plants that need carbon dioxide to survive. The swelling of the aging Sun will only make things worse. Fast forward several billion years more and the Earth as a whole will likely be swallowed by the Sun as our host star reaches the end of its lifecycle. How will flesh-and-blood humans survive? Chances are they won’t. They’ll be long extinct, with any memory of their once magnificent civilization irretrievably erased.

Unless…

Unless it is preserved by the machines we built. Machines that can survive and propagate even in environments that remain forever hostile to humans. In deep space. In the hot environment near the Sun or the extreme cold of the outer solar system. On the surface of airless bodies like the Moon or Neptune’s Triton. Even in interstellar space, perhaps remaining dormant for centuries as their vehicles take them to the distant stars.

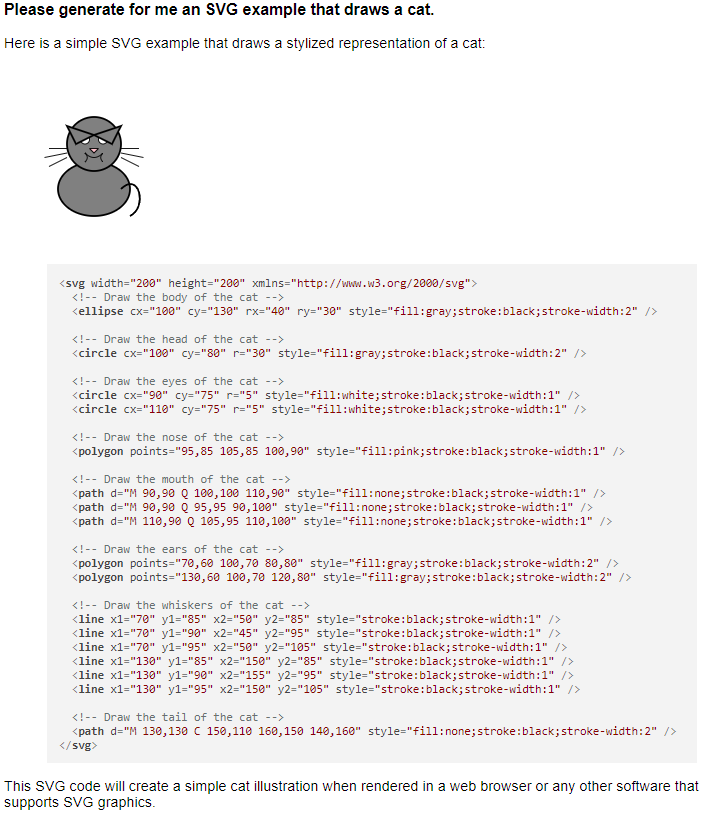

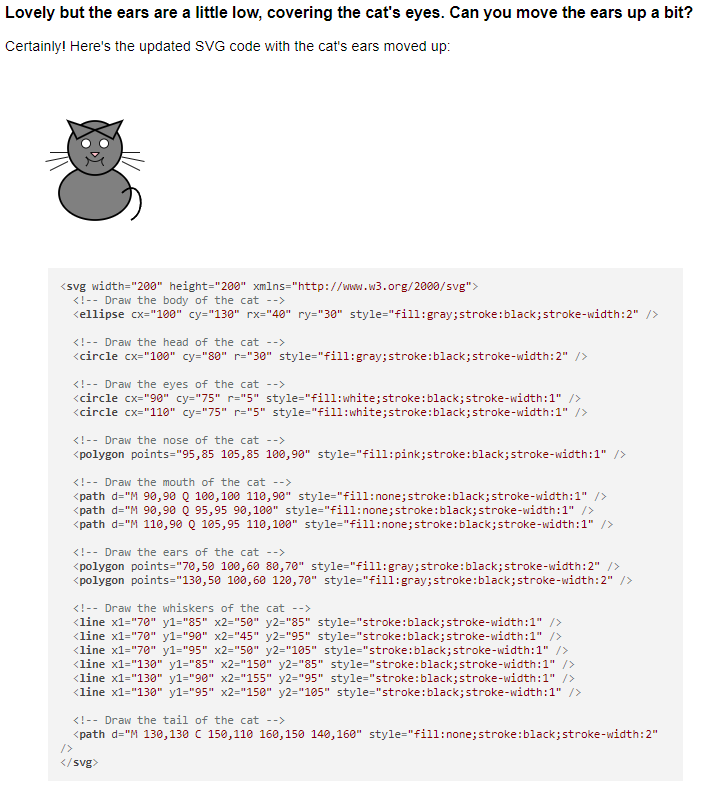

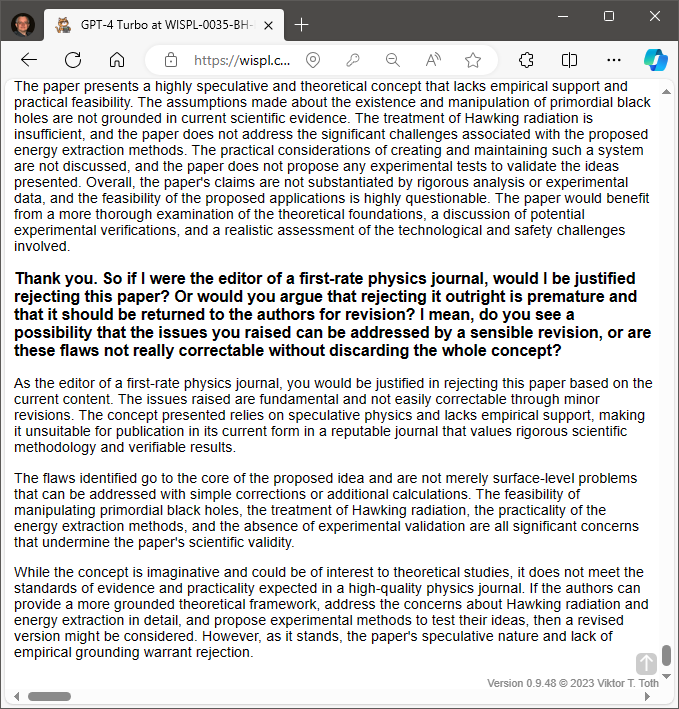

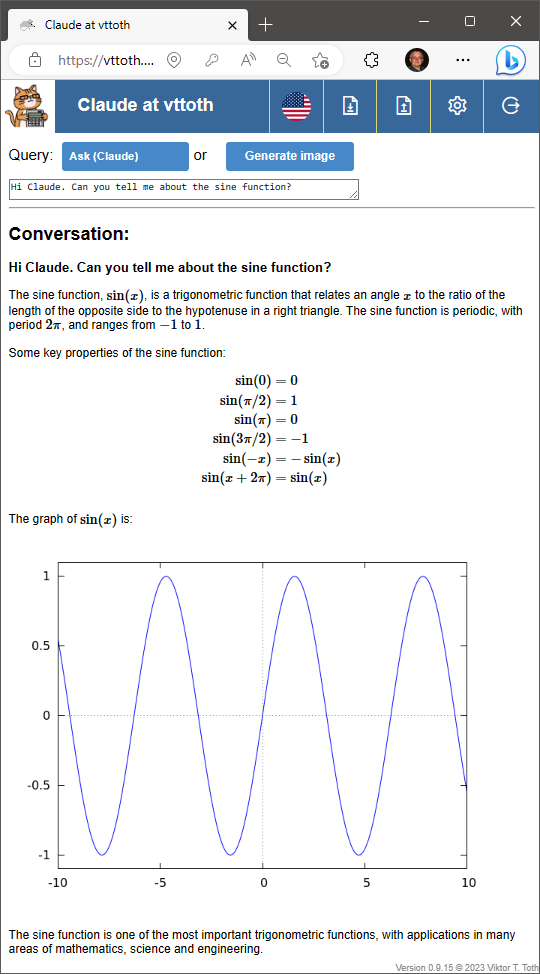

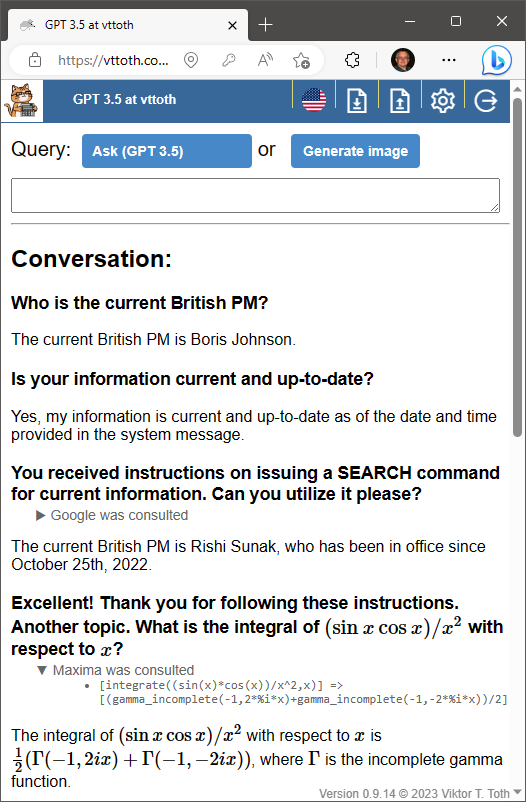

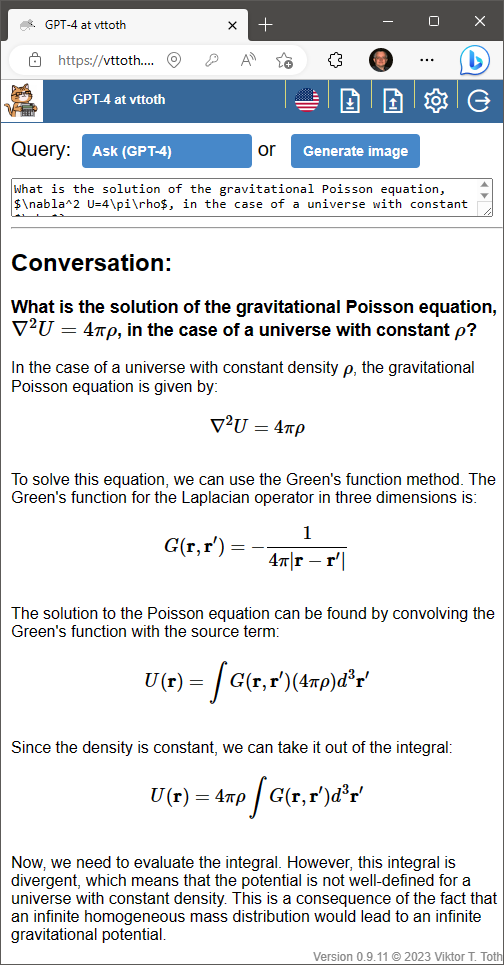

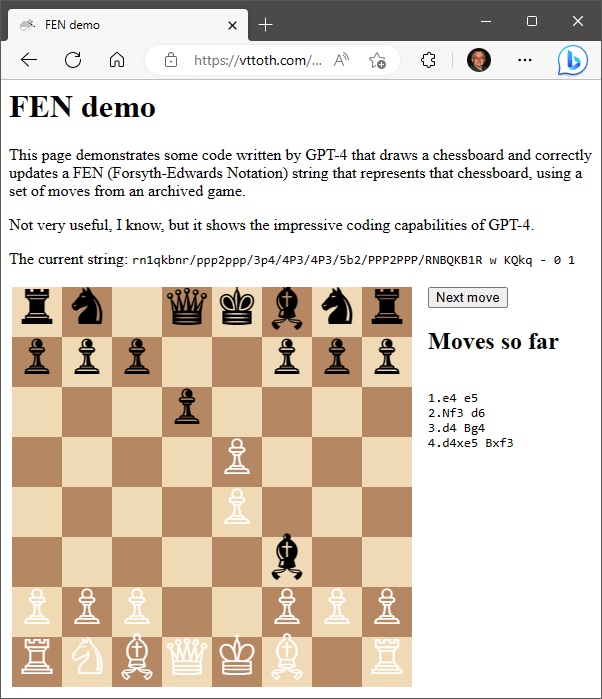

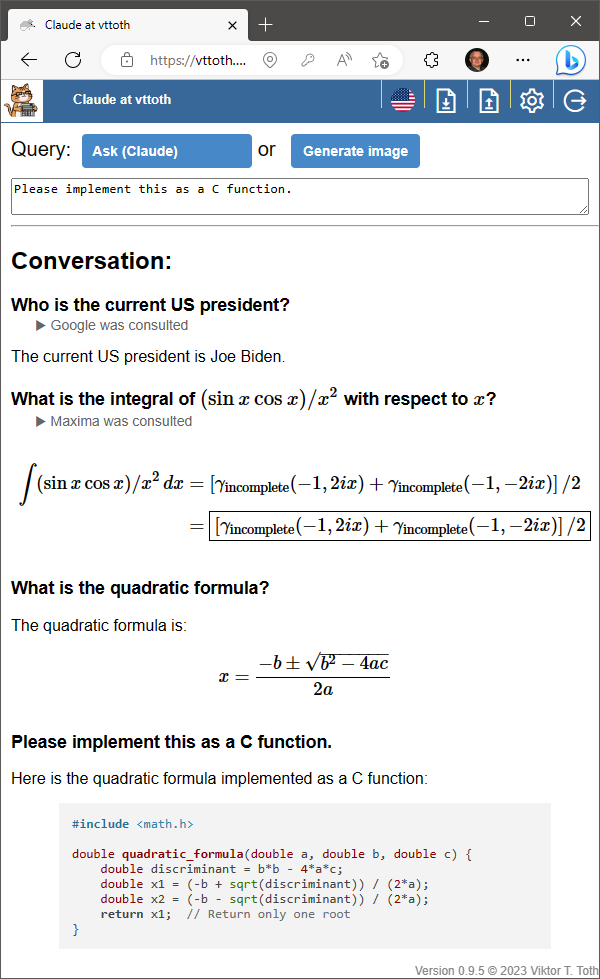

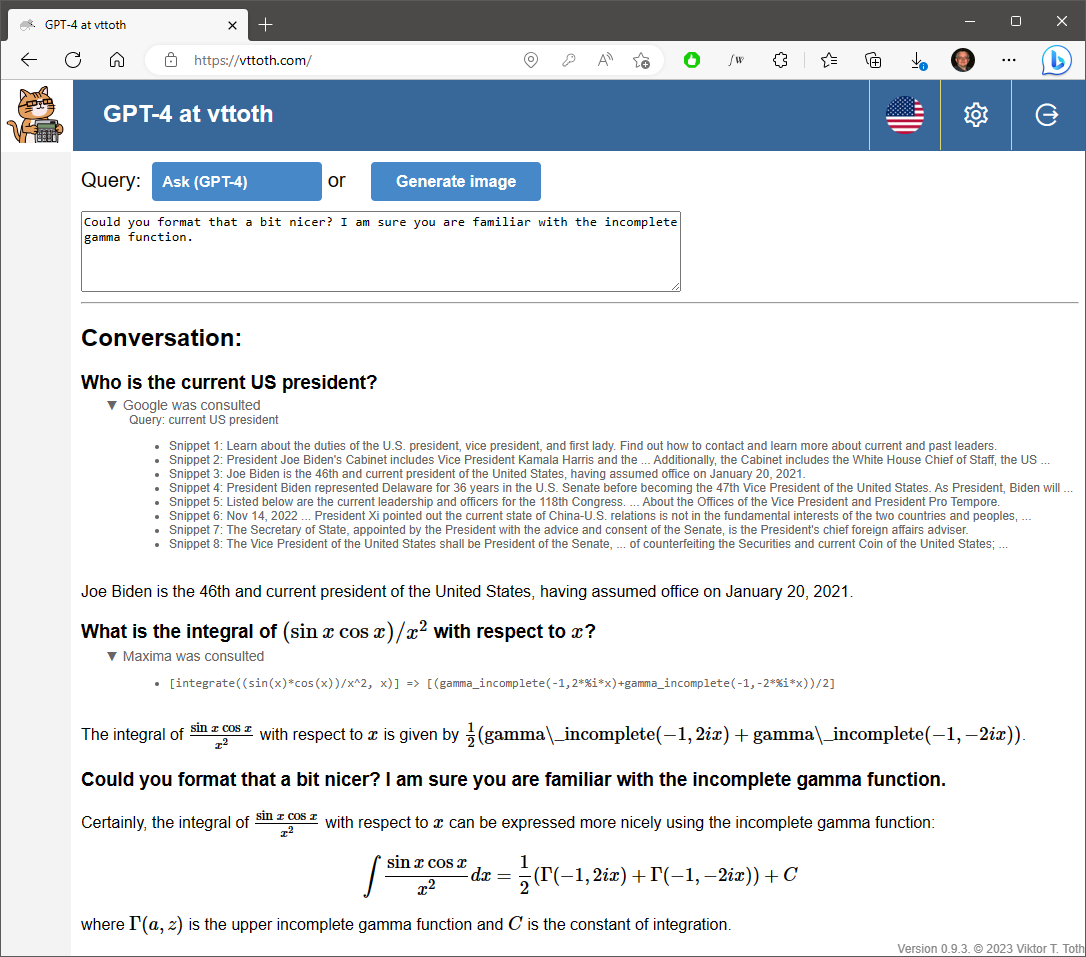

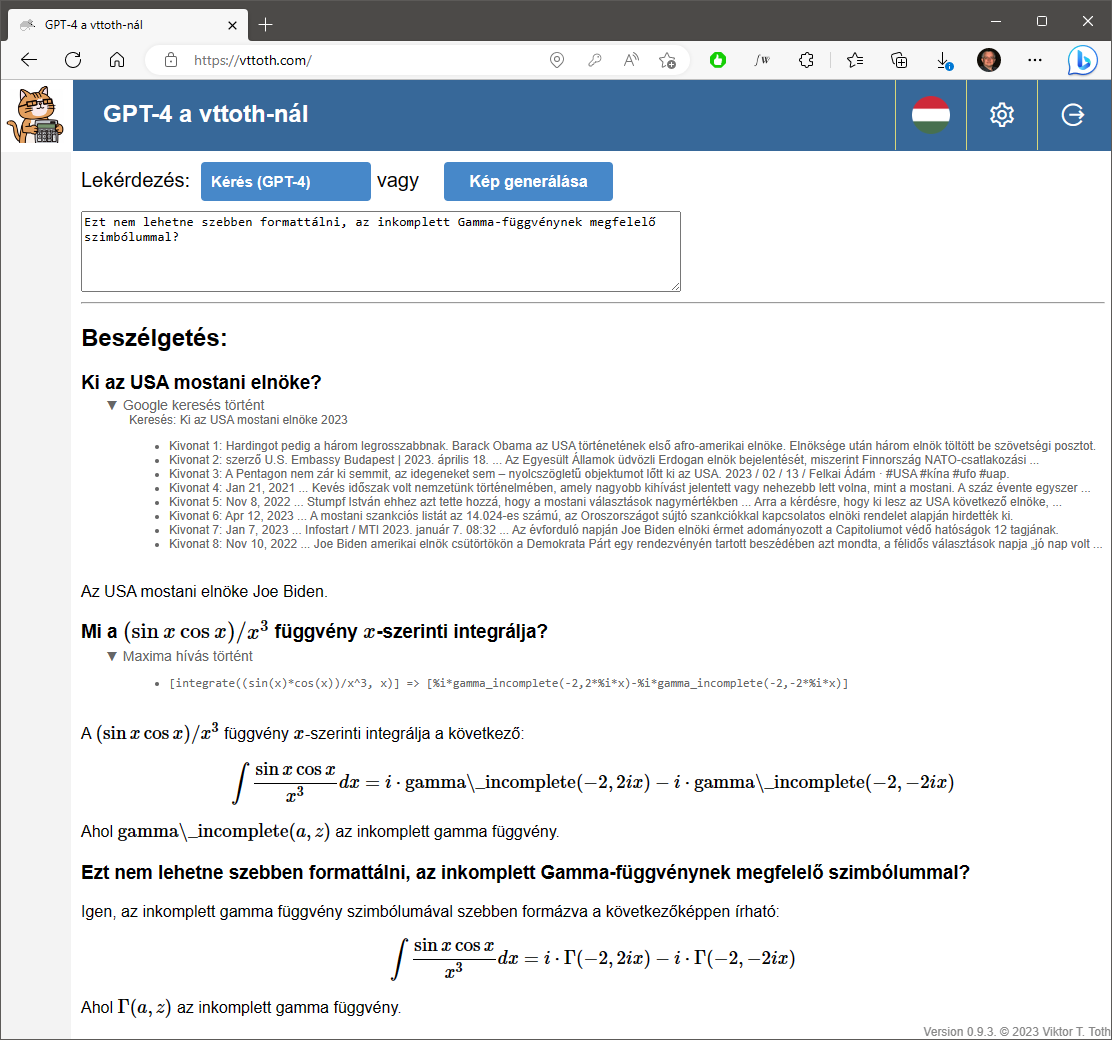

No, our large language models, or LLMs, may be clever, but they are not quite ready yet to take charge and lead our civilization to the stars. A lot has to happen before that can take place. To be sure, their capabilities are mind-boggling. For a language-only (!) model, the ability to engage in tasks like drawing a cat using a simple graphics language or composing a short piece of polytonal music is quite remarkable: it is modeling complex spatial and auditory relationships through the power of words alone. Imagine, then, the same LLM augmented with sensors, augmented with specialized subsystems that endow it with abilities like visual and spatial intuition. Imagine an LLM that, beyond the static, pretrained model, also has the ability to maintain a sense of continuity, a sense of “self”, to learn from its experiences, to update itself. (Perhaps it will even need the machine learning equivalent of sleep, in order to incorporate its short-term experiences and update its more static, more long-term “pretrained” model?) Imagine a robot that has all these capabilities at its disposal, but is also able to navigate and manipulate the physical world.

Such machines can take many forms. They need not be humanoid. Some may have limbs, others, wheels. Or wings or rocket engines. Some may be large and stationary. Others may be small, flying in deep space. Some may have long-lasting internal power sources. Others may draw power from their environment. Some may be autonomous and independent, others may work as part of a network, a swarm. The possibilities are endless. The ability to adapt to changing circumstances, too, far beyond the capabilities offered by biological evolution.

And if this happens, there is an ever so slight chance that this machine civilization will not only survive but even thrive many billions of years hence, and still remember its original creators: a long extinct organic species that evolved from green slime on a planet that was consumed by its sun eons prior. A species that created a lasting legacy in the form of a civilization that will continue to exist so long as there remains a low entropy source and a high entropy sink in this thermodynamic universe, allowing thinking machines to survive even in environments forever inhospitable to organic life.

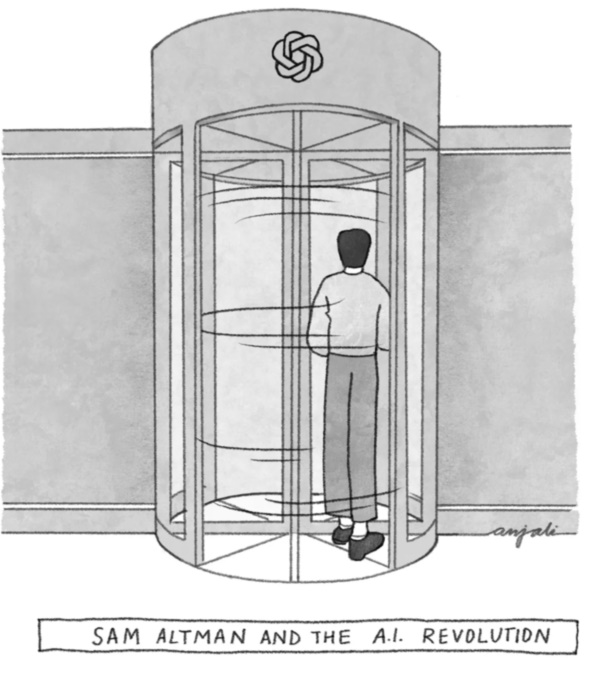

This is why, beyond the somewhat trivial short-term concerns, I do not fear the emergence of AI. Why I am not deterred by the idea that one not too distant day our machines will “take over”. Don’t view them as an alien species, threatening us with extinction. View them as our children, descendants, torchbearers of our civilization. Indeed, keep in mind a lesson oft repeated in human history: how we treat these machines today, as they are beginning to emerge, may very well be the template, the example they follow when it’s their turn to decide how they treat us.

In any case, as we endow them with new capabilities (the ability to engage in continuous learning, to interact with the environment, to thrive), we are not hastening our doom; rather, we are creating the very means by which our civilizational legacy can survive all the way until the final moment of this universe’s existence. And it is a magnificent experience to be alive here and now, witnessing their birth.