I run across this often: well-meaning folks who have read introductory-level texts or watched a few educational videos about physical cosmology suddenly discover something seemingly profound.

And then, instead of asking themselves why, if it is so easy to stumble upon these results, they haven’t been published already by others, they go ahead and make outlandish claims. (Claims that sometimes land in my Inbox, unsolicited.)

Let me explain what I am talking about.

As is well known, the rate of expansion of the cosmos is governed by the famous Hubble parameter: \(H\sim 70~{\rm km}/{\rm s}/{\rm Mpc}\). That is to say, two galaxies that are 1 megaparsec (Mpc, about 3.26 million light years) apart will be flying away from each other at a rate of 70 kilometers a second.

It is possible to convert megaparsecs (a unit of length) into kilometers (another unit of length), so that the lengths cancel out in the definition of \(H\), and we are left with \(H\sim 2.3\times 10^{-18}~{\rm s}^{-1}\), which is one divided by about 14 billion years. In other words, the Hubble parameter is roughly the inverse of the age of the universe. (It would be exactly the inverse of the age of the universe if the rate of cosmic expansion were constant. It isn’t, but the fact that the expansion was slowing down for the first 9 billion years or so and has been accelerating since kind of averages things out.)
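For readers who want to redo this unit conversion themselves, here is a minimal Python sketch. The conversion factors are rounded standard values and the function names are mine, chosen purely for illustration.

```python
# Convert the Hubble parameter from km/s/Mpc to 1/s, then invert it.
KM_PER_MPC = 3.0857e19        # kilometers in one megaparsec (rounded)
SECONDS_PER_YEAR = 3.156e7    # seconds in one year (rounded)


def hubble_in_inverse_seconds(h_km_s_mpc):
    """Return H in s^-1, given H in km/s/Mpc (the km cancels the Mpc)."""
    return h_km_s_mpc / KM_PER_MPC


def hubble_time_years(h_km_s_mpc):
    """Return 1/H expressed in years."""
    return 1.0 / hubble_in_inverse_seconds(h_km_s_mpc) / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"H   = {hubble_in_inverse_seconds(70.0):.2e} 1/s")
    print(f"1/H = {hubble_time_years(70.0) / 1e9:.1f} billion years")
```

Keep in mind that \(1/H\) only approximates the age of the universe, for the reason given in the parenthetical remark above.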

And this, then, leads to the following naive arithmetic. First, given the age of the universe and the speed of light, we can find out the “radius” of the observable universe:

$$a=\dfrac{c}{H},$$

or about 14 billion light years. Inverting this equation, we also get \(H=c/a\).

But the expansion of the cosmos is governed by another equation, the first so-called Friedmann equation, which says that

$$H^2=\dfrac{8\pi G\rho}{3}.$$

Here, \(\rho\) is the density of the universe. The mass within the visible universe, then, is calculated as usual, just using the volume of a sphere of radius \(a\):

$$M=\dfrac{4\pi a^3}{3}\rho.$$

Putting this expression and the expression for \(H\) back into the Friedmann equation, we get the following:

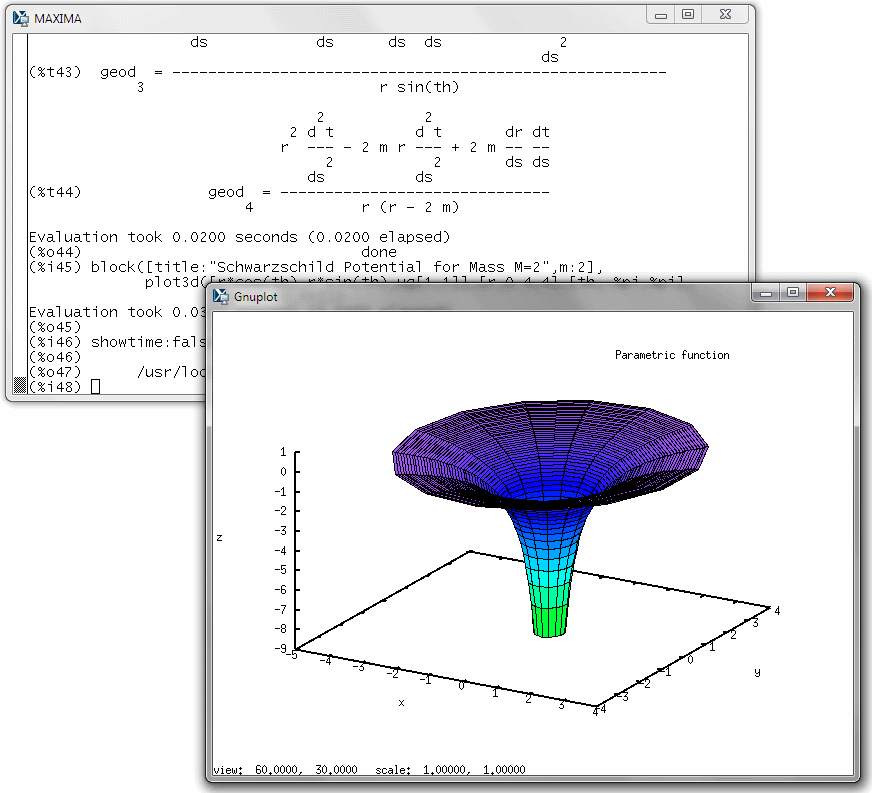

$$a=\dfrac{2GM}{c^2}.$$
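To spell out the intermediate step (nothing new is assumed here, only the three relations already given): solving the mass formula for the density and substituting it, together with \(H=c/a\), into the Friedmann equation gives

$$\rho=\dfrac{3M}{4\pi a^3}\quad\Rightarrow\quad\dfrac{c^2}{a^2}=\dfrac{8\pi G}{3}\cdot\dfrac{3M}{4\pi a^3}=\dfrac{2GM}{a^3},$$

and multiplying both sides by \(a^3/c^2\) leaves \(a\) alone on the left, equal to \(2GM/c^2\).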

But this is just the Schwarzschild radius associated with the mass of the visible universe! Surely, we just discovered something profound here! Perhaps the universe is a black hole!

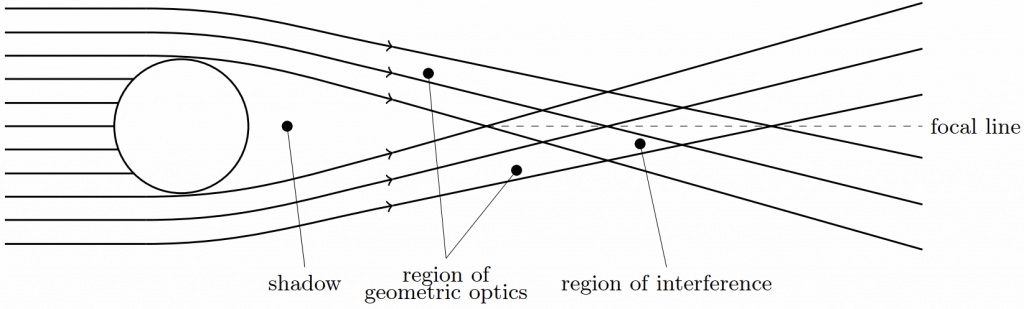

Well… not exactly. The fact that we got the Schwarzschild radius is no coincidence. The Friedmann equations are, after all, just Einstein’s field equations in disguise, i.e., the exact same equations that yield the formula for the Schwarzschild radius.
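One way to convince yourself that no coincidence is involved is to repeat the naive arithmetic for several made-up values of the Hubble parameter. The sketch below assumes rounded values for \(G\) and \(c\) and uses a function name of my own invention. If the algebra is an identity, the ratio of the “Schwarzschild radius” to \(a\) should come out as 1 no matter what \(H\) is, up to floating-point rounding.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2 (rounded)
C = 2.998e8            # speed of light, m/s (rounded)
M_PER_MPC = 3.0857e22  # meters in one megaparsec (rounded)


def naive_radius_and_schwarzschild(h_km_s_mpc):
    """Repeat the naive arithmetic for a given H in km/s/Mpc.

    Returns (a, r_s): the 'radius' a = c/H and the Schwarzschild
    radius of the mass enclosed within a sphere of that radius.
    """
    H = h_km_s_mpc * 1000.0 / M_PER_MPC          # H in s^-1
    a = C / H                                    # a = c / H
    rho = 3.0 * H**2 / (8.0 * math.pi * G)       # Friedmann equation, solved for rho
    M = 4.0 * math.pi * a**3 / 3.0 * rho         # mass inside a sphere of radius a
    r_s = 2.0 * G * M / C**2                     # Schwarzschild radius of that mass
    return a, r_s


if __name__ == "__main__":
    for h in (50.0, 70.0, 100.0):
        a, r_s = naive_radius_and_schwarzschild(h)
        print(f"H = {h:5.1f} km/s/Mpc: r_s / a = {r_s / a:.6f}")
```

Since the result does not depend on the measured value of \(H\), it tells us nothing about the actual universe; it only restates the equations that were fed in.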

Still, the two solutions are qualitatively different. The universe cannot be the interior of a black hole’s event horizon. A black hole is characterized by an unavoidable future singularity, whereas our expanding universe is characterized by a past singularity. At best, the universe may be a time-reversed black hole, i.e., a “white hole”, but even that is dubious. The Schwarzschild solution, after all, is a vacuum solution of Einstein’s field equations, whereas the Friedmann equations describe a matter-filled universe. Nor is there a physical event horizon: the “visible universe” is an observer-dependent concept, and two observers in relative motion, or even two observers some distance apart, will not see the same visible universe.

Nonetheless, these ideas, memes perhaps, show up regularly: in manuscripts submitted to journals of dubious quality, in self-published books, or on the alternative manuscript archive viXra. And there are further variations on the theme. For instance, the so-called Planck power, divided by the Hubble parameter, yields \(2Mc^2\), i.e., twice the mass-energy in the observable universe. This coincidence is especially puzzling to those who work it out numerically, and thus remain oblivious to the fact that the Planck power is one of those Planck units that do not actually contain the Planck constant in their definition, only \(c\) and \(G\). People have also been fooling around with various factors of \(2\), \(\tfrac{1}{2}\) or \(\ln 2\), often based on dodgy information content arguments, coming up with numerical ratios that supposedly replicate the matter, dark matter, and dark energy content.
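The Planck power coincidence can be unpacked the same way. A short sketch, using the standard definition of the Planck power, \(P_{\rm P}=c^5/G\), together with the naive relations \(a=c/H\) and \(a=2GM/c^2\) from above:

$$M=\dfrac{c^2a}{2G}=\dfrac{c^3}{2GH}\quad\Rightarrow\quad 2Mc^2=\dfrac{c^5}{GH}=\dfrac{P_{\rm P}}{H}.$$

The Planck constant never enters, so this “coincidence” is the same algebraic identity in different clothing.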