I have been debating with myself whether I should try to publish these thoughts in a formal journal, but I think it’s wisest not to. After all, I am not an expert: I “dabbled” in machine learning, but I never built a language model, and cognitive science is something I know precious little about.

Still, I think my thoughts are valuable, especially considering how often I read others trying to figure out what to make of GPT, Claude, or any of the other sophisticated models.

I would like to begin with two working definitions.

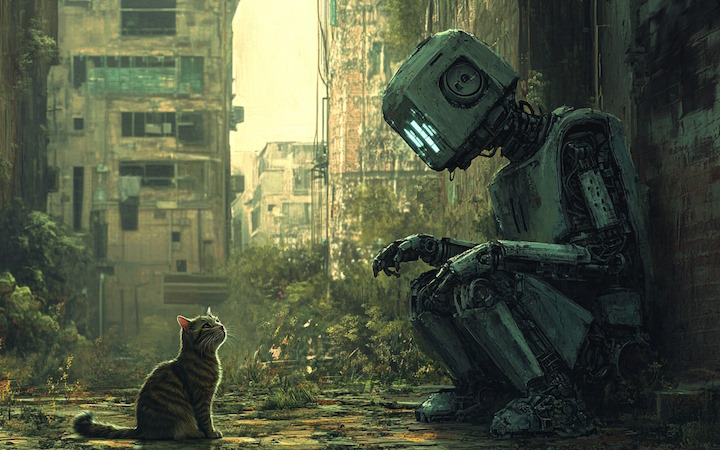

I call an entity sentient if it has the ability to form a real-time internal model of its environment with itself in it, and use this model to plan its actions. Under this definition, humans are obviously sentient. So are cats, and so are self-driving automobiles.

I call an entity sapient if it has the ability to reason about its own capabilities and communicate its reasoning to others. By writing this very sentence, I am demonstrating my own sapience. Cats are obviously not sapient, nor are self-driving automobiles. So humans are unique (as far as we know): we are both sapient and sentient.

But then come LLMs. LLMs do not have an internal model of their environment. They do not model themselves in the context of that environment. They do not plan their actions. They just generate text in response to input text. There’s no sentience there.

Yet, under my definition LLMs are clearly sapient. They have the ability to describe their own capabilities, reason about those capabilities, and communicate their reasoning to others. In fact, arguably they are more sapient than many human beings I know!

This then raises an interesting question. What does it take for an intelligence to be “general purpose”, that is, capable of acting freely, setting its own goals, pursuing these goals with purpose, and learning from experience? Is it sufficient to have both sapience and sentience? Could we just install a language model as a software upgrade to a self-driving car and call it mission accomplished?

Not quite. There are two more elements that are present in cats and humans, but not in self-driving cars or language models, at least not at the level of sophistication that we need.

First, short-term memory. Language models have none. How come, you wonder? They clearly “remember” earlier parts of a conversation, don’t they? Well… not exactly. Though it is not readily evident when you converse with one, what actually happens is that every turn in the conversation starts with a blank slate. At every turn, the language model receives a copy of the entire conversation up to that point. This creates the illusion of memory: the model “remembers” what was said earlier because its most recent instance received that full transcript along with your last question. This method works for brief conversations, but a general-purpose intelligence will clearly need something more sophisticated. (As an interim solution, to allow for conversations of unlimited length without completely losing context, I set up my own front end to Claude and GPT so that when the transcript gets too long, it asks the LLM itself to replace it with a summary.)
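For readers who want to see the mechanics, here is a minimal Python sketch of the idea. It is not the actual code of my front end, just an illustration under a few stated assumptions: `complete` is a hypothetical function standing in for whatever chat API you call (it is not part of any real library), the character budget `max_chars` is a stand-in for the token limits real models impose, and the wording of `SUMMARY_PROMPT` is made up for illustration.

```python
from typing import Callable

# A message is a (role, text) pair, e.g. ("user", "Hello").
Message = tuple[str, str]
# Hypothetical stand-in for a call to Claude, GPT, etc.: it receives the full
# list of messages and returns the model's reply. Not a real library API.
CompleteFn = Callable[[list[Message]], str]

# Assumed wording; any instruction asking for a faithful summary would do.
SUMMARY_PROMPT = "Summarize our conversation so far, keeping every detail needed to continue it."


class Conversation:
    def __init__(self, complete: CompleteFn, max_chars: int = 8000) -> None:
        self.complete = complete
        self.max_chars = max_chars  # assumed budget; real limits are counted in tokens
        self.transcript: list[Message] = []

    def _size(self) -> int:
        # Total length of everything that will be resent on the next turn.
        return sum(len(text) for _, text in self.transcript)

    def _compact(self) -> None:
        # Ask the model itself to condense the transcript, then replace the
        # transcript with that summary (kept as a user/assistant pair so the
        # roles still alternate when the next question is appended).
        summary = self.complete(self.transcript + [("user", SUMMARY_PROMPT)])
        self.transcript = [("user", SUMMARY_PROMPT), ("assistant", summary)]

    def ask(self, question: str) -> str:
        self.transcript.append(("user", question))
        # The model keeps no state between calls: every turn resends the
        # entire transcript, which is what creates the illusion of memory.
        reply = self.complete(list(self.transcript))
        self.transcript.append(("assistant", reply))
        if self._size() > self.max_chars:
            self._compact()
        return reply
```

Plugging in a stub model that merely reports how many messages it received shows the behavior: the count grows by two with every turn (the model sees the whole history each time) and drops back once the transcript has been replaced by its summary.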

Second, the ability to learn. That “P” in GPT stands for pretrained. Language models today are static models, pretrained by their respective publishers. For a general-purpose intelligence, it’d be important to implement some form of continuous learning capability.

So there you have it. Integration of “sentience” (a real-time internal model of the environment with oneself in it) and “sapience” (the ability to reason and communicate about one’s own capabilities) along with continuous learning and short-term memory. I think that once these features are fully integrated into a coherent whole, we will witness the birth of a true artificial general intelligence, or AGI. Of course we might also wish to endow that entity with agency: the ability to act on its own, as opposed to merely responding to user requests; the ability to continuously experience the world through senses (visual, auditory, etc.); not to mention physical agency, the ability to move around, and manipulate things in, physical reality. (On a side note, what if our AGI is a non-player character, NPC, in a virtual world? What would be the ethical implications?)

Reading what I have written so far also reminds me why it is wiser not to seek formal publication. For all I know, many of these thoughts have already been expressed elsewhere. I know far too little about the current state of the art of research in these directions or about the relevant literature. So let me just leave these words here on my personal blog, as my uninformed (if not clever, then at least, I hope, not painfully dumb) musings.

FYI: Google appears to have misrepresented this post in my weekly Google Alert for “Spinor” by listing it as:

“Cats are obviously not sentient, nor are self-driving automobiles. So humans are unique (as far as we know): we are both conscious and sentient. But …” (sic),

…while your post says “Cats are obviously not sapient,…”.

As for the substance of your post, it will help me in my own, less extensive examination of ChatGPT’s “abilities”. Your definitions and insights provide food for thought, and I will reply further if I can think of anything constructive to add.

Incidentally, I have yet to find an easier way to copy mathematical material into a .docx file than taking screenshots. None of the methods ChatGPT suggested were practicable for me (my programming skills and facilities are well past their use-by date). Any suggestions, please?

(My weekly Google Alert is set to find articles on the mathematical objects, and I get your Spinor Info posts as a bonus.)

Google is not wrong: I indeed used confusing language in the original version of this post (sentience and consciousness as opposed to sapience and sentience, which in retrospect makes a lot more sense), which I have since corrected. Hopefully, Google will update its search index eventually.

As for the math, it’s all LaTeX, using a MathJax extension in WordPress. There is indeed no easy way (not even a hard way) to copy-paste the equations into Word. Right-clicking an equation brings up a menu and you can copy-paste the LaTeX, but Word has rather limited abilities to parse LaTeX. It works sometimes, but don’t expect perfection.

I see what you mean about equations in Word. Thanks, Viktor! – Paul