I’ve been skeptical about the validity of the OPERA faster-than-light neutrino result, but I’ve been equally skeptical about some of the naive attempts to explain it. Case in point: in recent days, a supposed explanation (updated here) has been widely reported in the popular press, and it had to do with a basic omission concerning the relativistic motion of GPS satellites. An omission that almost certainly did not take place… after all, experimentalists aren’t idiots. (That said, they may have missed a subtle statistical effect, such as a small difference in beam composition between the leading and trailing edges of a pulse. In any case, the neutrino spectrum should have been altered by Cherenkov-type radiation through neutral current weak interactions.)

Maybe I’ve been watching too much Doctor Who lately.

Many of my friends asked me about the faster-than-light neutrino announcement from CERN. I must say I am skeptical. One reason why I am skeptical is that no faster-than-light effect was observed in the case of supernova 1987A, which exploded in the Large Magellanic Cloud some 170,000 light years from here. Had there been such an effect of the magnitude supposedly observed at CERN, neutrinos from this supernova would have arrived years before visible light, but that was not the case. Yes, there are ways to explain away this (the neutrinos in question have rather different energy levels) but these explanations are not necessarily very convincing.
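To put a rough number on "years before", here is a back-of-the-envelope sketch. It assumes a fractional speed excess of about 2.5×10⁻⁵, roughly what OPERA reported; that figure is not quoted above, so treat it as my assumption. The distance is the 170,000 light years mentioned in the text, and the function name is purely illustrative.

```python
# Back-of-the-envelope: how early would SN 1987A neutrinos have arrived?
# ASSUMPTION: fractional speed excess (v - c)/c ~ 2.5e-5, roughly the value
# OPERA reported; this number is not taken from the text above.
SPEED_EXCESS = 2.5e-5
DISTANCE_LY = 170_000  # distance to the supernova, as quoted in the text


def lead_time_years(distance_ly: float, speed_excess: float) -> float:
    """Years by which a particle moving at c * (1 + speed_excess) beats light."""
    light_travel_time = distance_ly  # in years, by definition of a light year
    particle_travel_time = distance_ly / (1.0 + speed_excess)
    return light_travel_time - particle_travel_time


if __name__ == "__main__":
    lead = lead_time_years(DISTANCE_LY, SPEED_EXCESS)
    print(f"Neutrinos would lead the light by about {lead:.2f} years")
```

With these inputs the lead works out to a little over four years, which is the sense in which "years before visible light" should be read.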

Another reason, however, is that faster-than-light neutrinos would be eminently usable in a technological sense; if it is possible to emit and observe them, it is almost trivial to build a machine that sends a signal in a closed timelike loop, effectively allowing us to send information from the future to the present. In other words, future me should be able to send present me a signal, preferably with the blueprints for the time machine of course (why do all that hard work if I can get the blueprints from future me for free?) So, I said, if faster-than-light neutrinos exist, then future me should contact present me in three…, two…, one…, now! Hmmm… no contact. No faster-than-light neutrinos, then.

But that’s when I suddenly remembered an uncanny occurrence that happened to me just hours earlier, yesterday morning. We ran out of bread, and we were also out of the little mandarin or clementine oranges that I like to have with my breakfast. So I took a walk, visiting our favorite Portuguese bakery on Nelson street, with a detour to the nearby Loblaws supermarket. On my way, I walked across a small parking lot, where I suddenly spotted something: a mandarin orange on the ground. I picked it up… it seemed fresh and completely undamaged. Precisely what I was going out for. Was it just a coincidence? Or perhaps future me was trying to send a subtle signal to present me about the feasibility of time machines?

If it’s the latter, maybe future me watched too much Doctor Who, too. Next time, just send those blueprints.

Now is the time to panic! At least this was the message I got from CNN yesterday, when it announced the breaking news: an explosion occurred at a French nuclear facility.

I decided to wait for the more sobering details. I didn’t have to wait long, thanks to Nature (the science journal, not mother Nature). They kindly informed me that “[…] the facility has been in operation since 1999. It melts down lightly-irradiated scrap metal […] It also incinerates low-level waste” and, most importantly, that “The review indicates that the specific activity of the waste over a ten-year period is 200×10⁹ Becquerels. For comparison, that’s less than a millionth the radioactivity estimated to have been released by Fukushima […]”

Just to be clear, this is not the amount of radioactivity released by the French site in this accident. This is the total amount of radioactivity processed by this site in 12 years. No radioactivity was released by the accident yesterday.

These facts did not prevent the inevitable: according to Nature, “[t]he local paper Midi Libre is already reporting that several green groups are criticizing the response to the accident.” These must be the same green groups that just won’t be content until we have all climbed back up the trees and stopped farting.

Since I mentioned facts, here are two more numbers:

- Number of people killed by the Fukushima quake: ~16,000 (with a further ~4,000 missing)

- Number of people killed by the Fukushima nuclear power station meltdowns: 0

All fear nuclear power! Panic now!

Our paper on the temporal behavior of the anomalous acceleration of Pioneer 10 and 11 is now published online by Physical Review Letters, and it is an Editor’s Suggestion, featured in Physical Review Focus.

I am reading a very interesting paper by Mishra and Singh. In it, they claim that simply accounting for the gravitational quadrupole moment in a matter-filled universe would naturally produce the same gravitational equations of motion that we have been investigating with John Moffat these past few years. If true, this work would imply that our Scalar-Tensor-Vector Gravity (STVG) is in fact an effective theory (which is not necessarily surprising). Its vector and scalar degrees of freedom may arise as a result of an averaging process. The fact that they recover not only the STVG acceleration law but also the correct numerical value of at least one of the STVG constants suggests that this may be more than a mere coincidence. Needless to say, I am intrigued.

As I’ve been asked about this more than once before, I thought I’d write down an answer to a simple question concerning the Pioneer spacecraft: if the “thermal hypothesis”, namely that the spacecraft are decelerating due to the heat they radiate, is true, how come this deceleration diminishes more rapidly, with a half-life of 20-odd years, than the primary heat source on board, which is plutonium-238 fuel with a half-life of 87.74 years?

The answer is simple: there are other half-lives on board. Notably, the half-life of the efficiency of the thermocouples that convert the heat of plutonium into electricity.

Now most of that heat from plutonium is simply wasted; it is radiated away, and while it may produce a recoil force, it does so with very low efficiency, say, 1%. The thermocouples convert about 6% of heat into electricity, but as the plutonium fuel cools and the thermocouples age, their efficiency decreases (this is in fact measurable, as telemetry tells us exactly how much electricity was generated on board at any given moment). All that electrical energy has to go somewhere… and indeed it does, powering all on-board instrumentation that, like a home computer, ultimately turns all the energy it consumes into heat. This heat is radiated away, and it is in fact converted into a recoil force with an efficiency of about 40%.

These are all the numbers we need. The recoil force, then, will be proportional to 1% of the waste heat (100% − 6% = 94% of the total thermal power) plus 40% of the remaining 6%, where the total thermal power is, say, 2500 W at the beginning. The total power will decrease by a factor of \(2^{-T/87.74}\), so after \(T\) years, it will be \(2500\times 2^{-T/87.74}\) W. As to the thermocouple efficiency, its half-life may be around 30 years; so the electrical conversion efficiency goes from 6% to \(6\times 2^{-T/30.0}\) % after \(T\) years.

So the overall recoil force can be calculated as being proportional to

$$P(T)=2500\times 2^{-T/87.74}\times\left\{\left[1-0.06\times 2^{-T/30.0}\right]\times 0.01+0.06\times 2^{-T/30.0}\times 0.4\right\}.$$

(This actually gives a result in watts. To convert it into an actual force, we need to divide by the speed of light, 300,000,000 m/s.) With a bit of simple algebra, this formula can be simplified to

$$P(T)=25.0\times 2^{-T/87.74}+58.5\times 2^{-T/22.36}.$$
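For anyone who wants the intermediate steps, here is my own expansion of that algebra, using only the illustrative numbers above. The curly bracket collapses to a constant plus a single decaying term:

$$\left[1-0.06\times 2^{-T/30.0}\right]\times 0.01+0.06\times 2^{-T/30.0}\times 0.4=0.01+0.0234\times 2^{-T/30.0}.$$

Multiplying by \(2500\times 2^{-T/87.74}\) then gives the two coefficients, and the product of the two exponentials adds the inverse half-lives:

$$2500\times 0.01=25.0,\qquad 2500\times 0.0234=58.5,\qquad \frac{1}{87.74}+\frac{1}{30.0}\simeq\frac{1}{22.36}.$$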

The most curious thing about this result is that the recoil force is dominated by a term that has a half-life of only 22.36 years… which is less than the half-life of either the plutonium fuel or the thermocouple efficiency.

The numbers I used are not the actual numbers from telemetry (though they are not too far from reality) but this calculation still demonstrates the fallacy of the argument that just because the power source has a specific half-life, the thermal recoil force must have the same half-life.
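The calculation is easy to check numerically. The sketch below uses only the illustrative numbers from this post (again, not actual telemetry values); the constant and function names are mine. It evaluates both the original and the simplified form of \(P(T)\) and converts the result into a force.

```python
# Toy model of the Pioneer thermal recoil, using the illustrative numbers
# from the text (NOT actual telemetry values).
P0_WATTS = 2500.0        # total thermal power at T = 0
FUEL_HALF_LIFE = 87.74   # years, plutonium-238
TC_HALF_LIFE = 30.0      # years, assumed half-life of thermocouple efficiency
TC_EFFICIENCY0 = 0.06    # initial heat-to-electricity conversion efficiency
WASTE_RECOIL = 0.01      # recoil efficiency of the waste heat
ELEC_RECOIL = 0.40       # recoil efficiency of electrically generated heat
SPEED_OF_LIGHT = 3.0e8   # m/s, rounded as in the text

# Two exponentials multiplied together decay with the combined half-life.
FAST_HALF_LIFE = 1.0 / (1.0 / FUEL_HALF_LIFE + 1.0 / TC_HALF_LIFE)


def recoil_power(t_years: float) -> float:
    """Directed thermal power in watts, original (unsimplified) form."""
    total = P0_WATTS * 2.0 ** (-t_years / FUEL_HALF_LIFE)
    efficiency = TC_EFFICIENCY0 * 2.0 ** (-t_years / TC_HALF_LIFE)
    return total * ((1.0 - efficiency) * WASTE_RECOIL + efficiency * ELEC_RECOIL)


def recoil_power_simplified(t_years: float) -> float:
    """Same quantity written as the sum of a slow and a fast decaying term."""
    slow = 25.0 * 2.0 ** (-t_years / FUEL_HALF_LIFE)
    fast = 58.5 * 2.0 ** (-t_years / FAST_HALF_LIFE)
    return slow + fast


def recoil_force_newtons(t_years: float) -> float:
    """Recoil force: directed power divided by the speed of light."""
    return recoil_power(t_years) / SPEED_OF_LIGHT


if __name__ == "__main__":
    print(f"combined half-life of the fast term: {FAST_HALF_LIFE:.2f} years")
    for t in (0, 10, 20, 30):
        print(t, recoil_power(t), recoil_power_simplified(t), recoil_force_newtons(t))
```

The two forms agree to floating-point precision, and the combined half-life of the fast term comes out at the 22.36 years quoted above.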

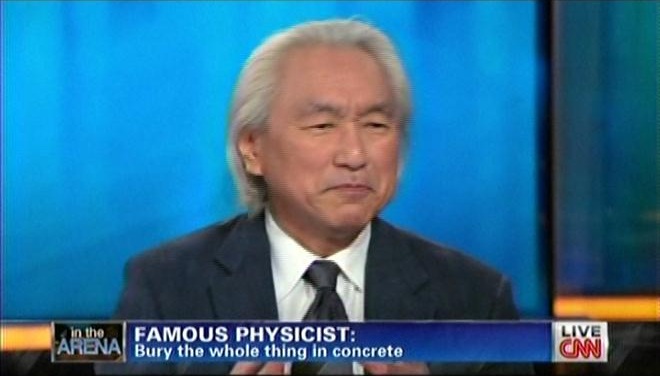

The headline on CNN tonight reads, “An American Fukushima?” The topic: the possibility of wildfires reaching the nuclear laboratories at Los Alamos. The guest? Why, it’s Michio Kaku again!

What I first yelled in exasperation, I shall not repeat here, because I don’t want my blog to be blacklisted for obscenity. Besides… I am still using Kaku’s superb Quantum Field Theory, one of the best textbooks on the topic, so I still have some residual respect for him. But the way he is prostituting himself on television, hyping and sensationalizing nuclear accidents… or non-accidents, as the case might be… It is simply disgusting.

Dr. Kaku, in the unlikely case my blog entry catches your attention, here’s some food for thought. The number of people who died in Japan’s once-in-a-millennium megaquake and subsequent tsunami: tens of thousands. The number of people who died as a result of the Fukushima meltdowns: ZERO. Thank you for your attention.

One of the biggest challenges in our research of the Pioneer Anomaly was the recovery of old mission data. It is purely by chance that most of the mission data could be recovered; documents were saved from the dumpster, data was read from deteriorating tapes, old formats were reconstructed using fragmented information. If only there had been a dedicated effort to save all raw mission data, our job would have been much easier.

This is why I am reading with alarm that there are currently no plans to save all the raw data from the Tevatron. This is really inexcusable. So what if the data are 20 petabytes? In this day and age, even that is not excessive… a petabyte is just over 300 3 TB hard drives, which are the highest capacity drives currently on the market. If I can afford to have more than 0.03 petabytes of storage here in my home office, surely the US Government can find a way to fund the purchase and maintenance of a data storage facility with a few thousand hard drives, in order to preserve knowledge that American taxpayers paid many millions of dollars to build in the first place.
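The drive count is simple arithmetic. A minimal sketch, assuming decimal units (1 PB = 1000 TB, as drive vendors count) and no allowance for redundancy, backups, or spares, all of which a real archive would need:

```python
import math

DRIVE_TB = 3       # capacity of one drive, as in the text
TB_PER_PB = 1000   # ASSUMPTION: decimal units, as drive vendors count


def drives_needed(petabytes: float, drive_tb: float = DRIVE_TB) -> int:
    """Minimum number of drives to hold the data once, with no redundancy."""
    return math.ceil(petabytes * TB_PER_PB / drive_tb)


if __name__ == "__main__":
    print(drives_needed(1))    # one petabyte
    print(drives_needed(20))   # the Tevatron figure quoted in the text
```

That gives 334 drives per petabyte and 6667 drives for 20 petabytes, so "a few thousand" holds even before any redundancy is added.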

I just finished reading Tommaso Dorigo’s excellent blog post about the new results from Fermilab. The bottom line:

- There is a reason why a 3-σ result is not usually accepted as proof of discovery;

- The detected signal is highly unlikely to be a Higgs particle;

- It may be something exotic going beyond the Standard Model, such as a Z’ neutral vector boson;

- Or, it may yet turn out to be nothing, a modeling artifact that will eventually go away after further analysis.
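To make the first point concrete, here is a small sketch that converts a significance in σ into the probability of a background fluctuation at least that large. It assumes the usual one-sided Gaussian convention; this is my own illustration, not something taken from Dorigo's post, and the function name is hypothetical.

```python
import math


def one_sided_p_value(n_sigma: float) -> float:
    """Probability that a Gaussian fluctuates upward by at least n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


if __name__ == "__main__":
    for n in (3, 5):
        p = one_sided_p_value(n)
        print(f"{n} sigma: p = {p:.3e} (about 1 in {1.0 / p:,.0f})")
```

On this convention a 3-σ excess corresponds to a fluctuation probability of roughly one in 740, while the customary 5-σ discovery threshold is about one in 3.5 million.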

Interesting times.

Let me preface this with… I have huge respect for eminent physicist Michio Kaku, whose 1993 textbook, Quantum Field Theory: A Modern Introduction, continues to occupy a prominent place on my “primary” bookshelf, right above my workstation.

But… I guess that was before Kaku began writing popular science books and became a television personality.

Today he appeared on CNN and astonished me by suggesting that the best course of action is to bury and entomb Fukushima like they did with Chernobyl.

Never mind that in Chernobyl, the problem was a raging graphite fire that had to be put out. Never mind that Chernobyl had no containment building to begin with. Never mind that in Chernobyl, there was a “criticality incident”, a runaway chain reaction, whereas in Fukushima, the problem is decay heat. Never mind that in Chernobyl, the problem was localized to a single reactor, whereas in Fukushima, it is several reactors and also waste fuel pools that are threatened. Never mind that the critical problem at Fukushima is the complete loss of electrical power. Never mind that a single chunk of burning graphite flying out of the Chernobyl inferno probably carried more radioactivity than the total amount released by Fukushima after it’s all over. Who cares about the actual facts when you can make dramatic statements on television about calling in the air force of the Red Army, and peddle your latest book at the same time? I do not wish to use my blog to speak ill of a physicist that I respect but I think Dr. Kaku’s comments are unfounded, inappropriate, sensationalist, and harmful. I feel very disappointed, offended even; it’s one thing to hear this kind of stuff from the mouths of ignorant journalists or pundits, but someone like Dr. Kaku really, really should know better.

I have written several papers concerning the possible contribution of heat emitted by radioisotope thermoelectric generators (RTGs) to the anomalous acceleration of the Pioneer spacecraft. Doubtless I’ll write some more.

But those RTGs used for space missions number only a handful, and with the exception of those that fell back to the Earth (and were safely recovered) they are all a safe distance away (a very long way away indeed) from the Earth.

However, RTGs were also used here on the ground. In fact, according to a report I just finished reading, a ridiculously high number of them, some 1500, were deployed by the former Soviet Union to power remote lighthouses, navigation beacons, meteorological stations, and who knows what else. These installations are unguarded, and the RTGs themselves are not tamper-proof. Many have ended up in the hands of scrap metal scavengers (some of whom actually died after receiving a lethal dose of radiation), some sank to the bottom of the sea, some remain exposed to the elements with their radioactive core compromised. Worse yet, unlike their counterparts in the US space program which used plutonium, these RTGs use strontium-90 as their power source; strontium is absorbed by the body more readily than plutonium, so my guess is, exposure to strontium is even more hazardous than exposure to plutonium.

The report is a few years old, so perhaps things have improved a little since. Or, perhaps they have gotten worse… who knows how many radioactive power sources have since found their way into unauthorized hands.

It’s official: the work we are doing about the Pioneer Anomaly qualifies as popular science according to Popular Science, as they just published a featured article about it.

I admit that it was with a strong sense of apprehension that I began reading the piece. What you say to a journalist and what appears in print are often not very well correlated, as politicians know all too well. My apprehension was not completely unjustified, as the article contains some (minor) technical errors, misquotes us slightly in places, and, what is perhaps most troubling, attributes to us some work that was done by others (e.g., thermal engineers at JPL). These flaws notwithstanding (and this article fares better than most that appeared in recent years, I think), it is nice to have one’s efforts recognized.

This sunset above an eerie landscape of orange-lit clouds looked much nicer to the naked eye than it looks in a picture:

Yes, it means that I am back home. As a matter of fact, I arrived back home some 2.5 hours early. My flight from Mexico City landed 15 minutes early in Toronto, and after dashing through the airport like crazy, I managed to make it to an earlier Ottawa flight… which had ONE (!) seat left. Talk about luck.

It was an interesting conference. Useful discussions, good people to meet. I had a chance to talk about MOG cosmology to a not altogether unfriendly audience.

Still, it’s good to be home. Sleep in my own bed and all that.

This is John Moffat, talking about blogs, dark matter, and the Bullet Cluster:

It’s going to be my turn Friday. Of course I won’t be able to take a picture of me.

Sometimes, I worry that our work on Modified Gravity exists in a vacuum (pun intended). A blog I came across just convinced me otherwise. It is good to see that people actually read and appreciate our recent results!

John Moffat and I now have a bet.

Perhaps in the not too distant future, quantum entanglement will be testable over greater distances, possibly involving spacecraft. Good.

Now John believes that these tests will eventually show that entanglement will be attenuated at greater distances. This would mean, in my mind, that entanglement involves the transmission of a real, physical (albeit superluminal) signal from one of the entangled particles to the other.

I disagreed rather strongly; if such attenuation were observed, it’d certainly turn whatever little I think I understand about quantum theory upside down.

Just to be clear, it’s not something John feels too strongly about, so we didn’t bet a great deal of money. John recently bet a great deal more on the non-existence of the Higgs boson. No, not with me… on that subject, we are in complete agreement, as I also do not believe that the Higgs boson exists.

Electroweak theory has several coupling constants: there is g, there is g′, there is e, and then there is the Weinberg angle θW and its sine and cosine, and I am always worried about making mistakes.

Well, here’s a neat way to remember: the three constants and the Weinberg angle have a nice geometrical relationship (as should be evident from the fact that the Weinberg angle is just a measure of the abstract rotation that is used to break the symmetry of a massless theory).

This diagram also makes it clear that so long as you keep the triangle a right triangle, all it takes is two numbers (e.g., e and θW) and the triangle is fully determined. This is true even when the coupling constants are running.
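The relations behind such a triangle are the standard tree-level ones, e = g sin θW = g′ cos θW, so that tan θW = g′/g and 1/e² = 1/g² + 1/g′². Here is a minimal sketch of the "two numbers fix everything" statement. The input values are purely illustrative (my assumption, not precision values), and the function name is hypothetical.

```python
import math


def couplings_from(e: float, theta_w: float) -> tuple[float, float]:
    """Return (g, g_prime) from e and the Weinberg angle (in radians).

    Uses the tree-level relations e = g sin(theta_w) = g' cos(theta_w).
    """
    g = e / math.sin(theta_w)
    g_prime = e / math.cos(theta_w)
    return g, g_prime


if __name__ == "__main__":
    # ASSUMPTION: illustrative inputs only, not precision values.
    e = 0.31
    theta_w = math.asin(math.sqrt(0.23))

    g, g_prime = couplings_from(e, theta_w)
    print(f"g = {g:.4f}, g' = {g_prime:.4f}")

    # In a right triangle with legs g and g', theta_w is the angle whose
    # tangent is g'/g, and e is the altitude dropped onto the hypotenuse.
    print(f"tan(theta_w) check: {g_prime / g:.6f} vs {math.tan(theta_w):.6f}")
    print(f"e from the legs: {g * g_prime / math.hypot(g, g_prime):.6f}")
```

Since the relations hold at any given scale, the same function applies to running couplings: feed in the values of e and θW at that scale.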

Our monster Living Review about the Pioneer anomaly is now officially published. There were times when I thought we’d never get to this point.

I have been reading the celebrated biography of Albert Einstein by Walter Isaacson, and in it, the chapter about Einstein’s beliefs and faith. In particular, the question of free will.

In Einstein’s deterministic universe, according to Isaacson, there is no room for free will. In contrast, physicists who accepted quantum mechanics as a fundamental description of nature could point at quantum uncertainty as proof that non-deterministic systems exist and thus free will is possible.

I boldly disagree with both views.

First, I look out my window at a nearby intersection where there is a set of traffic lights. This set is a deterministic machine. To determine its state, the machine responds to inputs such as the reading of an internal clock, the presence of a car in a left turning lane or the pressing of a button by a pedestrian who wishes to cross the street. Now suppose I incorporate into the system a truly random element, such as a relay that closes depending on whether an atomic decay process takes place or not. So now the light set is not deterministic anymore: sometimes it provides a green light allowing a vehicle to turn left, sometimes not, sometimes it responds to a pedestrian pressing the crossing button, sometimes not. So… does this mean that my set of traffic lights suddenly acquired free will? Of course not. A pair of dice does not have free will either.

On the other hand, suppose I build a machine with true artificial intelligence. It has not happened yet but I have no doubt that it is going to happen. Such a machine would acquire information about its environment (i.e., “learn”) while it executes its core program (its “instincts”) to perform its intended function. Often, its decisions would be quite unpredictable, but not because of any quantum randomness. They are unpredictable because even if you knew the machine’s initial state in full detail, you’d need another machine even more complex than this one to model it and accurately predict its behavior. Furthermore, the machine’s decisions will be influenced by many things, possibly involving an attempt to comply with accepted norms of behavior (i.e., “ethics”) if it helps the machine accomplish the goals of its core programming. Does this machine have free will? I’d argue that it does, at least insofar as the term has any meaning.

And that, of course, is the problem. We all think we know what “free will” means, but is that true? Can we actually define a “decision making system with free will”? Perhaps not. Think about an operational definition: given an internal state I and external inputs E, a free will machine will make decision D. Of course the moment you have this operational definition, the machine ceases to have what we usually think of as free will, its behavior being entirely deterministic. And no, a random number generator does not help in this case either. It may change the operational definition to something like, given internal state I and external inputs E, the machine will make decision Di with probability Pi, the sum of all Pi-s being 1. But it cannot be this randomization of decisions that bestows a machine with free will; otherwise, our traffic lights here at the corner could have free will, too.
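The two operational definitions can be written down in a few lines. This is only a toy sketch: the traffic-light rules, the probabilities and all names are made up for illustration, and a seeded pseudo-random generator stands in for the atomic decay relay.

```python
import random


def deterministic_machine(state: dict, inputs: dict) -> str:
    """D = f(I, E): the same state and inputs always yield the same decision."""
    if inputs["button_pressed"] and state["clock"] % 2 == 0:
        return "green"
    return "red"


def randomized_machine(state: dict, inputs: dict, rng: random.Random) -> str:
    """Decision D_i is chosen with probability P_i; the P_i sum to 1."""
    if inputs["button_pressed"]:
        probabilities = {"green": 0.5, "red": 0.5}  # hypothetical values
    else:
        probabilities = {"green": 0.0, "red": 1.0}
    decisions = list(probabilities)
    weights = [probabilities[d] for d in decisions]
    return rng.choices(decisions, weights=weights, k=1)[0]


if __name__ == "__main__":
    state = {"clock": 4}
    inputs = {"button_pressed": True}
    print(deterministic_machine(state, inputs))

    rng = random.Random(0)  # stand-in for a truly random source
    print([randomized_machine(state, inputs, rng) for _ in range(5)])
```

Neither function looks like a candidate for free will: the first is a lookup, the second is a lookup followed by a throw of the dice.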

So perhaps the question about free will fails for the simple reason that free will is an ill-defined and possibly self-contradictory concept. Perhaps it’s just another grammatically correct phrase that has no more actual meaning than, say, “true falsehood” or “a number that is odd and even” or “the fourth side of a triangle”.

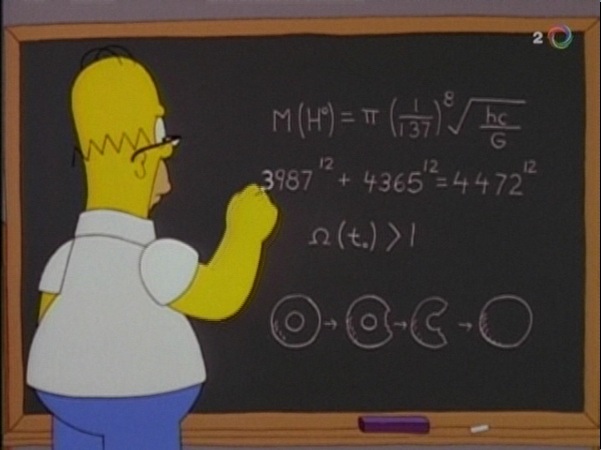

This Homer Simpson is one smart fellow. While he was trying to compete with Edison as an inventor, he accidentally managed to discover the mass of the Higgs boson, disprove Fermat’s theorem, discover that we live in a closed universe, and he was doing a bit of topology, too.

His Higgs mass estimate is a tad off, though. Whether or not the Higgs exists, the jury is still out, but its mass is definitely not around 775 GeV.
