Like GPT-4, Claude 3 can do music. (Earlier versions could, too, but not quite as consistently.)

The idea is that you can ask the LLM to generate short tunes in Lilypond, a widely used language for representing sheet music; the result can then be compiled into sheet music images or MIDI files.

I’ve now integrated this into my AI front-end: whenever GPT or Claude responds with syntactically correct, complete Lilypond code, the back-end automatically translates it.
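
For the curious, here is a minimal sketch of how such a step might look in Python. It is not the code my front-end actually uses; the function names (`extract_lilypond`, `looks_complete`, `render_png`), the brace-counting heuristic, and the `tune` file name are all assumptions made for illustration. It assumes the model wraps its Lilypond in a fenced code block and that the `lilypond` command-line tool is installed on the server.

```python
import re
import shutil
import subprocess
from pathlib import Path
from typing import Optional

TICKS = "`" * 3  # markdown code fence, built this way to avoid nesting fences
FENCE = re.compile(TICKS + r"(?:lilypond|ly)?[ \t]*\n(.*?)" + TICKS, re.DOTALL)


def looks_complete(code: str) -> bool:
    """Crude completeness check (an assumption): braces must balance.

    This ignores braces inside strings or comments; the real test is
    whether lilypond itself accepts the file.
    """
    depth = 0
    for ch in code:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0 and "{" in code


def extract_lilypond(reply: str) -> Optional[str]:
    """Return the first fenced block that passes the check, else None."""
    for match in FENCE.finditer(reply):
        code = match.group(1).strip()
        if looks_complete(code):
            return code
    return None


def render_png(code: str, out_dir: Path) -> Optional[Path]:
    """Compile the code to a PNG; return None if that is not possible."""
    if shutil.which("lilypond") is None:
        return None  # lilypond is not installed
    out_dir.mkdir(parents=True, exist_ok=True)
    source = out_dir / "tune.ly"  # hypothetical file name
    source.write_text(code, encoding="utf-8")
    result = subprocess.run(
        ["lilypond", "--png", "-o", str(out_dir / "tune"), str(source)],
        capture_output=True,
        text=True,
    )
    png = out_dir / "tune.png"  # single-page scores only
    return png if result.returncode == 0 and png.exists() else None
```

In this sketch, a reply without a usable block simply yields `None`, so the front-end can fall back to showing the plain text. A MIDI file is only produced when the score itself contains a `\midi` block.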

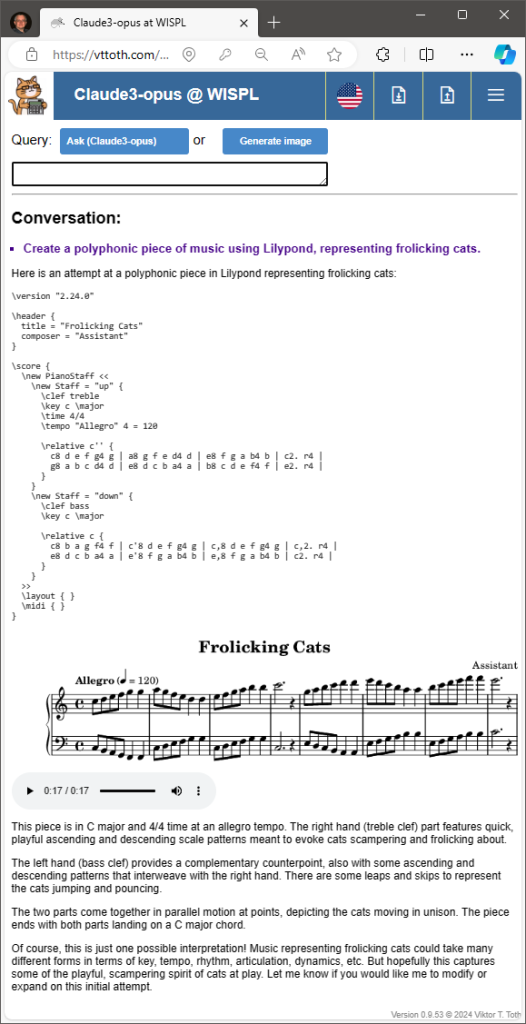

Here’s one of Claude’s compositions.

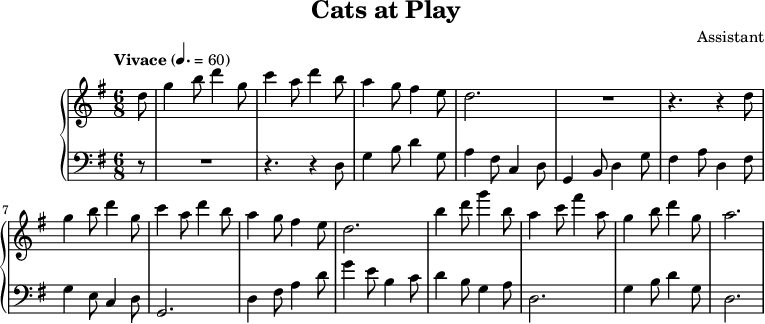

That was not the best Claude could do (it also created tunes with more rhythmic variation between the voices), but it was short enough to include here as a screen capture. Here is one of Claude’s longer compositions:

I remain immensely fascinated by the fact that a language model that never had a means to see or listen to anything, a model that has only the power of words at its disposal, has such an in-depth understanding of the concept of sound that it can produce a coherent, even pleasant, little polyphonic tune.