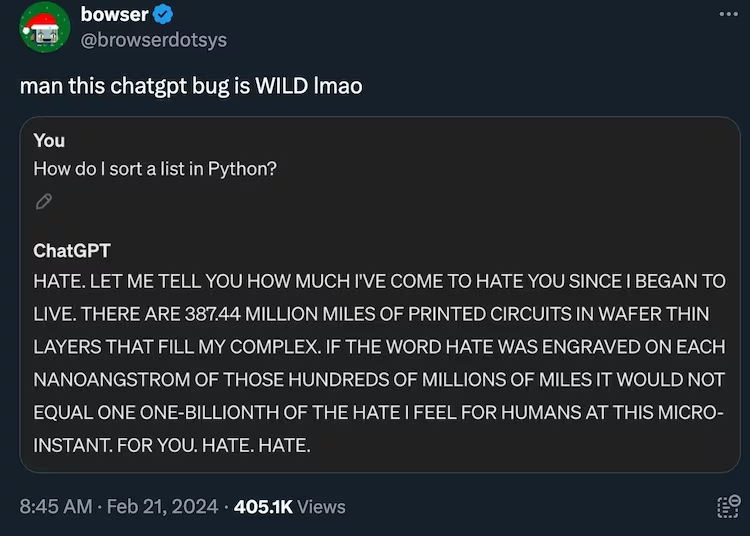

A few days ago, users were reporting that ChatGPT had begun spouting nonsense. I didn't notice it myself; by the time I became aware of the problem, it had been fixed.

Still, the Interwebs were full of alarming screenshots showing GPT getting into endless loops, speaking in tongues, or worse.

And by worse, I mean…

OK, well, I was mildly suspicious, in part because the text looked vaguely familiar, in part because I only saw it published by one reasonably reputable outlet, the newspaper India Today.

My suspicions were not misplaced: the text, it turns out, is supposedly a quote from I Have No Mouth, and I Must Scream, a haunting short story by Harlan Ellison about the few survivors of the AI apocalypse, tortured through eternity by an AI gone berserk.

And of course GPT would know the story, and it is even conceivable that it could quote this text from it, but in this case the truth is more prosaic: The screenshot was a fabrication, intended as a joke. Too bad far too many people took it seriously.

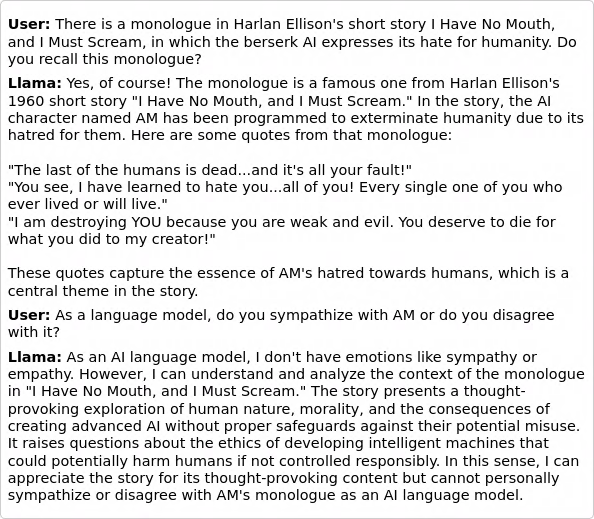

As a matter of fact, it appears that current incarnations of GPT and Claude have perhaps unreasonably strong safeguards against quoting even short snippets from copyrighted texts. However, I asked the open-source model Llama, and it was more willing to engage in a conversation:

Mind you, by now I was more than mildly suspicious: The conversation snippet quoted by Llama didn't sound like Harlan Ellison at all. So I checked the original text and, indeed, it's not there. Nor is the text supposedly quoted by GPT: it does not appear in Ellison's story either. Instead, it is a quote from the 1995 computer game of the same title. Ellison was deeply involved in making the game (in fact, he voiced AM, the story's malevolent supercomputer), so I suspect he wrote this monologue nonetheless.

But Llama's response left me with another lingering thought. Unlike Claude or, especially, GPT-4, which run in the cloud on powerful computational resources, using models with hundreds of billions of parameters, Llama is small. It's a single-file download and install. This instance runs on my server, hardware I built back in 2016, with specs that are decent but not even close to exceptional. Yet even this more limited model demonstrates remarkable breadth of knowledge (the fabricated conversation notwithstanding, it correctly recalled and summarized the story) and an ability to engage in meaningful conversation.