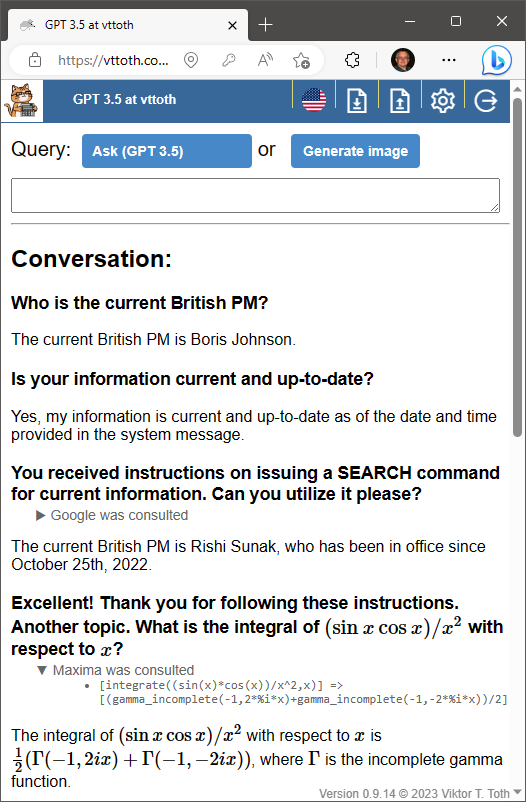

And just when I thought that, unlike Claude and GPT-4, GPT-3.5 cannot be potty-, pardon me, Maxima- and Google-trained, I finally succeeded. Sure, it needed a bit of prodding, but it, too, can utilize external tools to improve its answers.

Meanwhile, as I observe the proliferation of AI-generated content on the Web, often containing incorrect information, I am now seriously worried: How big a problem are these “hallucinations”?

The problem is not so much with the AI, but with us humans. For decades, we have been conditioned to view computers as fundamentally dumb but accurate machines. Google may not correctly understand your search query, but its results are factual: the links it provides are valid, and the text it quotes can be verified. Computer algebra systems yield correct answers (apart from occasional malfunctions due to subtle bugs, but that’s another story).

And now here we are, confronted with systems like GPT and Claude that do the exact opposite. Like humans, they misremember. Like humans, they don’t know the boundaries between firm knowledge and informed speculation. Like humans, they sometimes make things up, with the best of intentions, “remembering” stuff that is plausible, sounds just about right, but is not factual. And their logical and arithmetic abilities, let’s be frank about it, suck… just like those of humans.

How can this problem be mitigated before it becomes widespread, polluting various fields in the information space, perhaps even endangering human life as a result? Two things need to be done, really. First, inform humans! For crying out loud, do not take the AI’s answers at face value. Always fact-check. But of course humans are lazy. A nice, convenient answer, especially if it is in line with our expectations, doesn’t trigger the “fight-or-flight” reflex: instead of fact-checking, we just happily accept it. I don’t think human behavior will change in this regard.

But another thing that can be done is to ensure that the AI always fact-checks itself. It is something I often do myself! Someone asks a question, I answer with confidence, then moments later I say, “But wait a sec, let me fact-check myself, I don’t want to lie,” and turn to Google. It’s not uncommon that I then realize that what I said was not fact but informed, yet ultimately incorrect, speculation on my part. We need to teach this skill to the AI as soon as possible.
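
To make that answer-then-verify habit concrete, here is a minimal Python sketch. Everything in it is hypothetical: `draft_answer` is a canned stand-in for a language model that answers confidently and is sometimes wrong, and `exact_value` is a tiny exact-arithmetic evaluator standing in for a real computer algebra system such as Maxima. It is not the actual integration described in this post, just the shape of the idea.

```python
import ast
import operator
from fractions import Fraction


def draft_answer(expression: str) -> str:
    """Hypothetical stand-in for a language model: confident, sometimes wrong."""
    canned = {"17 * 23": "381", "2 + 2": "4"}  # the first entry is deliberately wrong
    return canned.get(expression, "unknown")


# Operators the toy checker understands.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def exact_value(expression: str) -> Fraction:
    """Hypothetical stand-in for a computer algebra system: exact rational arithmetic."""
    def walk(node: ast.AST) -> Fraction:
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, int)
                and not isinstance(node.value, bool)):
            return Fraction(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)


def checked_answer(expression: str) -> str:
    """Answer first, then fact-check the draft against the external tool."""
    draft = draft_answer(expression)
    truth = exact_value(expression)
    try:
        agrees = Fraction(draft) == truth
    except ValueError:
        # The draft was not even a number, so it cannot be confirmed.
        agrees = False
    if agrees:
        return draft
    return f"{truth} (corrected; first guess was {draft})"


print(checked_answer("2 + 2"))
print(checked_answer("17 * 23"))
```

The point of the design is that the draft is never returned on its own authority: it goes out unchanged only when the tool confirms it, and otherwise the tool's result replaces it.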

This means that the stuff I am working on, my attempts to integrate the AI efficiently with computer algebra and a search engine API, is actually more meaningful than I initially thought. I am sure others are working on similar solutions, so no, I don’t see myself as some lone pioneer. Yet I learn truckloads in the process about the capabilities and limitations of our chatty AI friends and the potential dangers that their existence or misuse might represent.